Created by: zhiqiu

When `clone(for_test=True)` is called after `minimize`, the appended backward part should be pruned correctly.

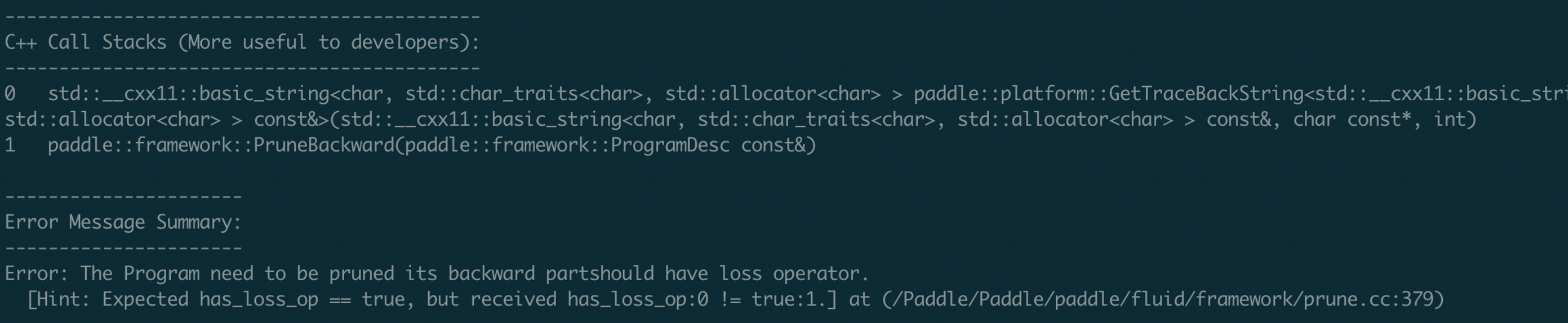

The origin implementation cannot handle program with conditional block. For example, it raises error like this,

The main problem is that the loss may not be in the global block, and the cloned program will have fewer blocks than the original program. There are two cases:

- Without `minimize` in the conditional block

def gen_loss1: ...

def gen_loss2: ...

sgd = fluid.optimizer.SGD()

# 在运行时根据pred选择执行gen_loss1还是gen_loss2

loss = fluid.layers.cond(pred, gen_loss1, gen_loss2)

sgd.minimize(loss)

for i in range(10):

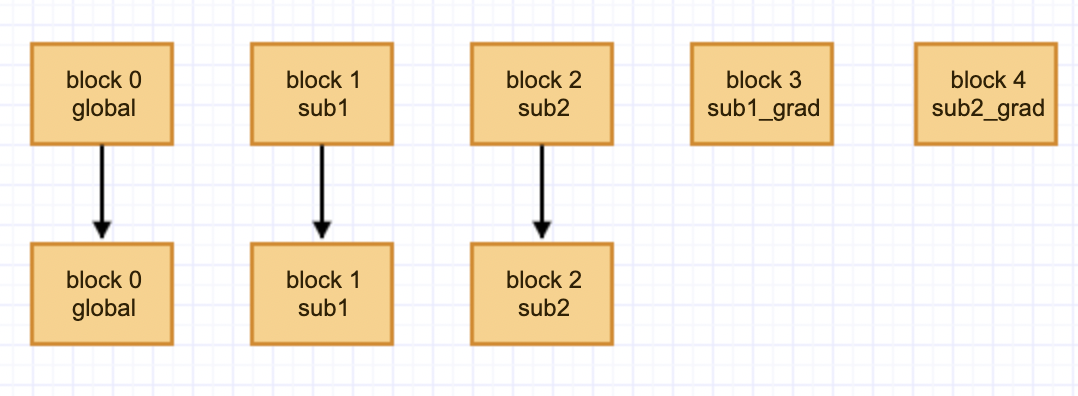

res = exe.run(feed, fetch_list=[loss.name]) In this case, the id of a block in cloned program and its corresponding origin one are identical, though the number of blocks is decreased.

In this case, each block in the cloned program has the same id as its corresponding block in the original program, even though the number of blocks decreases.

- With `minimize` in the conditional block

def gen_loss1:

loss1 = Net1()

sgd,m.minimize(loss1)

return loss1def gen_loss2:

loss2 = Net1()

sgd.minimize(loss2)

return loss2

# 在运行时根据pred选择执行gen_loss1还是gen_loss2

loss = fluid.layers.cond(pred, gen_loss1, gen_loss2)

for i in range(10):

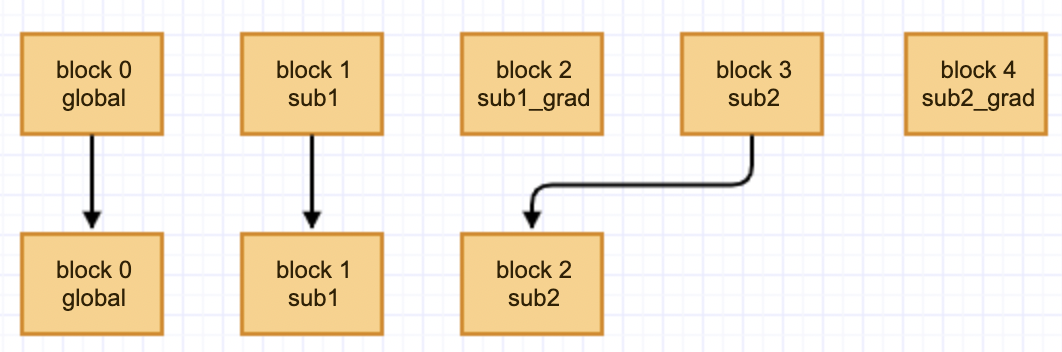

res = exe.run(feed, fetch_list=[loss.name]) In this case, the id of a block in cloned program and its corresponding origin one are not identical.

In this case, a block in the cloned program and its corresponding block in the original program do not have the same id.
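The two cases can be illustrated with a minimal sketch in plain Python (no Paddle dependency) that prunes backward blocks and renumbers the surviving ones. `Block` and `prune_backward_blocks` are hypothetical names, not Paddle's API, and the block layouts are assumptions chosen for illustration: in case 1 the backward blocks are appended after all forward blocks, while in case 2 we assume that calling `minimize` inside a branch creates a backward block right after that branch. The sketch returns a map from original block ids to pruned ids; for case 1 the map is the identity, for case 2 it is not.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class Block:
    idx: int                    # position of the block in the program
    parent_idx: Optional[int]   # None for the global block
    is_backward: bool = False   # hypothetical flag: block was created by minimize/backward


def prune_backward_blocks(blocks: List[Block]) -> Tuple[List[Block], Dict[int, int]]:
    """Drop backward blocks and renumber the kept ones contiguously.

    Returns the pruned blocks and a map {original id: pruned id}.
    Assumes every kept block's parent is also kept.
    """
    id_map: Dict[int, int] = {}
    for blk in blocks:
        if not blk.is_backward:
            id_map[blk.idx] = len(id_map)
    # Rebuild kept blocks with new ids; parent ids are remapped too.
    pruned = [
        Block(
            idx=id_map[blk.idx],
            parent_idx=None if blk.parent_idx is None else id_map[blk.parent_idx],
        )
        for blk in blocks
        if not blk.is_backward
    ]
    return pruned, id_map


# Case 1 (assumed layout): global, true branch, false branch, then backward blocks.
case1 = [Block(0, None), Block(1, 0), Block(2, 0), Block(3, 0, True), Block(4, 0, True)]
# Case 2 (assumed layout): each branch is followed by a backward block from its minimize.
case2 = [Block(0, None), Block(1, 0), Block(2, 1, True), Block(3, 0), Block(4, 3, True)]

_, m1 = prune_backward_blocks(case1)
_, m2 = prune_backward_blocks(case2)
print(m1)  # {0: 0, 1: 1, 2: 2}
print(m2)  # {0: 0, 1: 1, 3: 2}
```

In this sketch, keeping an explicit id map instead of assuming ids are unchanged lets any per-block information taken from the original program be looked up under the correct id in the pruned one, which covers both layouts with the same code.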

This PR handles both cases.