Created by: grygielski

This PR adds MKL-DNN support for the GELU activation. However, the formula used in the MKL-DNN kernel differs slightly from the one used in the native Paddle implementation.

Paddle uses the following formula:

out(x) = 0.5 * x * (1 + erf(x / sqrt(2)))

This exact form of GELU relies on the error function (erf), which can't be expressed in terms of elementary functions. Implementations of erf are therefore based on numerical approximations, for example a Maclaurin series.

MKL-DNN uses the following formula:
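To illustrate what a series-based erf looks like, here is a minimal sketch of the Maclaurin expansion, erf(x) = 2/sqrt(pi) * sum over n of (-1)^n * x^(2n+1) / (n! * (2n+1)). The function name `erf_maclaurin` and the default number of terms are assumptions made for this example; this is not the code Paddle or any particular math library uses.

```python
import math


def erf_maclaurin(x: float, terms: int = 30) -> float:
    """Approximate erf(x) with a truncated Maclaurin series.

    Illustrative only: the series converges for all x, but the
    truncation used here is only accurate for moderate |x|.
    """
    total = 0.0
    for n in range(terms):
        # n-th term: (-1)^n * x^(2n+1) / (n! * (2n+1))
        total += (-1) ** n * x ** (2 * n + 1) / (math.factorial(n) * (2 * n + 1))
    return 2.0 / math.sqrt(math.pi) * total


if __name__ == "__main__":
    for x in (0.5, 1.0, 2.0):
        print(x, erf_maclaurin(x), math.erf(x))
```

For moderate inputs the truncated series should agree closely with `math.erf`; for large |x| the alternating terms grow before they shrink, which is one reason practical implementations tend to switch strategies by input range.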

out(x) = 0.5 * x * (1 + tanh(sqrt(2/pi) * (x + 0.044715 * x^3)))

This approximation was proposed in the original GELU paper (https://arxiv.org/pdf/1606.08415.pdf) and consists only of elementary functions.

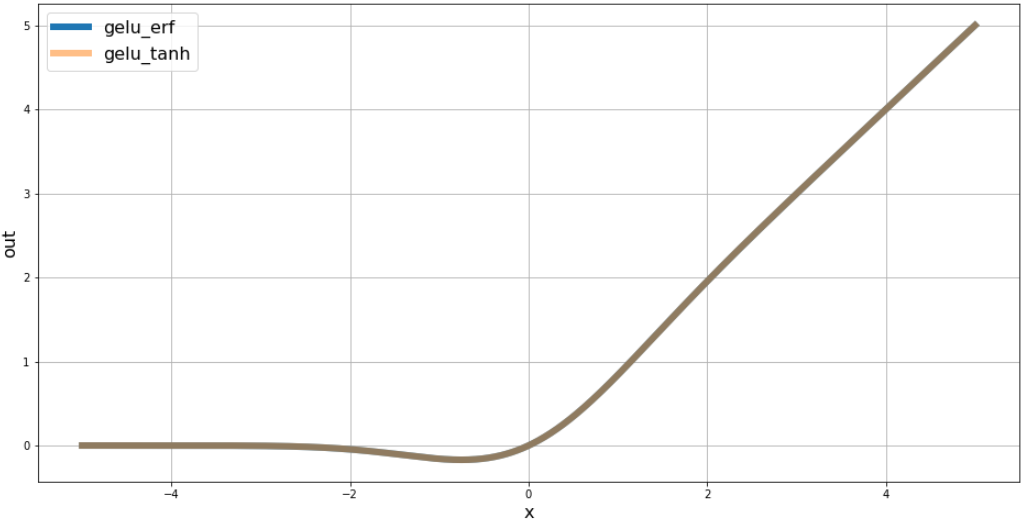

Plot comparison:

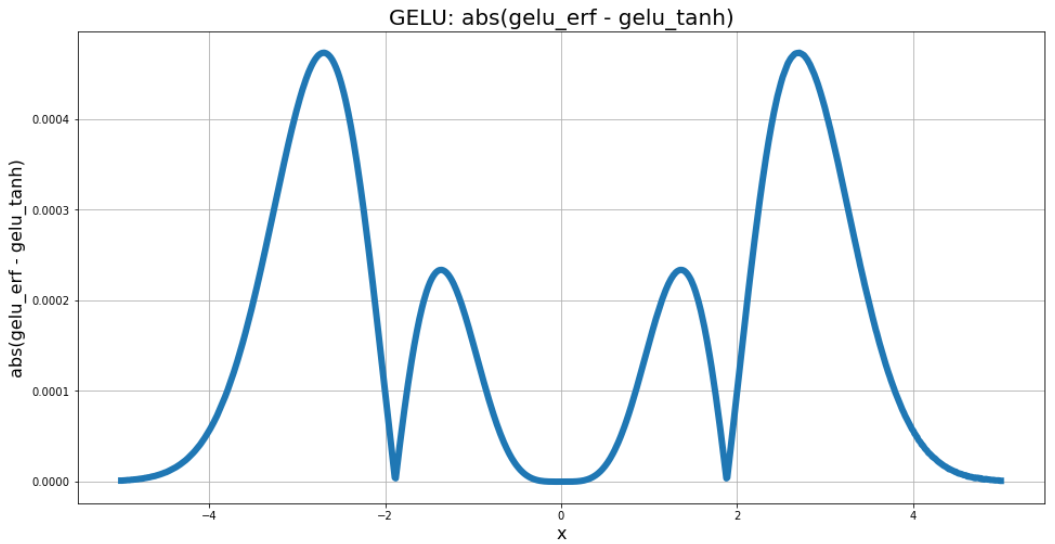

Since the two implementations can't be told apart on a plot at this scale, I plotted the absolute difference between them:

Maximum absolute difference between the two implementations:

0.00047326088
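A rough way to reproduce this comparison is sketched below in plain Python. It evaluates both formulas from this description in double precision on a uniform grid; the function names, the range [-6, 6] and the grid density are assumptions chosen for illustration. It does not call the Paddle or MKL-DNN kernels, so the result may differ slightly from the number above.

```python
import math


def gelu_erf(x: float) -> float:
    """Exact GELU: 0.5 * x * (1 + erf(x / sqrt(2)))."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x: float) -> float:
    """Tanh approximation: 0.5 * x * (1 + tanh(sqrt(2/pi) * (x + 0.044715 * x^3)))."""
    inner = math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)
    return 0.5 * x * (1.0 + math.tanh(inner))


def max_abs_diff(lo: float = -6.0, hi: float = 6.0, steps: int = 12001) -> float:
    """Largest |gelu_erf(x) - gelu_tanh(x)| over a uniform grid on [lo, hi]."""
    worst = 0.0
    for i in range(steps):
        x = lo + (hi - lo) * i / (steps - 1)
        worst = max(worst, abs(gelu_erf(x) - gelu_tanh(x)))
    return worst


if __name__ == "__main__":
    print("max abs difference:", max_abs_diff())
```

The grid search only samples the difference, so a finer grid or a wider range can be passed to `max_abs_diff` to check that the maximum is stable.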