Created by: zhhsplendid

This PR fixes a number of bugs. Because it touches a lot of the append-backward logic, I also ran CE and it passed: http://ce.paddlepaddle.org:8080/viewLog.html?buildId=69616&tab=buildResultsDiv&buildTypeId=PaddleModesl_ModelDebug_Model11Build

Details of the bugs:

-

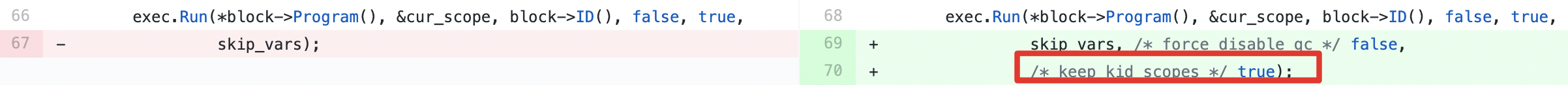

Executor will drop kid scopes but in conditional_block_op, we shouldn't drop kid scopes during conditional_block forward op since it can be used during backward. (But we can drop after grad_op)
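
To make the lifetime issue concrete, here is a simplified toy model in plain Python. The `Scope` class and the `conditional_block_forward` / `conditional_block_backward` functions are hypothetical stand-ins, not Paddle's C++ `Scope` or operator API, and Paddle's real scope hierarchy differs in detail. The forward pass saves values in a kid scope, the backward pass reads them, and only then are the kids dropped.

```python
class Scope:
    """Toy scope (hypothetical, not Paddle's C++ Scope): variables plus kid scopes."""

    def __init__(self, parent=None):
        self.parent = parent
        self.vars = {}
        self.kids = []

    def new_scope(self):
        kid = Scope(parent=self)
        self.kids.append(kid)
        return kid

    def drop_kids(self):
        self.kids.clear()


def conditional_block_forward(scope, x, drop_kids_after_forward):
    # The sub-block runs in a fresh kid scope and computes out = x * x.
    kid = scope.new_scope()
    kid.vars["x"] = x  # saved for the backward pass
    kid.vars["y"] = x * x
    scope.vars["out"] = kid.vars["y"]
    if drop_kids_after_forward:
        scope.drop_kids()  # dropping here loses the saved forward values


def conditional_block_backward(scope, out_grad):
    if not scope.kids:
        raise RuntimeError("forward kid scope was dropped; cannot compute the gradient")
    kid = scope.kids[-1]
    x_grad = 2 * kid.vars["x"] * out_grad  # d(x * x)/dx = 2x, read from the kid scope
    scope.drop_kids()  # safe now: the grad op has finished
    return x_grad
```

Running `conditional_block_forward(s, 3.0, False)` followed by `conditional_block_backward(s, 1.0)` yields a gradient of 6.0; passing `True` instead makes the backward step fail because the kid scope is already gone.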

- Fix how conditional_block_op assigns the gradients computed inside its sub-block to the corresponding gradient variables outside it.
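
As a rough illustration of what "assigning inside gradients to outside gradients" means, the sketch below copies gradients from a sub-block's scope (modeled as a dict) into the parent's gradient variables, pairing names by position. The helper name and the dict-based scopes are hypothetical, and this does not reproduce the specific bug fixed here. It copies rather than aliases because the kid scope is dropped later.

```python
import copy


def assign_inside_grads_to_outside(inner_vars, outer_vars, inside_names, outside_names):
    """Copy gradients computed in the sub-block scope to the parent's grad variables.

    Hypothetical helper: the names and dict-based scopes are illustrative only.
    """
    if len(inside_names) != len(outside_names):
        raise ValueError("inside/outside gradient lists must line up one-to-one")
    for inside, outside in zip(inside_names, outside_names):
        if inside not in inner_vars:
            continue  # no gradient was produced inside for this variable
        # Copy rather than alias: the kid scope holding inner_vars is dropped later.
        outer_vars[outside] = copy.deepcopy(inner_vars[inside])
```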

-

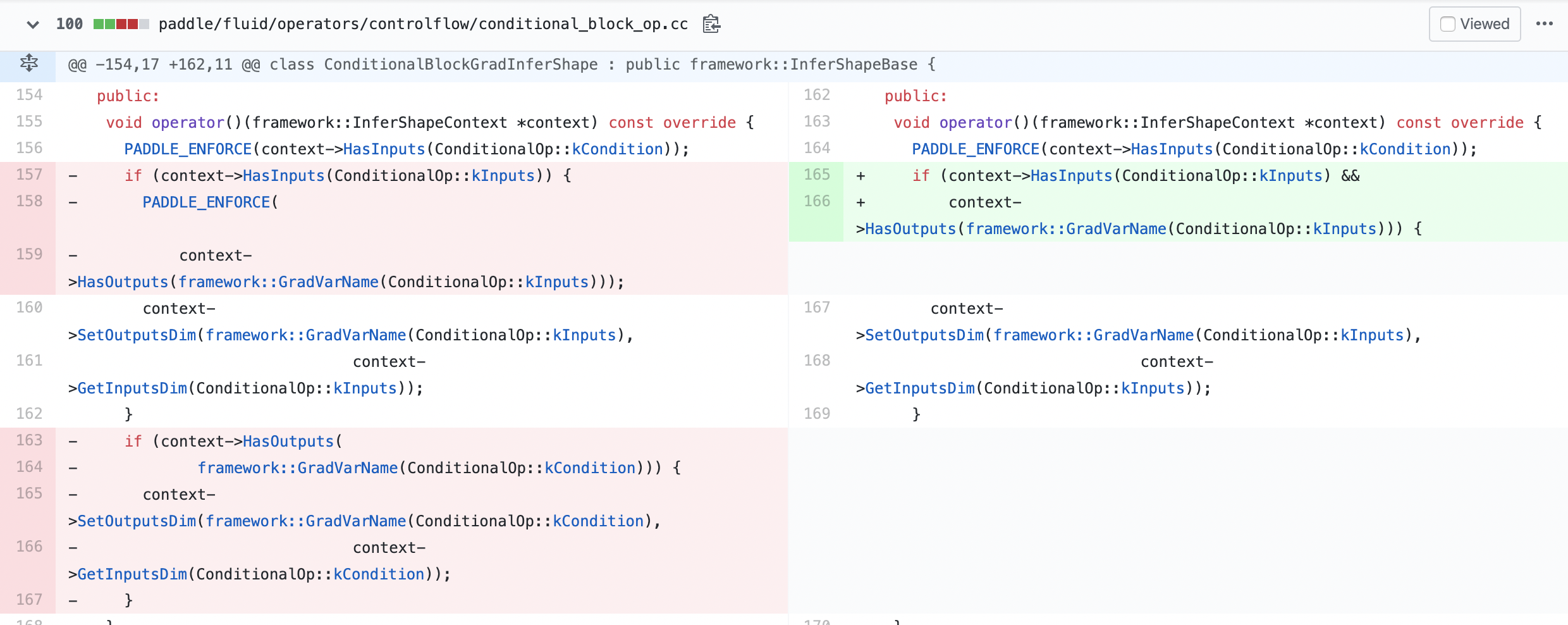

Fix a case that inputs may set stop_gradient and we shouldn't PADDLE_ENFORCE each input has GRAD when inferring shape
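
A toy version of the relaxed check, assuming a grad op's InferShape simply gives each input's GRAD the input's shape. The function name and arguments are hypothetical; the point is that an input without a GRAD variable (because of stop_gradient) is skipped instead of triggering an enforce-style error.

```python
def infer_grad_shapes(input_names, input_shapes, grad_vars_present):
    """Toy InferShape for a grad op (hypothetical): each input GRAD gets the input's shape.

    Inputs with stop_gradient set have no GRAD variable, so they are skipped
    instead of failing an enforce-style check.
    """
    grad_shapes = {}
    for name in input_names:
        grad_name = name + "@GRAD"
        if grad_name not in grad_vars_present:
            continue  # stop_gradient input: nothing to infer
        grad_shapes[grad_name] = input_shapes[name]
    return grad_shapes
```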

- For conditional_block_op, if Cond is also one of the Inputs, treat it as an input so that the GRAD of that input can be passed out.

-

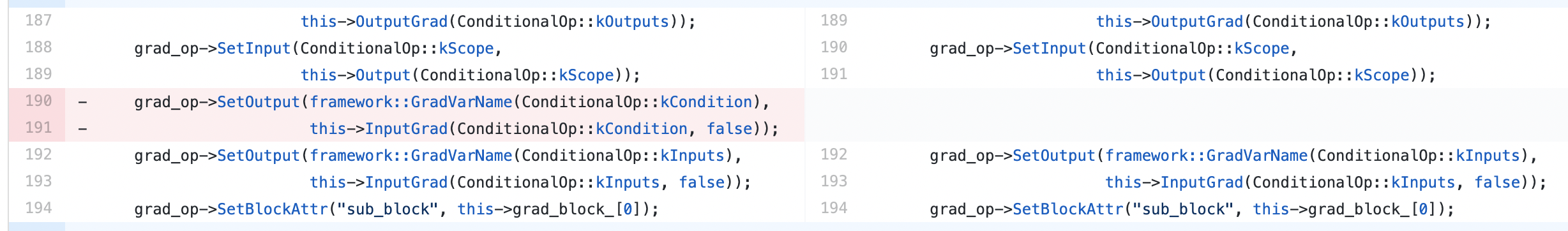

Delete the Output of grad of condition for conditional_block_op. Cond shouldn't have gradient.
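
The two conditional_block_op items above can be summarized in a small sketch of which gradient outputs the grad op declares. The helper and the slot-to-names dict are hypothetical (the `"@GRAD"` suffix mirrors the naming used in this PR): only the Input slot gets gradients, Cond never does, and a variable that is both Cond and an Input still receives its gradient through the Input slot.

```python
def conditional_block_grad_outputs(op, no_grad_set=frozenset()):
    """Toy: gradient outputs of a conditional_block grad op (hypothetical helper).

    `op` maps slot names to variable names, e.g. {"Input": [...], "Cond": [...]}.
    Gradients are produced only for the "Input" slot; no "Cond@GRAD" slot is
    created. A variable listed in both slots still gets a gradient via "Input".
    """
    input_grads = [name + "@GRAD" for name in op["Input"] if name not in no_grad_set]
    return {"Input@GRAD": input_grads}
```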

-

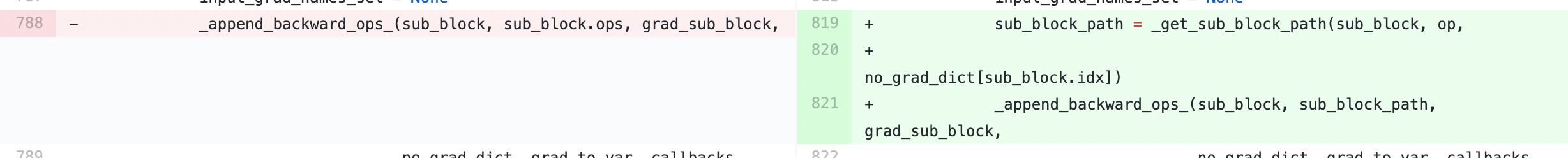

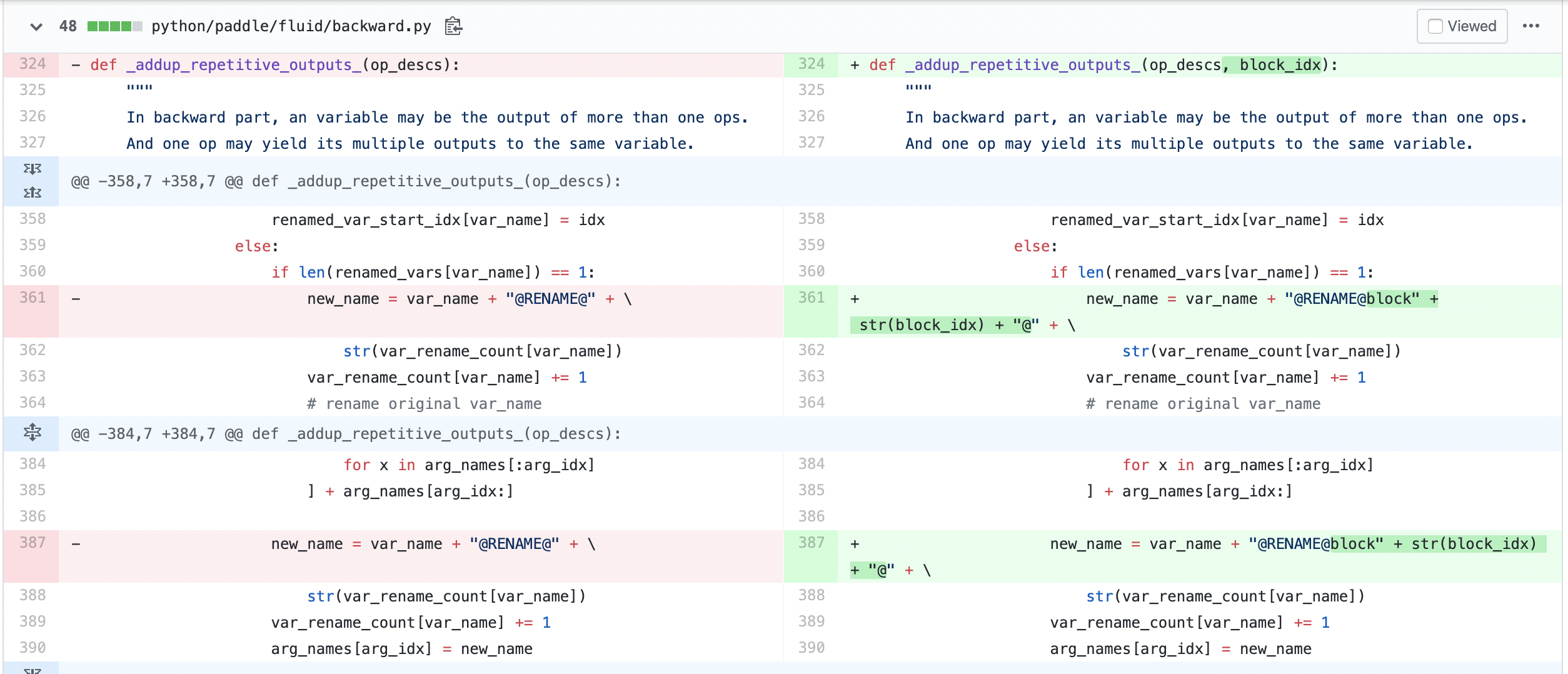

During appending backward for control flow, the old code didn't find path for sub-block. It was only done for global block and it can cause sub-block backward error.
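
A toy illustration of finding the op path recursively, so that sub-blocks are pruned the same way as the global block. Ops are plain dicts with hypothetical `"inputs"`, `"outputs"` and optional `"sub_block"` keys; this is not Paddle's `backward.py` implementation, only the idea: a forward sweep from the inputs, a backward sweep from the outputs, then recursion into each sub-block on the path.

```python
def find_op_path(ops, outputs, inputs=None):
    """Return the ops on a path from `inputs` to `outputs` (toy version).

    Each op is a dict with "inputs" and "outputs" name lists and an optional
    "sub_block" (a list of ops). Sub-blocks on the path are pruned recursively,
    not only the top-level (global) block.
    """
    # Forward sweep: mark ops that depend on `inputs` (all ops if inputs is None).
    if inputs is None:
        relevant = [True] * len(ops)
    else:
        reached = set(inputs)
        relevant = []
        for op in ops:
            hit = any(name in reached for name in op["inputs"])
            if hit:
                reached.update(op["outputs"])
            relevant.append(hit)

    # Backward sweep: keep only ops whose outputs feed the requested outputs.
    needed = set(outputs)
    path = []
    for op, rel in zip(reversed(ops), reversed(relevant)):
        if rel and any(name in needed for name in op["outputs"]):
            needed.update(op["inputs"])
            path.append(op)
    path.reverse()

    # Recurse into sub-blocks so their backward is built from a pruned path too.
    result = []
    for op in path:
        if "sub_block" in op:
            op = dict(op, sub_block=find_op_path(op["sub_block"], op["outputs"]))
        result.append(op)
    return result
```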

-

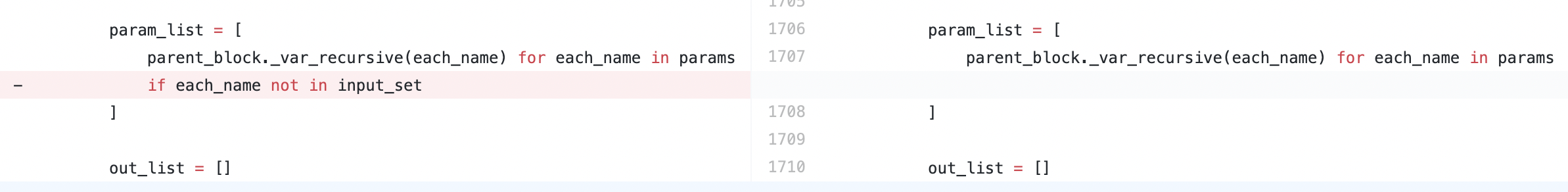

Use different @GRAD@RENAME in different block to avoid assigning GRAD values to parent GRAD. Otherwise child scope GRAD may be assigned to parent scope and causes gradient error. For example, at parent scope, var@GRAD = var@GRAD@RENAME@0 + var@GRAD@RENAME@1, where rename 0 and rename 1 come from conditional_block_op0,1. If conditional_block_op1 is not run, then the correct var@GRAD@RENAME@1 should be 0 but bug exists if the var@GRAD@RENAME@1 is assigned in conditional_block_op0, then var@GRAD@RENAME@1 is not 0 and it is wrong.
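
The example above can be simulated with a dict standing in for the parent scope. The naming scheme in `rename` (adding a block index) is a hypothetical stand-in for "different @GRAD@RENAME in different blocks", not necessarily the exact names this PR generates. With block-agnostic names, the child block's temporary `var@GRAD@RENAME@1` collides with the parent's slot for conditional_block_op1 and leaks into the sum.

```python
def rename(var, idx, block_idx, per_block):
    """Hypothetical naming scheme for partial gradients of `var`."""
    if per_block:
        return f"{var}@GRAD@RENAME@block{block_idx}@{idx}"
    return f"{var}@GRAD@RENAME@{idx}"


def parent_var_grad(per_block):
    parent_scope = {}
    parent_blk, child_blk = 0, 1
    # Partial gradients the parent block sums: one from each conditional_block_op.
    partials = [rename("var", i, parent_blk, per_block) for i in (0, 1)]

    # conditional_block_op0 runs and writes its contribution for slot 0.
    parent_scope[partials[0]] = 2.0
    # Its sub-block also creates its own temporary partial gradient with index 1,
    # which (as in the bug described above) ends up in the parent scope.
    parent_scope[rename("var", 1, child_blk, per_block)] = 5.0
    # conditional_block_op1 does NOT run, so slot 1 should contribute 0.

    # var@GRAD = RENAME@0 + RENAME@1, treating a missing partial as 0.
    return sum(parent_scope.get(name, 0.0) for name in partials)
```

`parent_var_grad(per_block=False)` returns 7.0 (wrong), while `parent_var_grad(per_block=True)` returns the expected 2.0.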

- sum_op.cu has a bug when some input tensors are not initialized. For example, if in_0.numel() == 0 and in_1 is initialized with values, none of the three cases runs.
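
One way to make a sum robust to uninitialized inputs is to filter them out before doing anything else, rather than letting the first input decide what happens. The sketch below is a plain-Python toy, not the actual CUDA kernel fix: each "tensor" is a list of floats, and an empty list stands in for a tensor with `numel() == 0`.

```python
def sum_inputs(inputs):
    """Toy sum op: element-wise sum that skips uninitialized (numel() == 0) inputs.

    Each input is a list of floats; an empty list stands in for an
    uninitialized tensor. Returns an empty list if every input is uninitialized.
    """
    initialized = [t for t in inputs if len(t) > 0]
    if not initialized:
        return []
    size = len(initialized[0])
    if any(len(t) != size for t in initialized):
        raise ValueError("initialized inputs must have the same number of elements")
    out = [0.0] * size
    for t in initialized:
        for i, v in enumerate(t):
            out[i] += v
    return out
```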

With these fixes, nested control flow can finally run.