Created by: yihuaxu

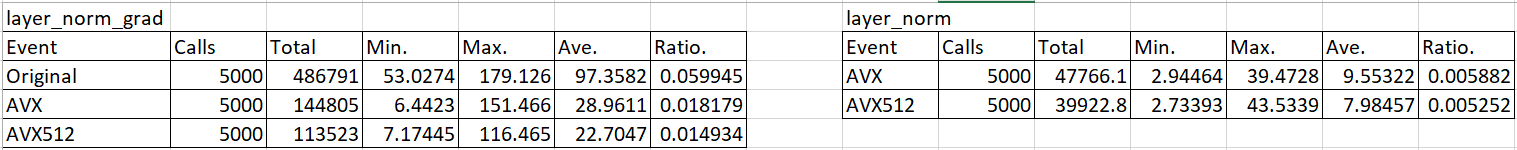

Based on the training profile of the BERT model, this change uses SIMD intrinsics to optimize the layer norm grad operator and improve its performance. With the AVX2 instruction set, it gives a speedup of about 3x on the BERT training model.
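The kernel from this change is not reproduced here. As a rough illustration of the approach, the sketch below vectorizes the input-gradient computation of layer norm for a single row with 256-bit intrinsics and falls back to scalar code when the compiler is not targeting AVX. The function names `LayerNormGradRowScalar` and `LayerNormGradRowSimd` are hypothetical, the formula is the standard layer norm input gradient rather than code taken from Paddle, and only single-precision AVX operations are used (these are available on any AVX2-capable CPU).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#if defined(__AVX__)
#include <immintrin.h>
#endif

// Hypothetical helper names; not taken from the Paddle source.
// Scalar reference for one row of n features:
//   g[j]     = d_y[j] * scale[j]
//   x_hat[j] = (x[j] - mean) / sqrt(var + eps)
//   d_x[j]   = inv_std * (g[j] - mean(g) - x_hat[j] * mean(g * x_hat))
void LayerNormGradRowScalar(const float* x, const float* d_y,
                            const float* scale, float mean, float var,
                            float eps, std::size_t n, float* d_x) {
  const float inv_std = 1.0f / std::sqrt(var + eps);
  float sum_g = 0.0f, sum_gx = 0.0f;
  for (std::size_t j = 0; j < n; ++j) {
    const float g = d_y[j] * scale[j];
    sum_g += g;
    sum_gx += g * (x[j] - mean) * inv_std;
  }
  const float mean_g = sum_g / static_cast<float>(n);
  const float mean_gx = sum_gx / static_cast<float>(n);
  for (std::size_t j = 0; j < n; ++j) {
    const float x_hat = (x[j] - mean) * inv_std;
    d_x[j] = inv_std * (d_y[j] * scale[j] - mean_g - x_hat * mean_gx);
  }
}

// Same computation, 8 floats per iteration when AVX is enabled at compile time.
void LayerNormGradRowSimd(const float* x, const float* d_y, const float* scale,
                          float mean, float var, float eps, std::size_t n,
                          float* d_x) {
#if defined(__AVX__)
  const float inv_std = 1.0f / std::sqrt(var + eps);
  const std::size_t m = n - n % 8;  // prefix handled 8 floats at a time
  const __m256 v_mean = _mm256_set1_ps(mean);
  const __m256 v_inv = _mm256_set1_ps(inv_std);
  __m256 v_sum_g = _mm256_setzero_ps();
  __m256 v_sum_gx = _mm256_setzero_ps();
  // Pass 1: accumulate sum(g) and sum(g * x_hat) in vector registers.
  for (std::size_t j = 0; j < m; j += 8) {
    const __m256 g =
        _mm256_mul_ps(_mm256_loadu_ps(d_y + j), _mm256_loadu_ps(scale + j));
    const __m256 x_hat =
        _mm256_mul_ps(_mm256_sub_ps(_mm256_loadu_ps(x + j), v_mean), v_inv);
    v_sum_g = _mm256_add_ps(v_sum_g, g);
    v_sum_gx = _mm256_add_ps(v_sum_gx, _mm256_mul_ps(g, x_hat));
  }
  // Horizontal reduction of the 8 lanes, then the scalar tail.
  float lanes_g[8], lanes_gx[8];
  _mm256_storeu_ps(lanes_g, v_sum_g);
  _mm256_storeu_ps(lanes_gx, v_sum_gx);
  float sum_g = 0.0f, sum_gx = 0.0f;
  for (int k = 0; k < 8; ++k) {
    sum_g += lanes_g[k];
    sum_gx += lanes_gx[k];
  }
  for (std::size_t j = m; j < n; ++j) {
    const float g = d_y[j] * scale[j];
    sum_g += g;
    sum_gx += g * (x[j] - mean) * inv_std;
  }
  const float mean_g = sum_g / static_cast<float>(n);
  const float mean_gx = sum_gx / static_cast<float>(n);
  const __m256 v_mean_g = _mm256_set1_ps(mean_g);
  const __m256 v_mean_gx = _mm256_set1_ps(mean_gx);
  // Pass 2: write d_x, vector prefix first, then the scalar tail.
  for (std::size_t j = 0; j < m; j += 8) {
    const __m256 g =
        _mm256_mul_ps(_mm256_loadu_ps(d_y + j), _mm256_loadu_ps(scale + j));
    const __m256 x_hat =
        _mm256_mul_ps(_mm256_sub_ps(_mm256_loadu_ps(x + j), v_mean), v_inv);
    const __m256 r = _mm256_sub_ps(_mm256_sub_ps(g, v_mean_g),
                                   _mm256_mul_ps(x_hat, v_mean_gx));
    _mm256_storeu_ps(d_x + j, _mm256_mul_ps(v_inv, r));
  }
  for (std::size_t j = m; j < n; ++j) {
    const float x_hat = (x[j] - mean) * inv_std;
    d_x[j] = inv_std * (d_y[j] * scale[j] - mean_g - x_hat * mean_gx);
  }
#else
  // No AVX at compile time: use the scalar reference.
  LayerNormGradRowScalar(x, d_y, scale, mean, var, eps, n, d_x);
#endif
}
```

Because the vector path sums in a different order than the scalar loop, the two results can differ in the last few bits, so any comparison between them should use a small tolerance rather than exact equality.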

Platform: Intel(R) Xeon(R) Gold 6148 CPU @ 2.40GHz

Model Path: benchmark/NeuralMachineTranslation/BERT/fluid/train/chinese_L-12_H-768_A-12

Batch Size: 32

CPU_NUM: 20

OMP_NUM_THREADS: 4

Command: CPU_NUM=20 OMP_NUM_THREADS=4 python -u run_classifier.py --task_name XNLI --use_cuda false --do_train true --do_val true --do_test true --batch_size 32 --in_tokens False --init_pretraining_params ../../chinese_L-12_H-768_A-12/params --data_dir ../../data --vocab_path ../../chinese_L-12_H-768_A-12/vocab.txt --checkpoints ../../save --save_steps 1000 --weight_decay 0.01 --warmup_proportion 0.1 --validation_steps 1000 --epoch 2 --max_seq_len 128 --bert_config_path ../../chinese_L-12_H-768_A-12/bert_config.json --learning_rate 5e-5 --skip_steps 10 --random_seed 1

Data Source: benchmark/NeuralMachineTranslation/BERT/fluid/train/data/XNLI-MT-1.0.zip, benchmark/NeuralMachineTranslation/BERT/fluid/train/data/XNLI-1.0.zip

The following is a comparison of the different scenarios.

To enable the AVX512 code path, apply the patch below.

    diff --git a/cmake/configure.cmake b/cmake/configure.cmake
    index 5f7b4a4..d2ee77b 100644
    --- a/cmake/configure.cmake
    +++ b/cmake/configure.cmake
    @@ -28,7 +28,10 @@ if(NOT WITH_PROFILER)
         add_definitions(-DPADDLE_DISABLE_PROFILER)
     endif(NOT WITH_PROFILER)
     
    -if(WITH_AVX AND AVX_FOUND)
    +if(WITH_AVX AND AVX512F_FOUND)
    +    set(SIMD_FLAG ${AVX512F_FLAG})
    +    add_definitions(-DPADDLE_WITH_AVX)
    +elseif(WITH_AVX AND AVX_FOUND)
         set(SIMD_FLAG ${AVX_FLAG})
         add_definitions(-DPADDLE_WITH_AVX)
     elseif(SSE3_FOUND)
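
The patch only changes which SIMD flag the build passes to the compiler. The sketch below shows one way C++ code could react to that flag at compile time, using the `__AVX512F__`, `__AVX__`, and `__SSE3__` macros that GCC and Clang predefine for the targeted instruction set. The helper names `SimdFloatLanes` and `SimdLevelName` are hypothetical and are not part of Paddle; how the actual operator selects its code path is an assumption here.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical helper: number of float lanes in the widest vector register
// that the compiler has been told it may use.
constexpr std::size_t SimdFloatLanes() {
#if defined(__AVX512F__)
  return 16;  // 512-bit registers
#elif defined(__AVX__)
  return 8;   // 256-bit registers
#elif defined(__SSE3__)
  return 4;   // 128-bit registers
#else
  return 1;   // scalar fallback
#endif
}

// Hypothetical helper: readable name for the selected level, e.g. for logging.
inline std::string SimdLevelName() {
  switch (SimdFloatLanes()) {
    case 16: return "avx512f";
    case 8:  return "avx";
    case 4:  return "sse3";
    default: return "scalar";
  }
}
```

With the patch applied on a machine where `AVX512F_FOUND` is set, a build using `${AVX512F_FLAG}` would be expected to take the first branch; without it, the same source falls through to the AVX or SSE3 branch.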