Created by: yihuaxu

Use AVX registers to hold intermediate results, avoiding the latency of frequent memory reads and writes.

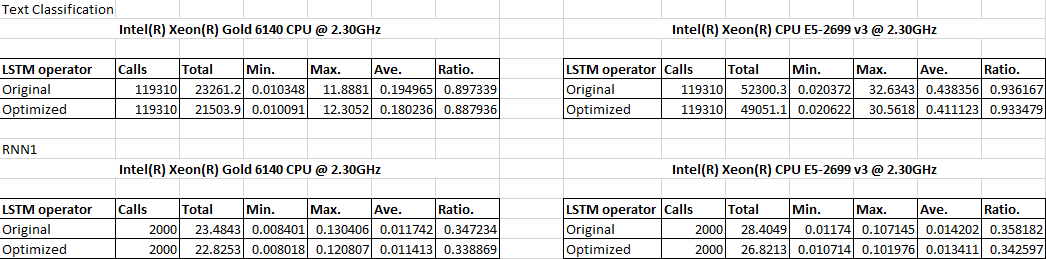
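The sketch below illustrates the general idea. It is not the kernel changed in this PR. It assumes x86 with GCC or Clang, and it uses a GRU-style update `out = u*h + (1-u)*c` as a hypothetical stand-in. The function names (`gru_update_two_pass`, `gru_update_avx`, `fused_matches_reference`) are illustrative only. The baseline writes the intermediate `u*h` to a temporary buffer and reads it back. The AVX version keeps every intermediate in `__m256` registers and stores only the final result.

```cpp
#include <immintrin.h>  // AVX intrinsics (x86 only)
#include <cassert>      // for callers asserting on fused_matches_reference
#include <cmath>
#include <cstddef>
#include <vector>

// Baseline: the intermediate u*h makes a round trip through the tmp buffer.
void gru_update_two_pass(const float* u, const float* h, const float* c,
                         float* tmp, float* out, std::size_t n) {
  for (std::size_t i = 0; i < n; ++i) tmp[i] = u[i] * h[i];  // write intermediate to memory
  for (std::size_t i = 0; i < n; ++i)
    out[i] = tmp[i] + (1.0f - u[i]) * c[i];                  // read it back
}

// Register-resident version: intermediates stay in __m256 registers and only
// the final result is stored. target("avx") lets GCC/Clang emit AVX code for
// this one function without a global -mavx flag.
__attribute__((target("avx")))
void gru_update_avx(const float* u, const float* h, const float* c,
                    float* out, std::size_t n) {
  const __m256 one = _mm256_set1_ps(1.0f);
  std::size_t i = 0;
  for (; i + 8 <= n; i += 8) {
    const __m256 vu = _mm256_loadu_ps(u + i);
    const __m256 uh = _mm256_mul_ps(vu, _mm256_loadu_ps(h + i));  // kept in a register
    const __m256 rest =
        _mm256_mul_ps(_mm256_sub_ps(one, vu), _mm256_loadu_ps(c + i));
    _mm256_storeu_ps(out + i, _mm256_add_ps(uh, rest));  // one store per 8 floats
  }
  for (; i < n; ++i) out[i] = u[i] * h[i] + (1.0f - u[i]) * c[i];  // scalar tail
}

// Runs both versions on synthetic data and reports whether they agree.
bool fused_matches_reference(std::size_t n) {
  std::vector<float> u(n), h(n), c(n), tmp(n), ref(n), out(n);
  for (std::size_t i = 0; i < n; ++i) {
    u[i] = 0.01f * static_cast<float>(i % 100);
    h[i] = 0.5f + 0.1f * static_cast<float>(i % 7);
    c[i] = -1.0f + 0.2f * static_cast<float>(i % 11);
  }
  gru_update_two_pass(u.data(), h.data(), c.data(), tmp.data(), ref.data(), n);
  if (!__builtin_cpu_supports("avx")) return true;  // cannot run the AVX path here
  gru_update_avx(u.data(), h.data(), c.data(), out.data(), n);
  for (std::size_t i = 0; i < n; ++i)
    if (std::fabs(out[i] - ref[i]) > 1e-5f) return false;
  return true;
}
```

The comparison uses a small tolerance rather than exact equality. A compiler may contract the scalar expressions into FMA instructions when targeting newer CPUs, which can change the last bits of the result. Choosing an `n` that is not a multiple of 8 (for example 19) exercises both the vector loop and the scalar tail.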

- Platform: Intel(R) Xeon(R) Gold 6140 CPU @ 2.30GHz / Intel(R) Xeon(R) CPU E5-2699 v3 @ 2.30GHz
- Model path:
  - Text Classification: `third_party/inference_demo/text_classification/model`
  - RNN1: `third_party/inference_demo/rnn1/model`
- Batch size: 1
- Frame size: Text Classification 128 / RNN1 15
- Command:
  - Text Classification: `cd build && ./paddle/fluid/inference/tests/api/test_analyzer_text_classification --infer_model=third_party/inference_demo/text_classification/model --infer_data=third_party/inference_demo/text_classification/data.txt --paddle_num_threads=1 --repeat=1 --batch_size=1 --test_all_data --num_threads=1`
  - RNN1: `cd build && ./paddle/fluid/inference/tests/api/test_analyzer_rnn1 --infer_model=third_party/inference_demo/rnn1/model --infer_data=third_party/inference_demo/rnn1/data.txt --paddle_num_threads=1 --repeat=1000 --batch_size=1 --test_all_data --num_threads=1 --profiler=1`
- Data source:
  - Text Classification: `build/third_party/inference_demo/text_classification/data.txt`
  - RNN1: `build/third_party/inference_demo/rnn1/data.txt`

The following compares performance across these scenarios.