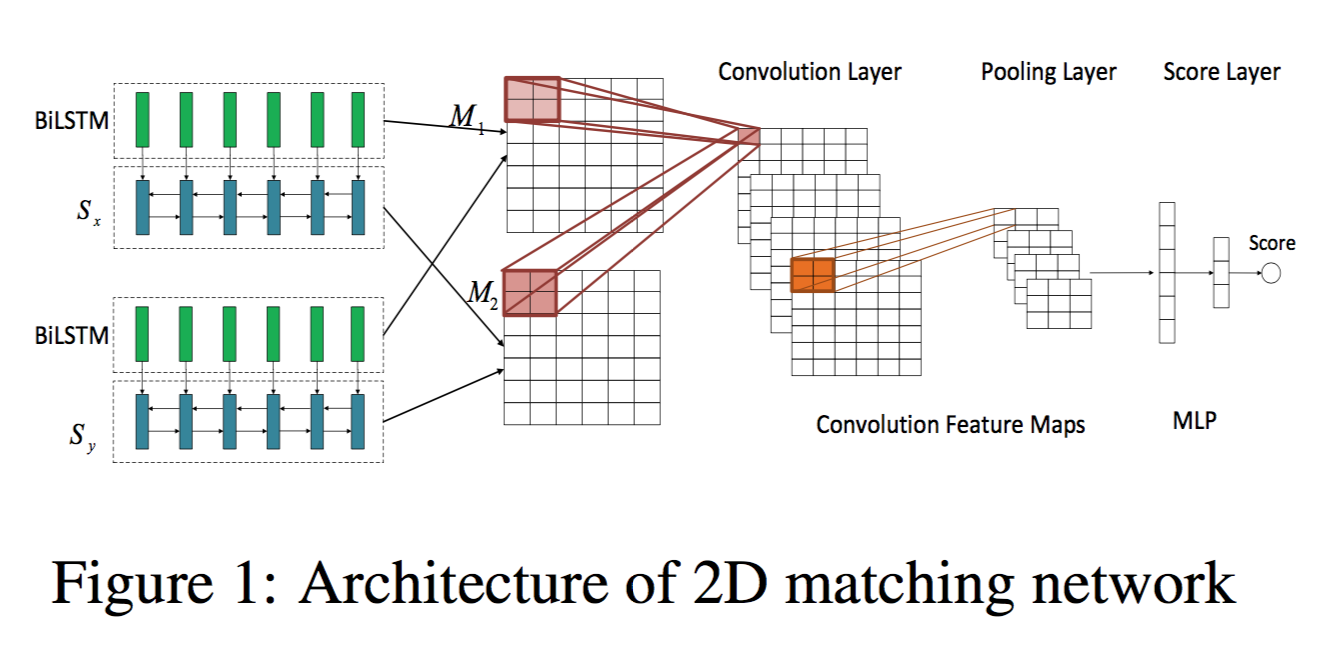

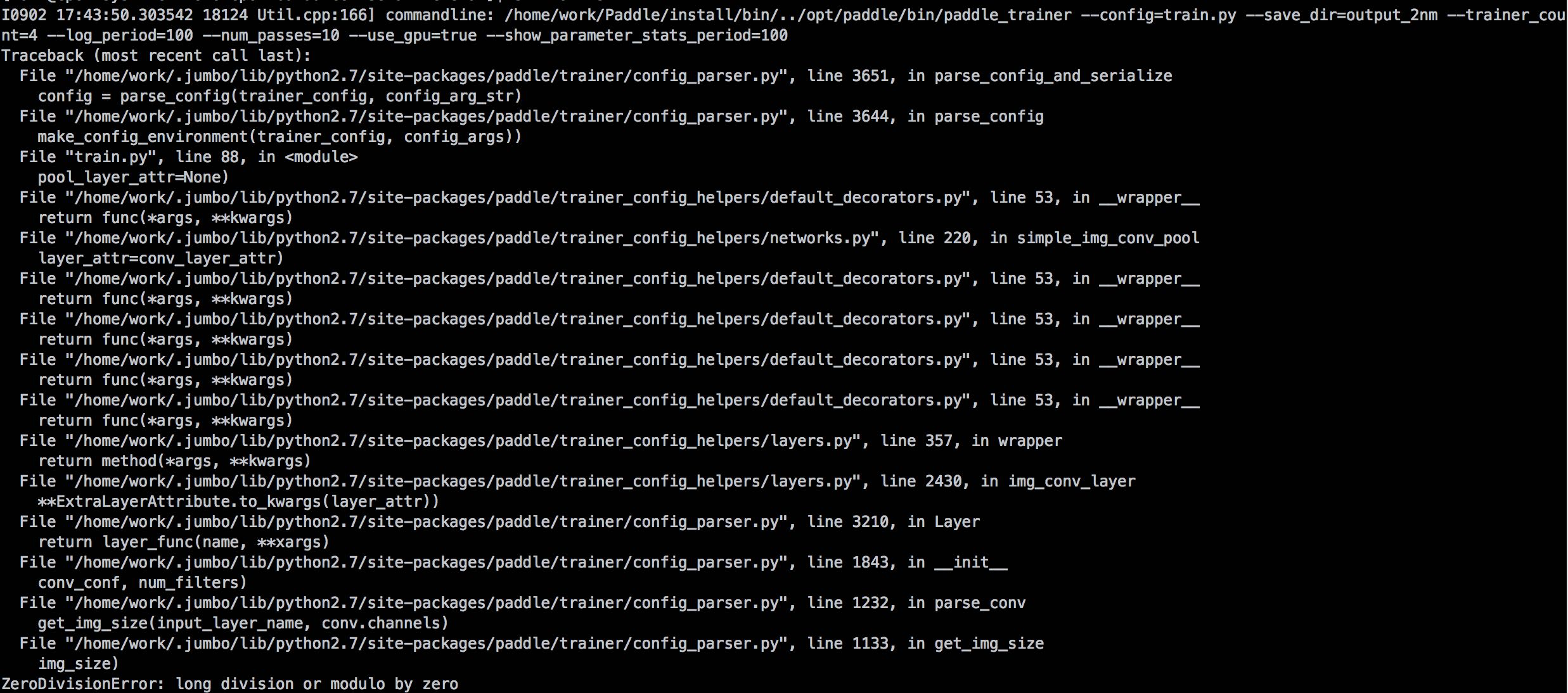

Using a conv2D network for question-answer semantic matching; the network fails with an error at runtime

Created by: stonyhu

The network configuration script is as follows:

import numpy as np

from paddle.trainer_config_helpers import *

emb_file = './embedding-vector.200d.txt'

dict_file = './data/dict.txt'

word_dict = dict()

word_idx = 0

with open(dict_file, 'r') as f:
    for i, line in enumerate(f):
        parts = line.strip().split('\t')
        word_dict[parts[0]] = word_idx
        word_idx += 1

word_dict["<unk>"] = len(word_dict)

word_dim = len(word_dict)

is_predict = get_config_arg('is_predict', bool, False)

trn = 'data/train.list' if not is_predict else None

tst = 'data/valid.list' if not is_predict else 'data/pred.list'

process = 'process' if not is_predict else 'process_predict'

define_py_data_sources2(
    train_list=trn,
    test_list=tst,
    module="dataprovider.py",
    obj=process,
    args={"dict_file": dict_file})

batch_size = 512 if not is_predict else 1

settings(
    batch_size=batch_size,
    learning_rate=1e-4,
    learning_method=AdamOptimizer(),
    regularization=L2Regularization(8e-4),
    gradient_clipping_threshold=25)

def load_parameter(filename=emb_file, height=word_dim, width=200):
    return np.loadtxt(open(filename), dtype=np.float32, delimiter=" ")
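Note that `load_parameter` never uses its `height` and `width` arguments, so a mismatch between the embedding file and the dictionary size (which includes the extra `<unk>` entry) would not be caught when the file is loaded. Below is a minimal sketch of a shape check in plain NumPy. The helper name `load_embedding_checked` and the in-memory sample data are hypothetical and not part of the original script; whether the real embedding file has a row for `<unk>` is not stated in the post.

```python
import io
import numpy as np

def load_embedding_checked(source, height, width):
    """Load a space-delimited embedding table and verify its shape."""
    # ndmin=2 keeps a single-row file two-dimensional.
    table = np.loadtxt(source, dtype=np.float32, delimiter=" ", ndmin=2)
    if table.shape != (height, width):
        raise ValueError(
            "embedding shape %s does not match expected (%d, %d)"
            % (table.shape, height, width))
    return table

# Hypothetical 3-word vocabulary with 4-dimensional vectors.
sample = io.StringIO("0.1 0.2 0.3 0.4\n0.5 0.6 0.7 0.8\n0.9 1.0 1.1 1.2\n")
emb = load_embedding_checked(sample, height=3, width=4)
print(emb.shape)
```

In the script above, the equivalent call would pass `height=word_dim` and `width=200`.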

emb_param = ParameterAttribute(
    name="emb_param",
    initial_std=0.,
    is_static=False,
    initializer=load_parameter)

q_word = data_layer(name="question_word", size=word_dim)

qw_emb = embedding_layer(input=q_word, size=200, param_attr=emb_param)

r_word = data_layer(name="reply_word", size=word_dim)

rw_emb = embedding_layer(input=r_word, size=200, param_attr=emb_param)

word_sim_layer = cos_sim(a=qw_emb, b=trans_layer(input=rw_emb))

q_bilstm = bidirectional_lstm(input=qw_emb, size=128)

r_bilstm = bidirectional_lstm(input=rw_emb, size=128)

fc = fc_layer(
    input=q_bilstm,
    size=256,
    act=LinearActivation(),
    layer_attr=None,
    param_attr=None,
    bias_attr=None)

seq_sim_layer = cos_sim(a=fc, b=trans_layer(input=r_bilstm))

conv_input_layer = addto_layer(input=[word_sim_layer, seq_sim_layer],
                               act=LinearActivation())
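The layers up to this point appear intended to build a word-level and a sequence-level similarity matrix between question and reply, and to feed both to the convolution as two channels. Below is a sketch of that intended computation in plain NumPy. The sequence lengths (5 and 7), the random inputs and the function name `cosine_matrix` are illustrative assumptions. To my understanding, `cos_sim` in `paddle.trainer_config_helpers` computes a similarity per sample rather than a full pairwise matrix over sequence positions, and `addto_layer` sums its inputs element-wise, so the result may not carry the two channels that `num_channel=2` expects. This is worth checking against the actual error message.

```python
import numpy as np

def cosine_matrix(a, b, eps=1e-8):
    """Pairwise cosine similarity between rows of a (m, d) and rows of b (n, d)."""
    a_norm = a / (np.linalg.norm(a, axis=1, keepdims=True) + eps)
    b_norm = b / (np.linalg.norm(b, axis=1, keepdims=True) + eps)
    return a_norm @ b_norm.T  # shape (m, n)

rng = np.random.default_rng(0)
q_words = rng.standard_normal((5, 200))  # 5 question words, 200-d embeddings
r_words = rng.standard_normal((7, 200))  # 7 reply words
q_seq = rng.standard_normal((5, 256))    # 5 question positions, 256-d features
r_seq = rng.standard_normal((7, 256))    # 7 reply positions

word_sim = cosine_matrix(q_words, r_words)
seq_sim = cosine_matrix(q_seq, r_seq)

stacked = np.stack([word_sim, seq_sim], axis=0)  # two channels
summed = word_sim + seq_sim                      # element-wise sum, one channel
print(stacked.shape, summed.shape)
```

Stacking keeps the two similarity maps as separate channels, while summing merges them into a single map of the same height and width.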

conv_pool_layer1 = simple_img_conv_pool(
    input=conv_input_layer,
    num_channel=2,
    filter_size=3,
    num_filters=8,
    pool_size=3,
    name=None,
    pool_type=None,
    act=ReluActivation(),
    groups=1,
    conv_stride=1,
    conv_padding=0,
    bias_attr=None,
    param_attr=None,
    shared_bias=True,
    conv_layer_attr=None,
    pool_stride=1,
    pool_padding=0,
    pool_layer_attr=None)

conv_pool_layer2 = simple_img_conv_pool(
    input=conv_pool_layer1,
    num_channel=8,
    filter_size=3,
    num_filters=8,
    pool_size=3,
    name=None,
    pool_type=None,
    act=ReluActivation(),
    groups=1,
    conv_stride=1,
    conv_padding=0,
    bias_attr=None,
    param_attr=None,
    shared_bias=True,
    conv_layer_attr=None,
    pool_stride=1,
    pool_padding=0,
    pool_layer_attr=None)

conv_pool_layer3 = simple_img_conv_pool(
    input=conv_pool_layer2,
    num_channel=8,
    filter_size=3,
    num_filters=8,
    pool_size=3,
    name=None,
    pool_type=None,
    act=ReluActivation(),
    groups=1,
    conv_stride=1,
    conv_padding=0,
    bias_attr=None,
    param_attr=None,
    shared_bias=True,
    conv_layer_attr=None,
    pool_stride=1,
    pool_padding=0,
    pool_layer_attr=None)

fc_output1 = fc_layer(input=conv_pool_layer3, size=400)

fc_output2 = fc_layer(input=fc_output1, size=50)

output = fc_layer(input=fc_output2, size=2, act=SoftmaxActivation())

if is_predict:
    maxid = maxid_layer(output)
    outputs([maxid, output])
else:
    label = data_layer(name="label", size=2)
    cls = classification_cost(input=output, label=label)
    outputs(cls)
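One thing that can be checked independently of the framework is whether the similarity map is large enough for the three `simple_img_conv_pool` stages. With `filter_size=3`, `pool_size=3`, stride 1 and padding 0, each stage shrinks every side by 4, so three stages need an input of at least 13 per side. Below is a minimal sketch of that arithmetic, assuming the usual output-size formula `(in + 2 * padding - window) // stride + 1`; the function names are hypothetical.

```python
def out_size(in_size, window, stride=1, padding=0):
    """Output length of a convolution or pooling window along one axis."""
    return (in_size + 2 * padding - window) // stride + 1

def conv_pool_stack(in_size, stages=3, filter_size=3, pool_size=3):
    """Side length after `stages` rounds of conv followed by pooling."""
    size = in_size
    for _ in range(stages):
        size = out_size(size, filter_size)  # convolution, stride 1, no padding
        size = out_size(size, pool_size)    # pooling, stride 1, no padding
    return size

for side in (12, 13, 20):
    print(side, conv_pool_stack(side))
```

A side length of 12 or less leaves nothing for the last pooling window, which matters here because question and reply lengths vary from sample to sample.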