
Problem using layers.lstm_unit

Created by: ARDUJS

Environment

- paddle: 1.7.2

- python: 3.7.5

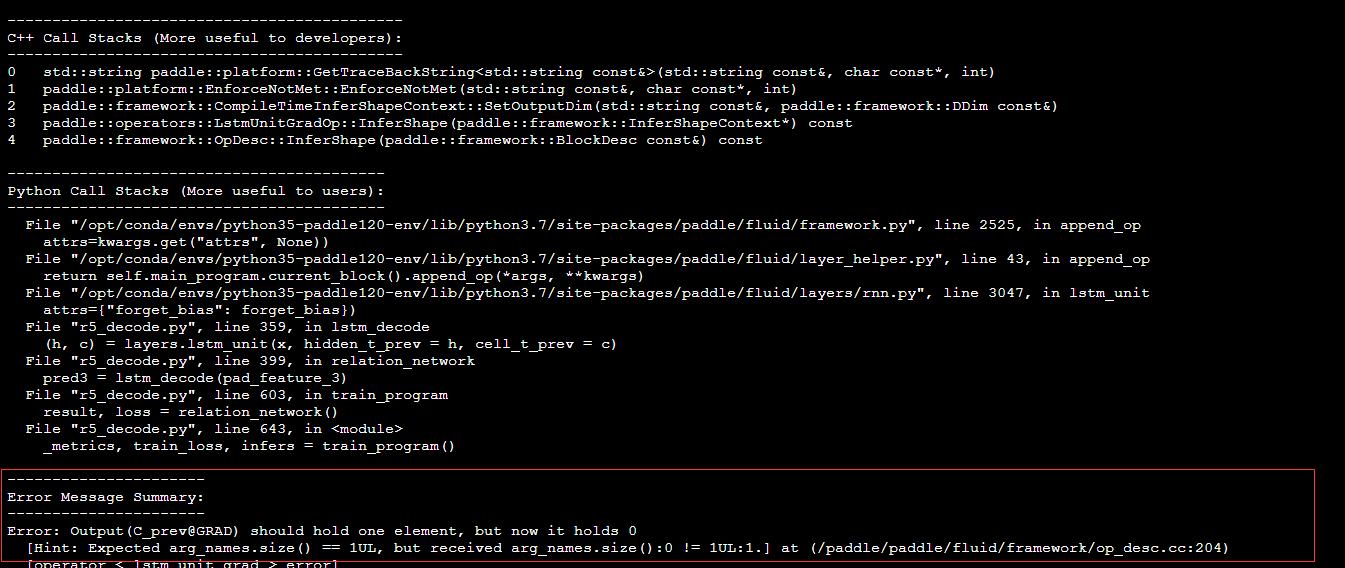

报错截图

Code where the error occurs:

```python
from paddle.fluid import layers

# pad_feature.shape: batch_size * 256 * 128
# char_size and class_num are defined elsewhere in the script
def lstm_decode(pad_feature):
    print("pad_feature", pad_feature.shape)
    max_sequence_len = pad_feature.shape[1]
    batch_size = pad_feature.shape[0]
    # w_param_attrs = fluid.ParamAttr(name="label_embedding", learning_rate=0.95, trainable=True)
    # b = np.full((batch_size, 1), class_num)
    # t = fluid.Tensor()
    # b = t.set(b, fluid.CPUPlace()).astype('int64')
    ans = []
    # Initial decoder input T, hidden state h and cell state c, all zeros
    T = layers.fill_constant([batch_size, 128], 'float32', 0.0)
    h = layers.fill_constant([batch_size, 128], 'float32', 0.0)
    c = layers.fill_constant([batch_size, 128], 'float32', 0.0)
    # layers.Print(x)
    layers.Print(h)
    layers.Print(c)
    for i in range(max_sequence_len):
        x = pad_feature[:, i, :]  # representation of the current token, 32 x 128
        x = layers.concat([x, T], axis=-1)
        (h, c) = layers.lstm_unit(x, hidden_t_prev=h, cell_t_prev=c)
        T = layers.fc(h, size=char_size)
        y = layers.fc(T, size=class_num)
        y = layers.unsqueeze(input=y, axes=[1])
        ans.append(y)
        # print("Y", y)
    ans = layers.concat(ans, 1)  # 32 * max_len * 108
    layers.Print(ans)
    # print(ans.shape)
    return ans
```

Could anyone help? What is the likely cause of this error?
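For reference, below is a minimal NumPy sketch of what a single lstm_unit step computes, written from the equations in the fluid.layers.lstm_unit documentation. It is not Paddle code: the function name lstm_unit_step, the explicit weight/bias arrays, and the gate order (input, forget, output, candidate) are assumptions for illustration. What it shows is the shape contract: x_t is concatenated with hidden_t_prev, one projection produces 4 * D gate values, and hidden_t_prev and cell_t_prev must share the same second dimension D.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_unit_step(x_t, h_prev, c_prev, weight, bias, forget_bias=0.0):
    """One LSTM step in the style of fluid.layers.lstm_unit (illustrative sketch).

    x_t:    (batch, input_dim)
    h_prev: (batch, D)
    c_prev: (batch, D); D is read from the cell state, so h_prev must match it
    weight: (input_dim + D, 4 * D), hypothetical projection weight
    bias:   (4 * D,), hypothetical projection bias
    """
    batch, hidden = c_prev.shape
    if h_prev.shape != (batch, hidden):
        raise ValueError(f"h_prev {h_prev.shape} must match c_prev {c_prev.shape}")
    if x_t.shape[0] != batch:
        raise ValueError("x_t and the states must share the batch dimension")
    # Concatenate input and previous hidden state, then project to 4 * D gate values.
    gates = np.concatenate([x_t, h_prev], axis=1) @ weight + bias
    # Assumed gate order: input, forget, output, candidate.
    i, f, o, g = np.split(gates, 4, axis=1)
    c_t = sigmoid(f + forget_bias) * c_prev + sigmoid(i) * np.tanh(g)
    h_t = sigmoid(o) * np.tanh(c_t)
    return h_t, c_t


# First-step shapes from the question: 128-d token + 128-d T, hidden size 128.
batch, feat, hidden = 32, 256, 128
rng = np.random.default_rng(0)
x_t = rng.standard_normal((batch, feat))
h0 = np.zeros((batch, hidden))
c0 = np.zeros((batch, hidden))
W = rng.standard_normal((feat + hidden, 4 * hidden)) * 0.01
b = np.zeros(4 * hidden)
h1, c1 = lstm_unit_step(x_t, h0, c0, W, b)
print(h1.shape, c1.shape)  # (32, 128) (32, 128)
```

One thing worth checking in the code above, as an assumption rather than a confirmed diagnosis: if pad_feature comes from a data layer with a variable batch dimension, pad_feature.shape[0] is -1 at graph-construction time, so fill_constant receives the shape [-1, 128]. In the fluid 1.x API, batch-dependent zero states are usually created with layers.fill_constant_batch_size_like(input=pad_feature, shape=[-1, 128], dtype='float32', value=0.0) instead. Whether this matches the error in the screenshot cannot be confirmed from the text alone.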