The `lstm` API from the official docs: can't get the correct output when using a BiLSTM?

Created by: wu8320175

Version and environment info: 1) PaddlePaddle version: 1.8, static graph. LSTM documentation: https://www.paddlepaddle.org.cn/documentation/docs/zh/api_cn/layers_cn/lstm_cn.html#lstm

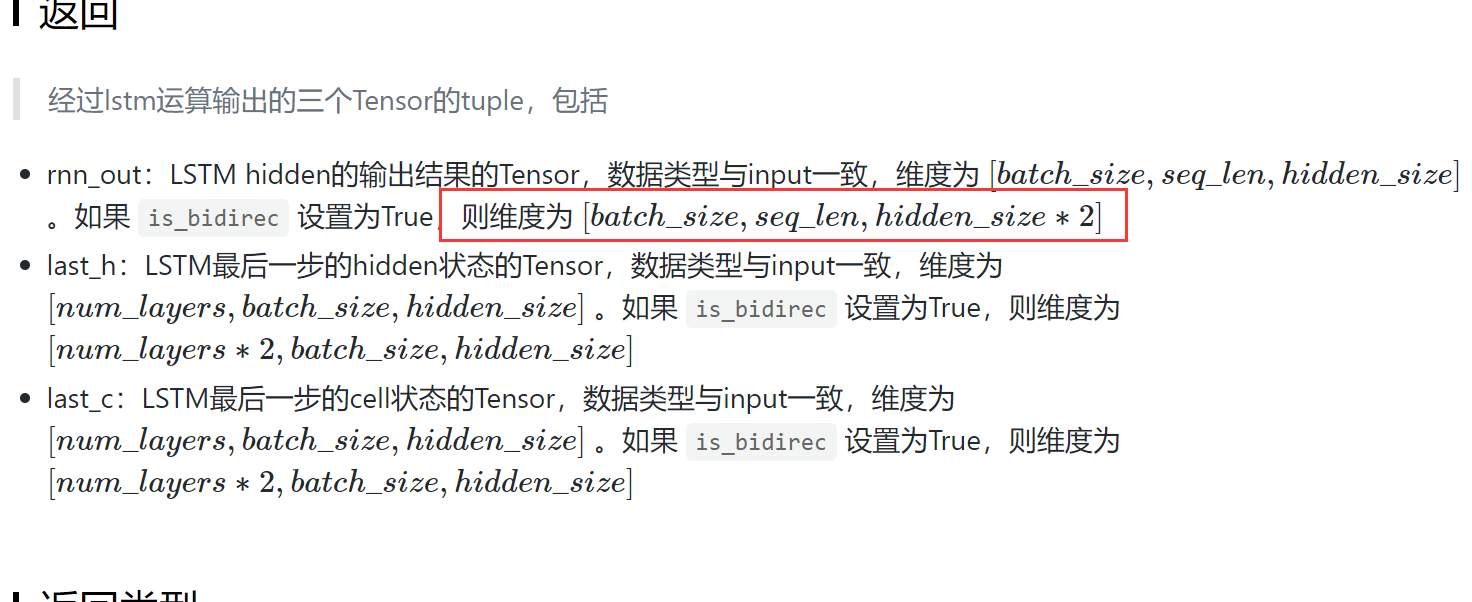

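The documentation says that, once the parameters are configured accordingly, the output should have size 2*hidden_size. Why don't I get the correct output with the configuration below?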
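To make the expected result concrete, the shape rule described above can be written as a small pure-Python helper. This is only a sketch: `expected_lstm_shapes` is a hypothetical name (not part of Paddle), and it assumes the batch-major layout `[batch_size, seq_len, hidden_size * num_directions]` for `rnn_out` and `[num_layers * num_directions, batch_size, hidden_size]` for the final states.

```python
def expected_lstm_shapes(batch_size, seq_len, hidden_size, num_layers, is_bidirec):
    """Return the (rnn_out, last_h, last_c) shapes under the assumed convention.

    Assumption: rnn_out is batch-major, and a bidirectional LSTM joins the
    forward and backward hidden vectors along the last axis.
    """
    num_directions = 2 if is_bidirec else 1
    rnn_out = (batch_size, seq_len, hidden_size * num_directions)
    # last_h and last_c share one shape: one slot per layer per direction
    state = (num_layers * num_directions, batch_size, hidden_size)
    return rnn_out, state, state


# Values from the snippet below (batch_size=20, max_len=100, hidden_size=150)
print(expected_lstm_shapes(20, 100, 150, 1, True))
# ((20, 100, 300), (2, 20, 150), (2, 20, 150))
```

Static-graph shape inference typically reports the batch dimension of `rnn_out` as `-1`, so the first tuple corresponds to the `(-1, 100, 300)` expected below.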

```python
import paddle.fluid as fluid
import paddle.fluid.layers as layers

emb_dim = 256
vocab_size = 10000

# Token ids, batch-major: [batch, seq_len, 1]
data = fluid.layers.data(name='x1', shape=[-1, 100, 1], dtype='int64')
emb = fluid.layers.embedding(input=data, size=[vocab_size, emb_dim], is_sparse=True)

batch_size = 20
max_len = 100
dropout_prob = 0.2
hidden_size = 150
num_layers = 1

# Bidirectional: the initial states need num_layers * 2 along the first axis
init_h = layers.fill_constant([num_layers * 2, batch_size, hidden_size], 'float32', 0.0)
init_c = layers.fill_constant([num_layers * 2, batch_size, hidden_size], 'float32', 0.0)

rnn_out, last_h, last_c = layers.lstm(emb, init_h, init_c, max_len, hidden_size, num_layers,
                                      is_bidirec=True, dropout_prob=dropout_prob)

print(rnn_out.shape, last_h.shape, last_c.shape)
# rnn_out.shape  # (-1, 100, 150)
# last_h.shape   # (2, 20, 150)
# last_c.shape   # (2, 20, 150)
```

`last_c` and `last_h` come out correct, but `rnn_out` is clearly wrong: according to the docs it should be `[batch_size, seq_len, hidden_size*2]`, i.e. `(-1, 100, 300)`, shouldn't it?
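Why the last dimension is expected to double: conceptually, a bidirectional layer runs one pass forward and one pass backward over the sequence and, per the documented shape, joins the two hidden vectors at each time step. The toy sketch below uses plain nested Python lists (no Paddle involved, and `concat_directions` / `shape` are hypothetical helper names) to show the feature width going from `hidden_size` to `2 * hidden_size`; it assumes a simple concatenation on the last axis and is not Paddle's actual kernel.

```python
def concat_directions(fwd, bwd):
    """Join forward and backward outputs per (batch, time) step on the feature axis.

    fwd, bwd: nested lists shaped [batch][seq_len][hidden_size].
    """
    return [
        [f_t + b_t for f_t, b_t in zip(f_seq, b_seq)]  # list concat: hidden -> 2 * hidden
        for f_seq, b_seq in zip(fwd, bwd)
    ]


def shape(x):
    """Shape of a regular nested list, read off the first element at each level."""
    dims = []
    while isinstance(x, list):
        dims.append(len(x))
        x = x[0]
    return tuple(dims)


batch, seq_len, hidden = 2, 4, 3
fwd = [[[0.0] * hidden for _ in range(seq_len)] for _ in range(batch)]  # toy forward outputs
bwd = [[[1.0] * hidden for _ in range(seq_len)] for _ in range(batch)]  # toy backward outputs
out = concat_directions(fwd, bwd)
print(shape(fwd), shape(out))  # (2, 4, 3) (2, 4, 6)
```

With `hidden_size = 150`, the same rule gives a last dimension of 300, which is the value the issue expects for `rnn_out`.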