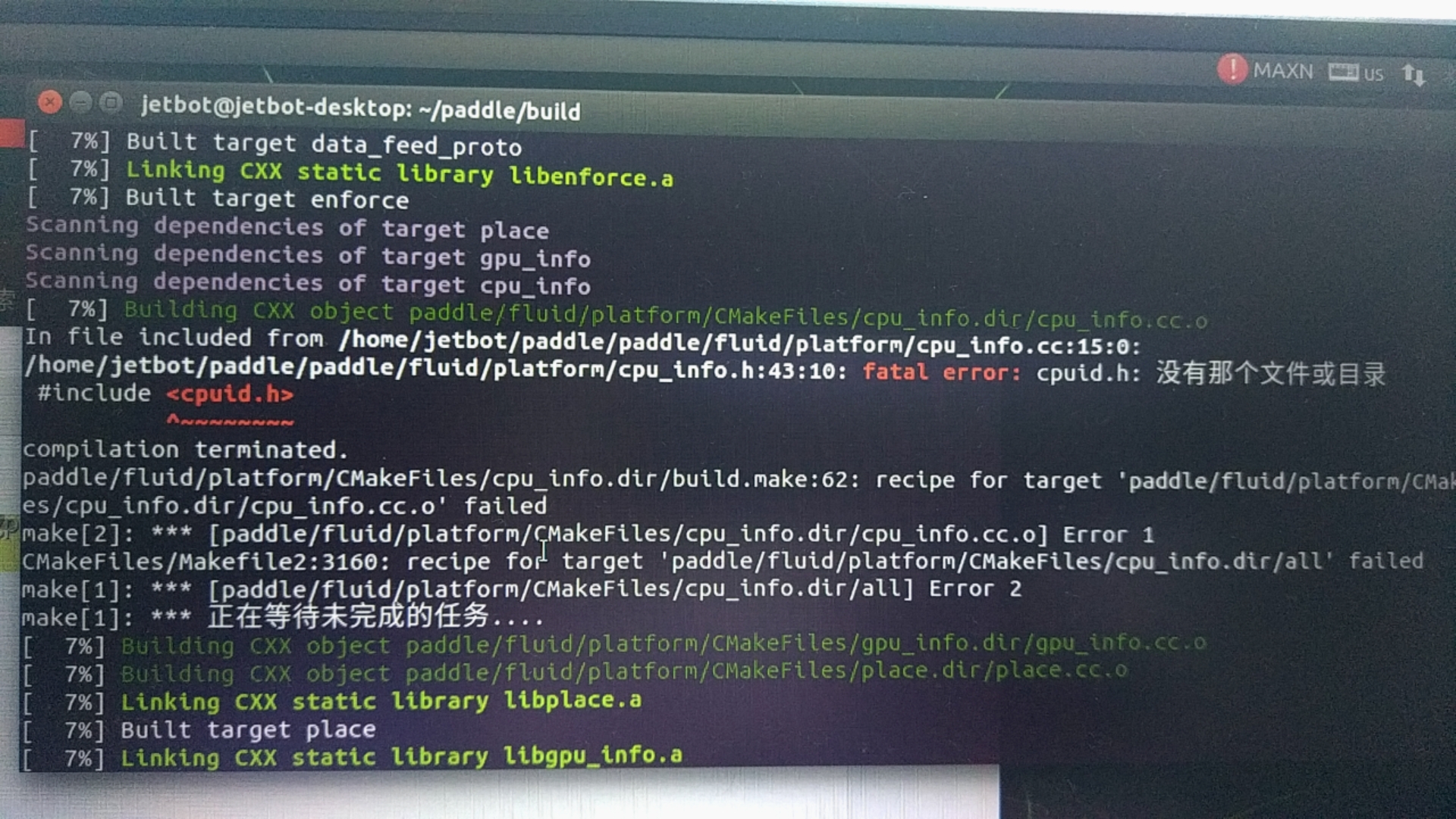

Jetson Nano: building Paddle inference fails because the cpuid.h header is missing

Created by: hang245141253

1) PaddlePaddle version: v1.8.1 2) CPU: Cortex-A57 3) GPU: CUDA 10.0, cuDNN 7.6 4) System environment: Ubuntu 18.04.4, Python 3.6.9

- Installation method:

Built locally from source: `cmake .. -DWITH_CONTRIB=OFF -DWITH_MKL=OFF -DWITH_MKLDNN=OFF -DWITH_TESTING=OFF -DCMAKE_BUILD_TYPE=Release -DON_INFER=ON -DWITH_PYTHON=ON -DWITH_XBYAK=OFF -DWITH_NV_JETSON=ON -DPY_VERSION=3.6`

Special environment: armv8, Jetson Nano development board

- Steps to reproduce: run `make -j4` after `cmake`

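- Problem description: The ARM toolchain does not provide the cpuid.h header, and from what I could find, ARM does not appear to support cpuid.h at all. I copied cpuid.h from Ubuntu on an x86 machine to the Nano, but the build still failed. ARM does not support cpuid.h, yet building Paddle inference from source requires it, which seems contradictory.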
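For context, x86-only headers such as cpuid.h are commonly wrapped in an architecture guard so that the same source builds on aarch64. The following is a minimal sketch of that pattern, not Paddle's actual code: the macro `SKETCH_HAS_X86_CPUID`, the constant `kHasX86Cpuid`, and the helper `cpu_has_avx()` are hypothetical names introduced only for illustration. It assumes GCC or Clang, whose x86 `<cpuid.h>` provides `__get_cpuid`.

```cpp
#include <cassert>

// <cpuid.h> is an x86-specific GCC/Clang header; skip it on other targets
// such as aarch64 (Jetson Nano).
#if defined(__x86_64__) || defined(__i386__)
#include <cpuid.h>
#define SKETCH_HAS_X86_CPUID 1  // hypothetical macro, for illustration only
#else
#define SKETCH_HAS_X86_CPUID 0
#endif

// Hypothetical constant mirroring the macro, so callers can branch on it.
constexpr bool kHasX86Cpuid = SKETCH_HAS_X86_CPUID != 0;

// Hypothetical helper: returns true if CPUID reports the AVX feature bit.
// On non-x86 targets there is no CPUID instruction, so it returns false.
bool cpu_has_avx() {
#if SKETCH_HAS_X86_CPUID
  unsigned int eax = 0, ebx = 0, ecx = 0, edx = 0;
  // __get_cpuid returns 0 when the requested leaf is not supported.
  if (__get_cpuid(1, &eax, &ebx, &ecx, &edx) == 0) return false;
  return (ecx & (1u << 28)) != 0;  // leaf 1, ECX bit 28 = AVX
#else
  return false;
#endif
}
```

With a guard like this, code on the Nano takes the fallback branch instead of needing a copied x86 header, which cannot work because the underlying instruction does not exist on ARM.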

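After commenting out cpuid.h and the code that depends on it, I continued the build. Some time later it failed again with the following error: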

In file included from /home/jetbot/paddle/paddle/fluid/inference/tensorrt/engine.h:32:0,
from /home/jetbot/paddle/paddle/fluid/inference/tensorrt/op_teller.h:21,
from /home/jetbot/paddle/paddle/fluid/inference/tensorrt/op_teller.cc:15:
/home/jetbot/paddle/paddle/fluid/inference/tensorrt/plugin/trt_plugin_factory.h:35:7: error: base class ‘class nvinfer1::IPluginFactory’ has accessible non-virtual destructor [-Werror=non-virtual-dtor]
 class PluginFactoryTensorRT : public nvinfer1::IPluginFactory,
       ^~~~~~~~~~~~~~~~~~~~~
In file included from /usr/include/c++/7/memory:80:0,
from /home/jetbot/paddle/paddle/fluid/inference/tensorrt/op_teller.h:16,
from /home/jetbot/paddle/paddle/fluid/inference/tensorrt/op_teller.cc:15:
/usr/include/c++/7/bits/unique_ptr.h: In instantiation of ‘void std::default_delete<_Tp>::operator()(_Tp*) const [with _Tp = nvinfer1::IOptimizationProfile]’:
/usr/include/c++/7/bits/unique_ptr.h:263:17: required from ‘std::unique_ptr<_Tp, _Dp>::~unique_ptr() [with _Tp = nvinfer1::IOptimizationProfile; _Dp = std::default_delete<nvinfer1::IOptimizationProfile>]’
/home/jetbot/paddle/paddle/fluid/inference/tensorrt/engine.h:138:23: required from here
/usr/include/c++/7/bits/unique_ptr.h:78:2: error: ‘nvinfer1::IOptimizationProfile::~IOptimizationProfile()’ is protected within this context
  delete __ptr;
  ^~~~~~
In file included from /usr/include/aarch64-linux-gnu/NvInfer.h:53:0,
from /home/jetbot/paddle/paddle/fluid/inference/tensorrt/engine.h:17,
from /home/jetbot/paddle/paddle/fluid/inference/tensorrt/op_teller.h:21,
from /home/jetbot/paddle/paddle/fluid/inference/tensorrt/op_teller.cc:15:
/usr/include/aarch64-linux-gnu/NvInferRuntime.h:1098:5: note: declared protected here
     ~IOptimizationProfile() noexcept = default;
     ^
cc1plus: all warnings being treated as errors
paddle/fluid/inference/tensorrt/CMakeFiles/tensorrt_op_teller.dir/build.make:62: recipe for target 'paddle/fluid/inference/tensorrt/CMakeFiles/tensorrt_op_teller.dir/op_teller.cc.o' failed
make[2]: *** [paddle/fluid/inference/tensorrt/CMakeFiles/tensorrt_op_teller.dir/op_teller.cc.o] Error 1
CMakeFiles/Makefile2:84730: recipe for target 'paddle/fluid/inference/tensorrt/CMakeFiles/tensorrt_op_teller.dir/all' failed
make[1]: *** [paddle/fluid/inference/tensorrt/CMakeFiles/tensorrt_op_teller.dir/all] Error 2
make[1]: *** Waiting for unfinished jobs....
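The "is protected within this context" error in the log is the generic result of holding a type with a protected destructor in a `std::unique_ptr` with the default deleter, which calls `delete` on the pointer. The sketch below reproduces that situation with hypothetical stand-in classes (`Profile`, `Builder`, `NoopDelete`, `demo()` are all invented here, none of them is TensorRT or Paddle code). It shows one possible workaround, a no-op deleter, which is only valid under the assumption that another object owns the pointee. Whether that holds for `nvinfer1::IOptimizationProfile` should be checked against the documentation of the installed TensorRT version.

```cpp
#include <cassert>
#include <memory>

// Hypothetical stand-in for a library type whose destructor is protected,
// so user code cannot call `delete` on it.
class Profile {
 public:
  int id() const { return id_; }

 protected:
  explicit Profile(int id) : id_(id) {}
  ~Profile() = default;

 private:
  int id_;
};

// Hypothetical owner: creates the profile, keeps ownership, and hands out
// a raw, non-owning pointer.
class Builder {
 public:
  Profile* createProfile() {
    profile_.reset(new Impl(7));
    return profile_.get();
  }

 private:
  // A derived type may invoke the protected base destructor.
  struct Impl : Profile {
    explicit Impl(int id) : Profile(id) {}
  };
  std::unique_ptr<Impl> profile_;
};

// std::unique_ptr<Profile> p(builder.createProfile());
// ^ would not compile: the default deleter needs an accessible ~Profile().

// Deleter that does nothing, because the Builder owns the object.
struct NoopDelete {
  void operator()(Profile*) const noexcept {}
};
using ProfileRef = std::unique_ptr<Profile, NoopDelete>;

// Uses the non-owning handle; the Builder frees the profile afterwards.
int demo() {
  Builder builder;
  ProfileRef ref(builder.createProfile());
  return ref->id();
}
```

A plain raw pointer would express the same non-owning relationship. The custom deleter is shown only because it keeps the `unique_ptr` member type that the failing code uses.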