Develop branch accuracy drop after upgrading to DNNL 1.2

Created by: lidanqing-intel

QAT Ernie INT8 accuracy drops in the develop branch after upgrading to DNNL 1.2.

Reproduce the problem:

python python/paddle/fluid/contrib/slim/tests/qat_int8_nlp_comparison.py --only_model=${save_int8_model_path} --infer_data=${dataset_dir}/1.8w.bs1 --labels=${dataset_dir}/label.xnli.dev --batch_size=50 --batch_num=1 --quantized_ops="fc,reshape2,transpose2" --acc_diff_threshold=0.01

Results:

develop branch:

2020-03-07 13:59:34,308-INFO: FP32: avg accuracy: 0.798394

2020-03-07 13:59:34,308-INFO: INT8: avg accuracy: 0.798795

release/1.7:

2020-03-07 02:15:19,564-INFO: FP32: avg accuracy: 0.798394

2020-03-07 02:15:19,564-INFO: INT8: avg accuracy: 0.799598

What has been done:

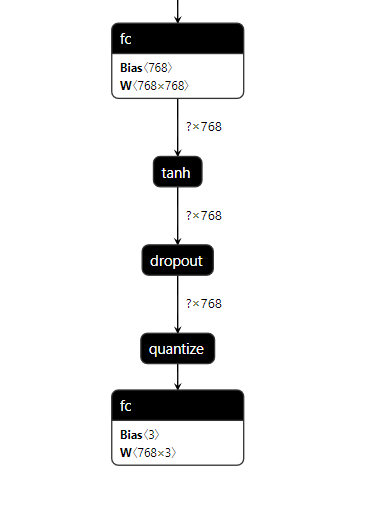

- Found that the tanh activation, which uses the MKL-DNN kernel eltwise_tanh, changed its output. All other ops produce the same output in both branches.

- Ran with MKLDNN_VERBOSE=1: the kernels used are the same in both branches.

develop:

dnnl_verbose,exec,cpu,inner_product,igemm_s8s8s32:jit,forward_inference,src_s8::blocked:ab:f0 wei_s8::blocked:ba:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,oscale:2;,,mb50ic768oc768

dnnl_verbose,exec,cpu,eltwise,jit:avx512_common,forward_inference,data_f32::blocked:ab:f0 diff_undef::undef::f0,,alg:eltwise_tanh alpha:0 beta:0,50x768,0.0869141

release/1.7:

dnnl_verbose,exec,cpu,inner_product,igemm_s8s8s32:jit,forward_inference,src_s8::blocked:ab:f0 wei_s8::blocked:ba:f0 bia_f32::blocked:a:f0 dst_f32::blocked:ab:f0,oscale:2;,,mb50ic768oc768,0.208984

dnnl_verbose,exec,cpu,eltwise,jit:avx512_common,forward_inference,data_f32::blocked:ab:f0 diff_undef::undef::f0,,alg:eltwise_tanh alpha:0 beta:0,50x768,0.0419922

- To be done:
  - 3.1 Use benchDNN to check single-op accuracy.
  - 3.2 Contact the MKL-DNN team.
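Item 3.1 proposes a single-op accuracy check with benchDNN. As a rough illustration of the kind of metric such a check reports, here is a minimal Python sketch. It assumes the tanh outputs to compare are available as flat lists of floats. Because the actual develop and release/1.7 eltwise_tanh dumps are not part of this report, the sketch substitutes a double-precision `math.tanh` reference and a hypothetical float32 formulation of tanh. The helper names `to_f32`, `tanh_f32_via_exp` and `compare_outputs` are illustrative only; they are not Paddle, DNNL or benchDNN APIs, and the formula is not the DNNL kernel's algorithm.

```python
import math
import random
import struct


def to_f32(x: float) -> float:
    """Round a Python float (IEEE double) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def tanh_f32_via_exp(x: float) -> float:
    """Hypothetical stand-in for a second tanh kernel (NOT the DNNL algorithm).

    Evaluates tanh(x) = 1 - 2 / (exp(2x) + 1) with every intermediate
    rounded to float32. Inputs are clamped to [-9, 9] so exp() stays within
    float32 range; beyond that tanh is within float32 rounding of +/-1.
    """
    x = to_f32(max(-9.0, min(9.0, x)))
    e = to_f32(math.exp(to_f32(2.0 * x)))
    return to_f32(1.0 - to_f32(2.0 / to_f32(e + 1.0)))


def compare_outputs(ref, test, atol=1e-6):
    """Return (max absolute difference, number of elements above atol)."""
    diffs = [abs(r - t) for r, t in zip(ref, test)]
    return max(diffs), sum(d > atol for d in diffs)


if __name__ == "__main__":
    # Synthetic input with the 50x768 shape seen in the eltwise verbose line.
    rng = random.Random(0)
    xs = [rng.uniform(-4.0, 4.0) for _ in range(50 * 768)]
    ref = [math.tanh(x) for x in xs]          # double-precision reference
    test = [tanh_f32_via_exp(x) for x in xs]  # float32 candidate
    max_diff, n_bad = compare_outputs(ref, test)
    print(f"max |diff| = {max_diff:.3e}, elements above atol: {n_bad}")
```

To apply the same idea to this issue, `ref` and `test` would be replaced by the eltwise_tanh outputs dumped from the develop and release/1.7 builds for the same input. A maximum difference far above float32 rounding error would suggest the kernel itself changed, rather than its input.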