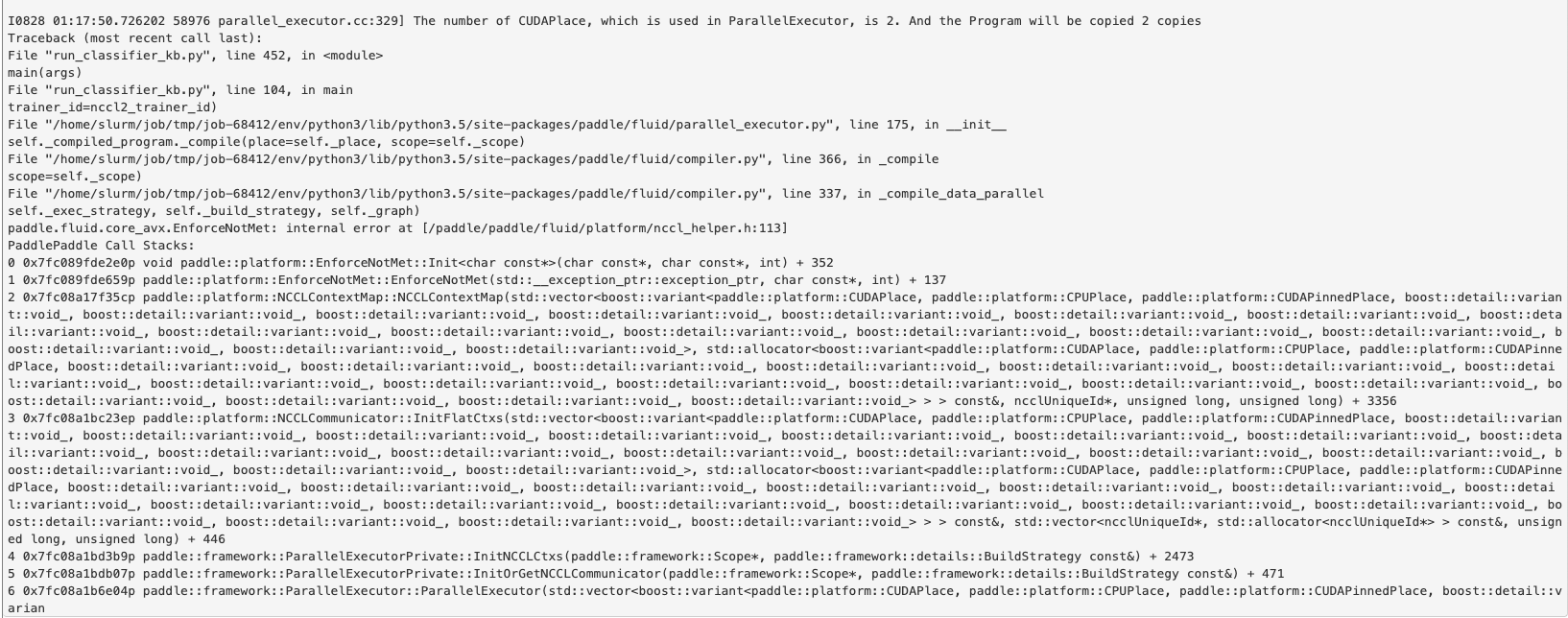

Random NCCL errors during multi-GPU training

Created by: daizh

- Version and environment info:
  1) PaddlePaddle version: 1.5.0
  2) CPU:
  3) GPU: V100, 2 cards, CUDA 9, cuDNN 7
  4) OS: CentOS, Python 3.5.3

- Model info:
  1) Model name: ERNIE with some attention layers added on top
  2) Dataset: GLUE (CoLA, RTE, and MRPC all trigger the error)

- Problem description:

```python
if args.do_train:
    exec_strategy = fluid.ExecutionStrategy()
    if args.use_fast_executor:
        exec_strategy.use_experimental_executor = True
    exec_strategy.num_threads = dev_count
    exec_strategy.num_iteration_per_drop_scope = args.num_iteration_per_drop_scope

    train_exe = fluid.ParallelExecutor(
        use_cuda=args.use_cuda,
        loss_name=graph_vars["loss"].name,
        exec_strategy=exec_strategy,
        main_program=train_program,
        num_trainers=nccl2_num_trainers,
        trainer_id=nccl2_trainer_id)  # line 104: where the error is raised

    train_pyreader.decorate_tensor_provider(train_data_generator)
else:
    train_exe = None

test_exe = exe
if args.do_val or args.do_test:
    if args.use_multi_gpu_test:
        test_exe = fluid.ParallelExecutor(
            use_cuda=args.use_cuda,
            main_program=test_prog,
            share_vars_from=train_exe)

if args.do_train:
    train_pyreader.start()
    steps = 0
    if warmup_steps > 0:
        graph_vars["learning_rate"] = scheduled_lr

    ce_info = []
    time_begin = time.time()
    last_epoch = 0
    current_epoch = 0
```