Kubernetes cluster distributed training error

Created by: TheodoreG

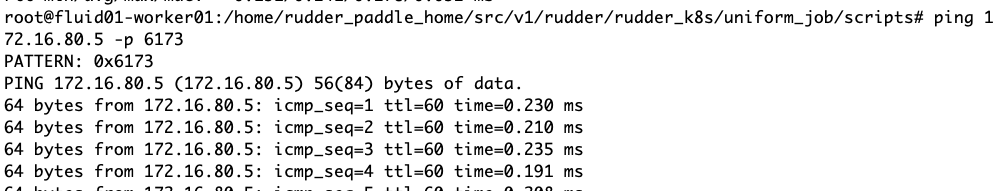

Hi, I am using Paddle distributed training in "NCCL2" mode within a Kubernetes cluster. I have two workers in one namespace, and they can ping each other successfully:

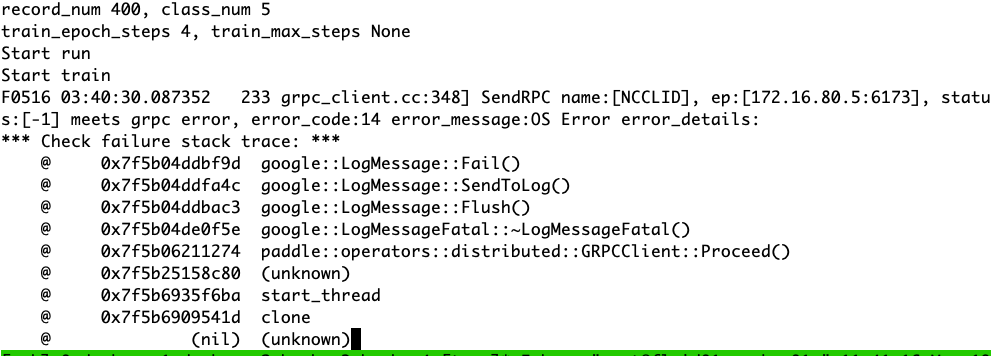

But when I start training, I get errors:

This error only happens when the two pods are assigned to different nodes. When both pods are assigned to the same node, which is equivalent to multi-GPU training, there is no such error. How can I solve it?
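One check that might help narrow this down: a successful ping only shows that ICMP gets through, while the trainers also need to reach each other over TCP on their configured endpoints. Below is a minimal sketch of a TCP reachability check that could be run from inside each pod against the other pod. The function name `can_connect` and the command-line format are hypothetical, and the host and port must be replaced with the actual trainer endpoint used in the job.

```python
import socket
import sys


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and performs the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    # Usage (hypothetical): python check_tcp.py <host>:<port> [<host>:<port> ...]
    for endpoint in sys.argv[1:]:
        host, _, port = endpoint.rpartition(":")
        status = "reachable" if can_connect(host, int(port)) else "NOT reachable"
        print(f"{endpoint}: {status}")
```

The check only succeeds while something is listening on the target port, so it is most useful while the other trainer is running. If it fails across nodes but succeeds on the same node, the problem is more likely in the cluster network (for example a network policy or the overlay network) than in the training script.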