Conv_op fails on MM_DNN GPU inference

Created by: luotao1

MM_DNN is run for prediction on 40,000+ samples.

Compile with: -DWITH_INFERENCE_API_TEST=ON

Run command:

cd build

./paddle/fluid/inference/tests/api/test_analyzer_mm_dnn --infer_model=third_party/inference_demo/mm_dnn/model/ --infer_data=third_party/inference_demo/mm_dnn/data.txt --gtest_filter=Analyzer_MM_DNN.profile --test_all_data

Environment: V100, image: paddlepaddle/paddle_manylinux_devel:cuda9.0_cudnn7

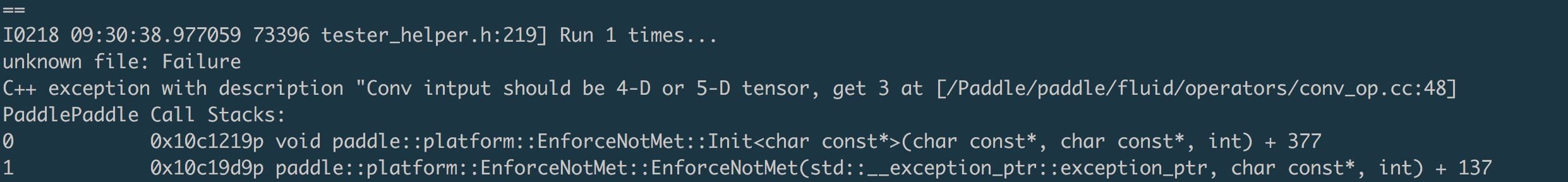

The test passes on CPU but fails with an error on GPU.

To run on GPU, paddle/fluid/inference/tests/api/analyzer_mm_dnn_tester.cc needs the following change:

diff --git a/paddle/fluid/inference/tests/api/analyzer_mm_dnn_tester.cc b/paddle/fluid/inference/tests/api/analyzer_mm_dnn_tester.cc
index 089f655..e55f45d 100644
--- a/paddle/fluid/inference/tests/api/analyzer_mm_dnn_tester.cc
+++ b/paddle/fluid/inference/tests/api/analyzer_mm_dnn_tester.cc
@@ -76,7 +76,8 @@ void PrepareInputs(std::vector<PaddleTensor> *input_slots, DataRecord *data,
 void SetConfig(AnalysisConfig *cfg) {
   cfg->SetModel(FLAGS_infer_model);
-  cfg->DisableGpu();
+  cfg->EnableUseGpu(600, 0);
   cfg->SwitchSpecifyInputNames();
   cfg->SwitchIrOptim();
 }
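
One possible way to avoid editing the tester every time the device changes is to select the device from a flag. The sketch below is not Paddle code: `MockConfig` is a hypothetical stand-in for `AnalysisConfig` that only records the calls so the example compiles on its own, and `use_gpu` is an assumed parameter, not a flag the tester defines. The arguments to `EnableUseGpu` are the initial GPU memory pool size in MB and the device id, with the same values as in the diff above.

```cpp
#include <cstdint>

// Hypothetical stand-in for AnalysisConfig. It only records the device
// settings, so this sketch builds without Paddle.
struct MockConfig {
  bool use_gpu = false;
  uint64_t memory_pool_init_size_mb = 0;
  int device_id = 0;

  void DisableGpu() { use_gpu = false; }

  void EnableUseGpu(uint64_t pool_mb, int id) {
    use_gpu = true;
    memory_pool_init_size_mb = pool_mb;
    device_id = id;
  }
};

// Picks the device from a flag instead of hard-coding it.
// `use_gpu` is an assumed parameter for illustration only.
void SetDevice(MockConfig *cfg, bool use_gpu) {
  if (use_gpu) {
    cfg->EnableUseGpu(600, 0);  // 600 MB initial pool, GPU 0, as in the diff
  } else {
    cfg->DisableGpu();
  }
}
```

With something like this in `SetConfig`, the same binary could reproduce both the passing CPU run and the failing GPU run without a rebuild.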