Existing training job fails after upgrading Paddle to gpu-0.14.0

Created by: HugoLian

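After the upgrade, running the existing job on GPU fails with the error below. The only change between runs is the daily training data, so in theory the data itself should not differ in any way that matters.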

I0718 14:57:56.227458 1672 Util.cpp:166] commandline: --use_gpu=True --trainer_count=1

I0718 14:58:02.015449 1672 GradientMachine.cpp:94] Initing parameters..

I0718 14:58:05.849926 1672 GradientMachine.cpp:101] Init parameters done.

F0718 14:58:24.706127 1672 Matrix.cpp:653] Not supported

*** Check failure stack trace: ***

@ 0x7f9fe05c868d google::LogMessage::Fail()

@ 0x7f9fe05cc13c google::LogMessage::SendToLog()

@ 0x7f9fe05c81b3 google::LogMessage::Flush()

@ 0x7f9fe05cd64e google::LogMessageFatal::~LogMessageFatal()

@ 0x7f9fe03df2c6 paddle::GpuMatrix::mul()

@ 0x7f9fe02e3235 paddle::FullyConnectedLayer::forward()

@ 0x7f9fe01da79d paddle::NeuralNetwork::forward()

@ 0x7f9fe01b1ee3 paddle::GradientMachine::forwardBackward()

@ 0x7f9fe05a44e4 GradientMachine::forwardBackward()

@ 0x7f9fe013cff9 _wrap_GradientMachine_forwardBackward

@ 0x7fa0173053d3 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa0173054d1 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa0173054d1 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa0173054d1 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa0173054d1 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa017307272 PyEval_EvalCode

@ 0x7fa01732165c run_mod

@ 0x7fa017321730 PyRun_FileExFlags

@ 0x7fa017322c3c PyRun_SimpleFileExFlags

@ 0x7fa0173344fc Py_Main

@ 0x38bfc21b45 (unknown)

Thread [140325559519040] Forwarding __fc_layer_0__,

*** Aborted at 1531897104 (unix time) try "date -d @1531897104" if you are using GNU date ***

PC: @ 0x0 (unknown)

*** SIGABRT (@0x1f400000688) received by PID 1672 (TID 0x7fa01720c740) from PID 1672; stack trace: ***

@ 0x38c040f130 (unknown)

@ 0x38bfc359d9 (unknown)

@ 0x38bfc370e8 (unknown)

@ 0x7f9fe05d2bcb google::FindSymbol()

@ 0x7f9fe05d358a google::GetSymbolFromObjectFile()

@ 0x7f9fe05d3c52 google::SymbolizeAndDemangle()

@ 0x7f9fe05d1458 google::DumpStackTrace()

@ 0x7f9fe05d1516 google::DumpStackTraceAndExit()

@ 0x7f9fe05c868d google::LogMessage::Fail()

@ 0x7f9fe05cc13c google::LogMessage::SendToLog()

@ 0x7f9fe05c81b3 google::LogMessage::Flush()

@ 0x7f9fe05cd64e google::LogMessageFatal::~LogMessageFatal()

@ 0x7f9fe03df2c6 paddle::GpuMatrix::mul()

@ 0x7f9fe02e3235 paddle::FullyConnectedLayer::forward()

@ 0x7f9fe01da79d paddle::NeuralNetwork::forward()

@ 0x7f9fe01b1ee3 paddle::GradientMachine::forwardBackward()

@ 0x7f9fe05a44e4 GradientMachine::forwardBackward()

@ 0x7f9fe013cff9 _wrap_GradientMachine_forwardBackward

@ 0x7fa0173053d3 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa0173054d1 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa0173054d1 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa0173054d1 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa0173054d1 PyEval_EvalFrameEx

@ 0x7fa017307160 PyEval_EvalCodeEx

@ 0x7fa017307272 PyEval_EvalCode

@ 0x7fa01732165c run_mod

@ 0x7fa017321730 PyRun_FileExFlags

@ 0x7fa017322c3c PyRun_SimpleFileExFlags

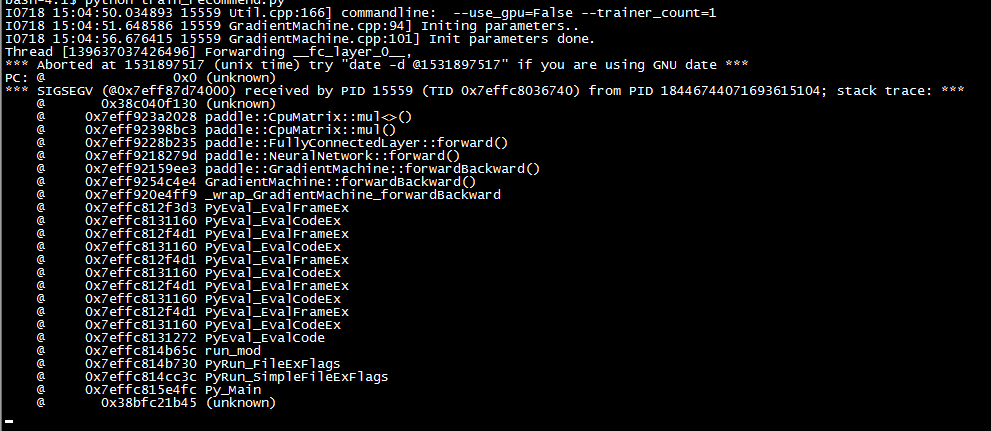

Aborted改用CPU运行时有时可以跑一些batch,多数情况下报错并卡死在这里:

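What could be causing this? Also, `trainer_count > 1` worked fine in the previous version. Is training with `trainer_count > 1` no longer supported in the new version?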