Loss with Parallel executor converges slower than with MKLDNN

Created by: bingyanghuang

Hi,

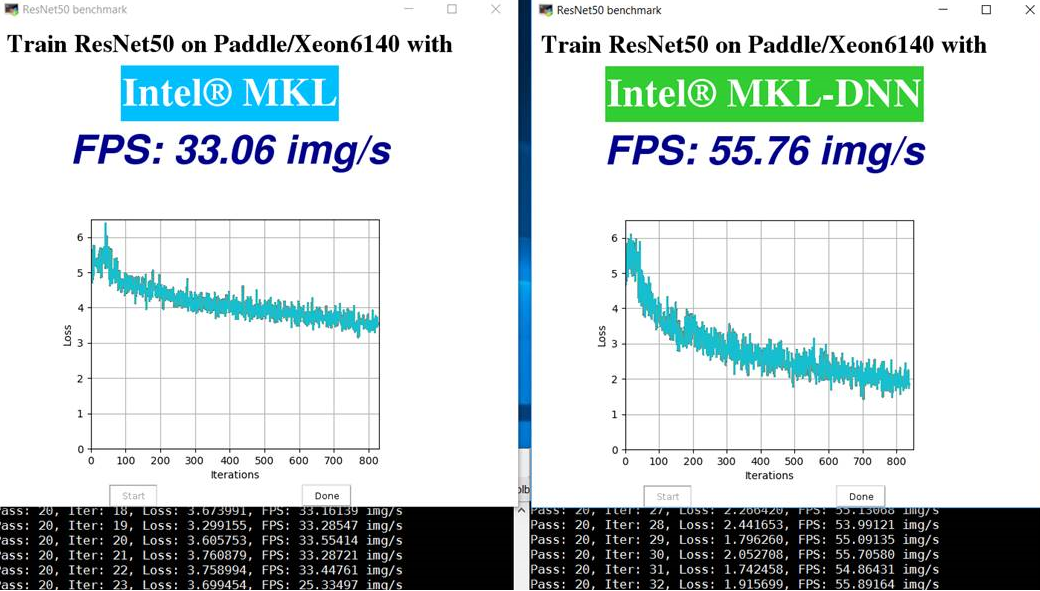

When I ran the demo GUI with MKL optimization on Parallel executor and with MKLDNN, I found that after 600 iterations the loss with MKL was converging much more slowly than the loss with MKLDNN. The figure shows the two loss curves.

The demo is a Python script that I saved as a .txt file; you can convert it back to Python by changing the extension from ".txt" to ".py". Then run it with python filename and click "start" in the GUI.
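If renaming by hand is inconvenient, the conversion can be sketched as a small helper. This is only an illustration: the function name `txt_to_py` is hypothetical and not part of the attached scripts, and it copies the file rather than renaming it so the original attachment stays untouched.

```python
import shutil
from pathlib import Path


def txt_to_py(txt_path):
    """Copy a script saved as .txt to a sibling file with a .py extension.

    Hypothetical helper for illustration; not part of the attached demo.
    """
    src = Path(txt_path)
    if src.suffix.lower() != ".txt":
        raise ValueError(f"expected a .txt file, got: {src.name}")
    dst = src.with_suffix(".py")  # same folder and name, new extension
    shutil.copyfile(src, dst)     # leave the downloaded .txt in place
    return dst
```

For example, `txt_to_py("GUI MKL New.txt")` would write a copy next to the attachment with the .py extension, which can then be run with python as described above.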

This is the ResNet50 Python script for MKL with Parallel executor: GUI MKL New.txt

This is the ResNet50 Python script for MKL-DNN: GUI training for ResNet50 benchmark.txt