Merge branch 'develop' of https://github.com/PaddlePaddle/Paddle into pad_op

Showing

doc/design/ops/dist_train.md

0 → 100644

文件已添加

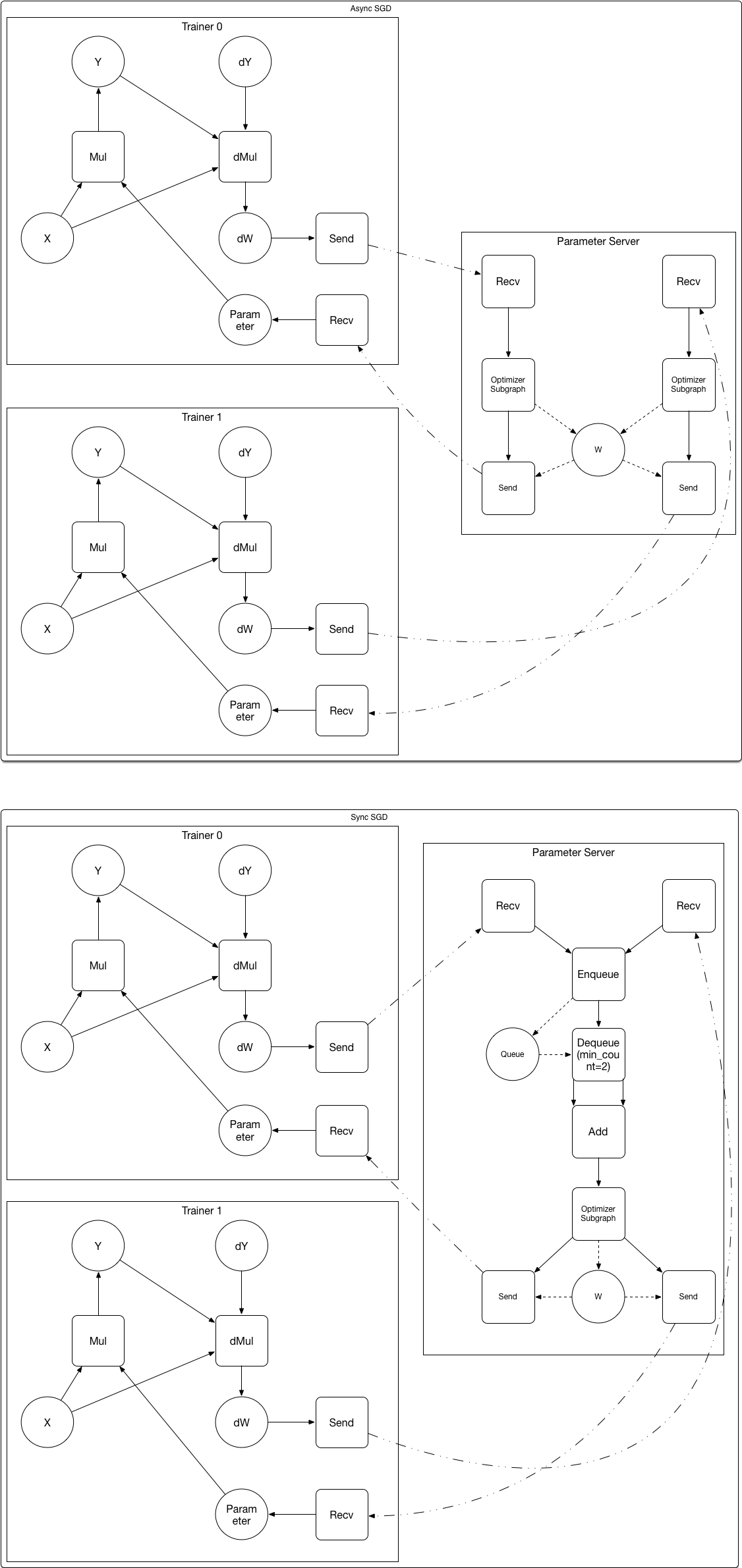

doc/design/ops/src/dist-graph.png

0 → 100644

222.2 KB

文件已添加

27.9 KB

paddle/operators/sum_op.cc

0 → 100644

paddle/operators/sum_op.cu

0 → 100644

paddle/operators/sum_op.h

0 → 100644