Apply IOU to test_parallel_executor_seresnext_base_gpu (#43812)

1. test_parallel_executor_seresnext_base_gpu failed on 2 P100 GPUs with `470.82` driver.

```

======================================================================

FAIL: test_seresnext_with_learning_rate_decay (test_parallel_executor_seresnext_base_gpu.TestResnetGPU)

----------------------------------------------------------------------

Traceback (most recent call last):

File "/opt/paddle/paddle/build/python/paddle/fluid/tests/unittests/test_parallel_executor_seresnext_base_gpu.py", line 32, in test_seresnext_with_learning_rate_decay

self._compare_result_with_origin_model(

File "/opt/paddle/paddle/build/python/paddle/fluid/tests/unittests/seresnext_test_base.py", line 56, in _compare_result_with_origin_model

self.assertAlmostEquals(

AssertionError: 6.8825445 != 6.882531 within 1e-05 delta (1.335144e-05 difference)

----------------------------------------------------------------------

```

2. To be more accuracte on evaluating loss convergence, we proposed to apply IOU as metric, instead of comparing first and last loss values.

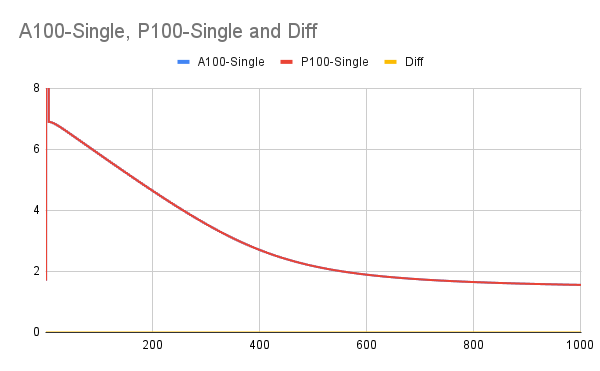

3. As offline discussion, we also evaluated convergence on P100 and A100 in 1000 interations to make sure this UT have the same convergence property on both devices. The curves are showed below.

Showing

想要评论请 注册 或 登录