Merge branch 'support_scalar_kernels' of https://github.com/Xreki/Paddle into support_scalar_kernels

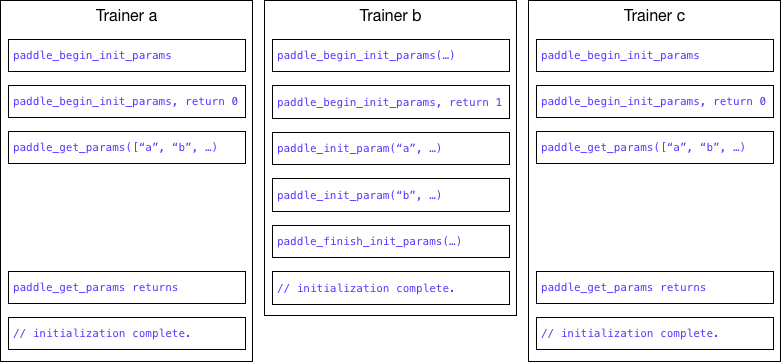

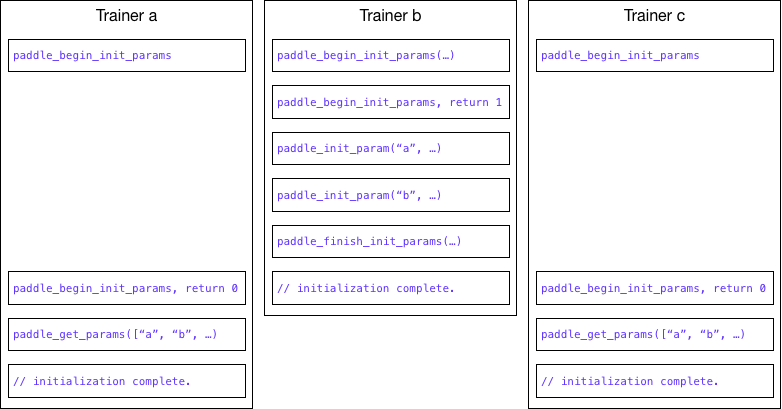

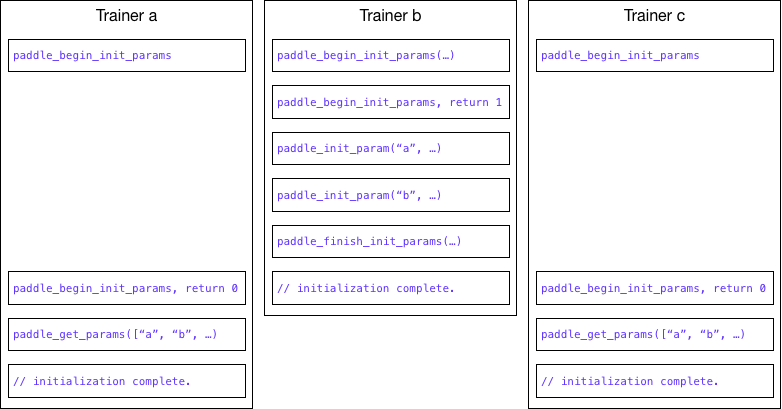

doc/design/parameters_in_cpp.md

0 → 100644

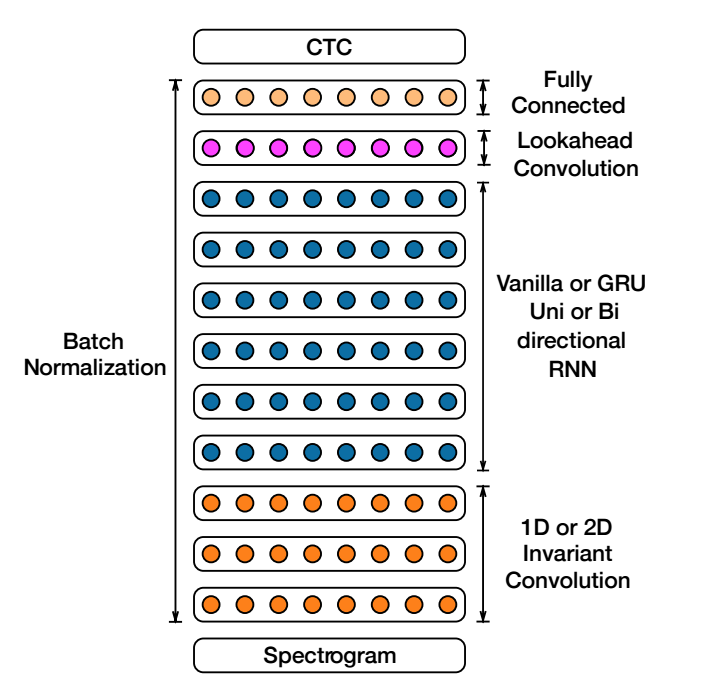

doc/design/speech/README.MD

0 → 100644

113.8 KB

paddle/go/cmd/master/master.go

0 → 100644

paddle/go/master/service.go

0 → 100644