Merge remote-tracking branch 'upstream/develop' into fluid

Conflicts: paddle/platform/place.h

Showing

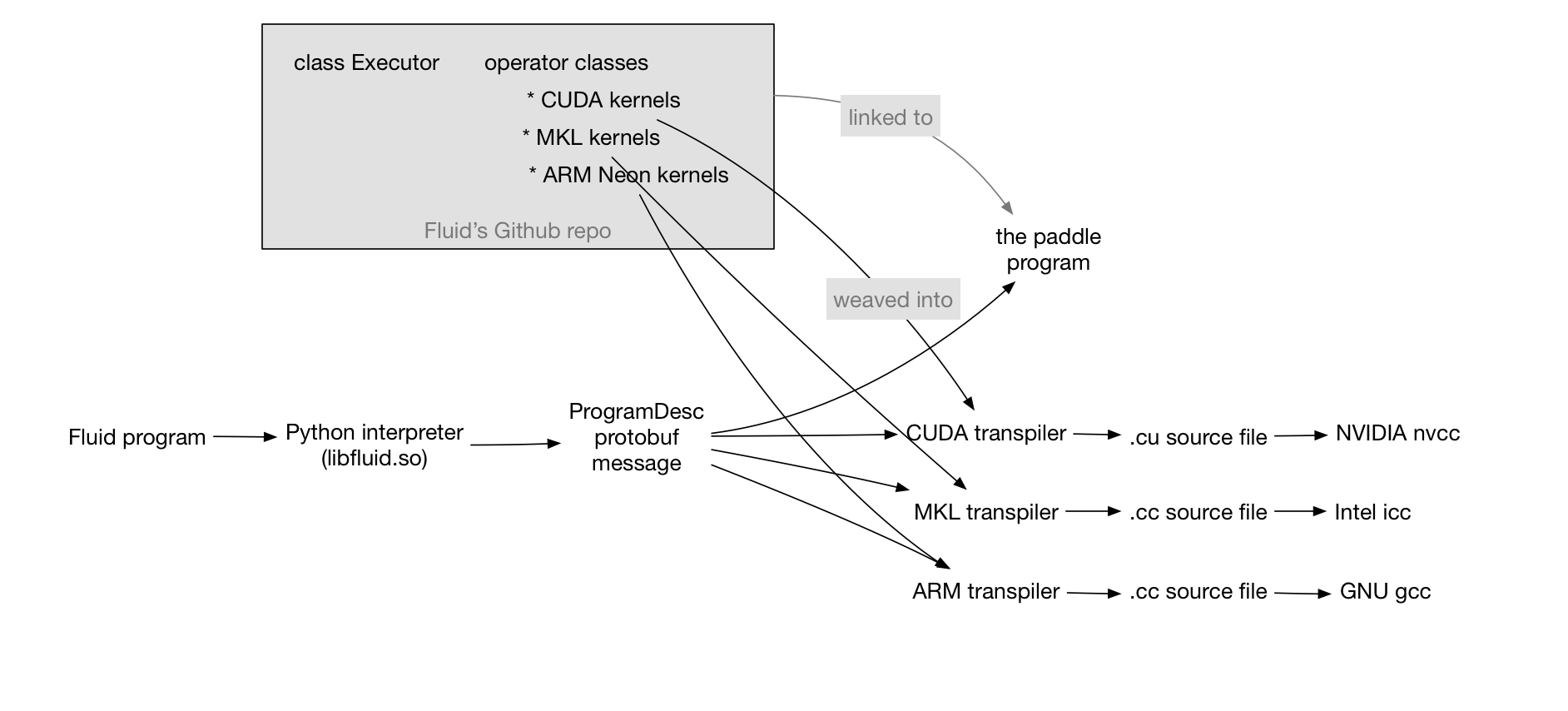

doc/design/fluid-compiler.graffle

0 → 100644

File added

doc/design/fluid-compiler.png

0 → 100644

121.2 KB

doc/design/fluid.md

0 → 100644