Merge pull request #772 from codeWorm2015/metal

Metal

Showing

.gitmodules

0 → 100644

Dockerfile

0 → 100644

demo/ReadMe.md

0 → 100644

demo/getDemo.sh

0 → 100644

doc/build.md

0 → 100644

doc/design_doc.md

0 → 100644

doc/development_doc.md

0 → 100644

doc/images/devices.png

0 → 100644

116.0 KB

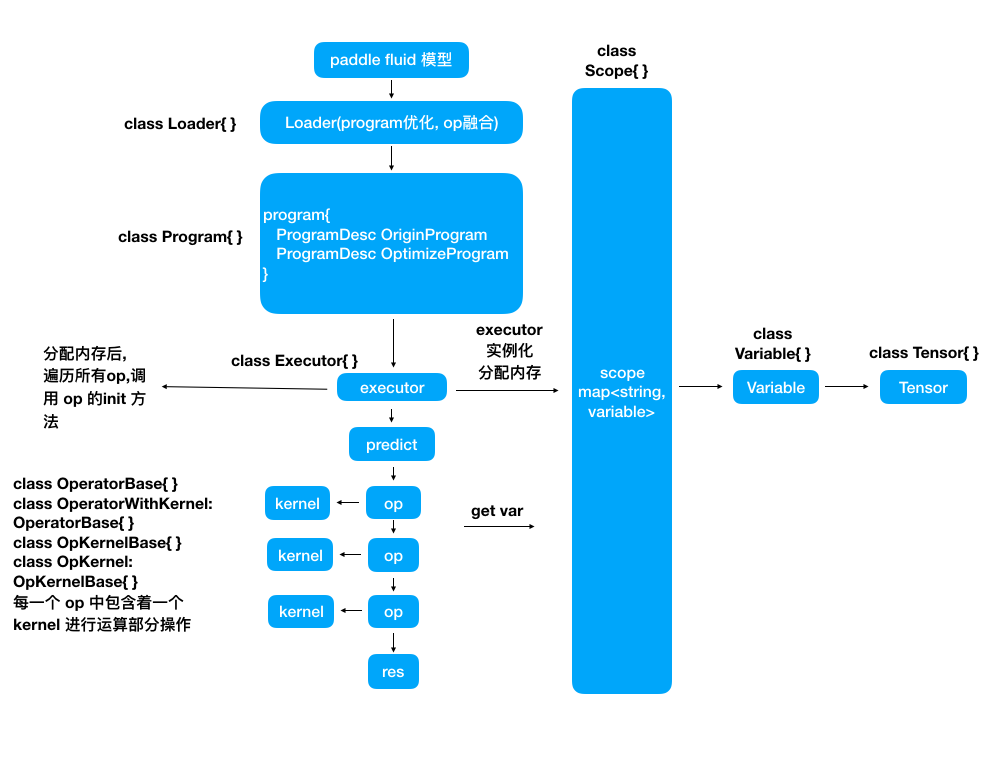

doc/images/flow_chart.png

0 → 100644

110.3 KB

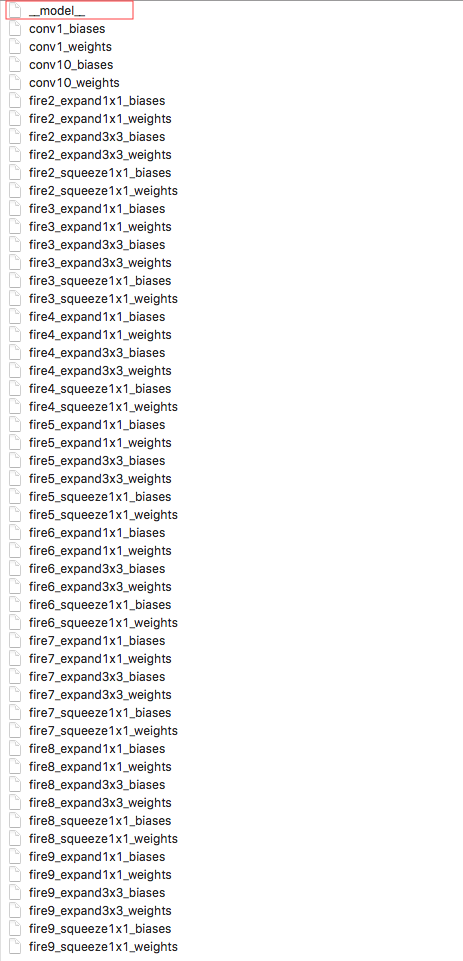

doc/images/model_desc.png

0 → 100644

162.1 KB

10.1 KB

doc/quantification.md

0 → 100644

src/common/common.h

0 → 100644

src/fpga/api/fpga_api.cpp

0 → 100644

src/fpga/api/fpga_api.h

0 → 100644

src/fpga/fpga_quantilization.cpp

0 → 100644

src/fpga/fpga_quantilization.h

0 → 100644

src/io/api.cc

0 → 100644

src/io/api_paddle_mobile.cc

0 → 100644

src/io/api_paddle_mobile.h

0 → 100644

此差异已折叠。

src/io/loader.cpp

0 → 100644

此差异已折叠。

src/io/loader.h

0 → 100644

此差异已折叠。

src/io/paddle_inference_api.h

0 → 100644

此差异已折叠。

src/io/paddle_mobile.cpp

0 → 100644

此差异已折叠。

src/io/paddle_mobile.h

0 → 100644

此差异已折叠。

src/ios_io/PaddleMobile.h

0 → 100644

此差异已折叠。

src/ios_io/PaddleMobile.mm

0 → 100644

此差异已折叠。

src/ios_io/op_symbols.h

0 → 100644

此差异已折叠。

src/operators/conv_transpose_op.h

0 → 100644

此差异已折叠。

src/operators/dropout_op.cpp

0 → 100644

此差异已折叠。

src/operators/dropout_op.h

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

src/operators/fusion_fc_relu_op.h

0 → 100644

此差异已折叠。

src/operators/im2sequence_op.cpp

0 → 100644

此差异已折叠。

src/operators/im2sequence_op.h

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

src/operators/kernel/mali/batchnorm_kernel.cpp

100644 → 100755

此差异已折叠。

此差异已折叠。

此差异已折叠。

src/operators/kernel/mali/conv_kernel.cpp

100644 → 100755

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

src/operators/prelu_op.cpp

0 → 100644

此差异已折叠。

src/operators/prelu_op.h

0 → 100644

此差异已折叠。

src/operators/resize_op.cpp

0 → 100644

此差异已折叠。

src/operators/resize_op.h

0 → 100644

此差异已折叠。

src/operators/scale_op.cpp

0 → 100644

此差异已折叠。

src/operators/scale_op.h

0 → 100644

此差异已折叠。

src/operators/slice_op.cpp

0 → 100644

此差异已折叠。

src/operators/slice_op.h

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

test/fpga/test_tensor_quant.cpp

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

test/operators/test_prelu_op.cpp

0 → 100644

此差异已折叠。

test/operators/test_resize_op.cpp

0 → 100644

此差异已折叠。

test/operators/test_scale_op.cpp

0 → 100644

此差异已折叠。

test/operators/test_slice_op.cpp

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

文件已移动

tools/arm-platform.cmake

0 → 100644

此差异已折叠。

此差异已折叠。

tools/net-detail.awk

0 → 100644

此差异已折叠。

tools/net.awk

0 → 100644

此差异已折叠。

tools/op.cmake

0 → 100644

此差异已折叠。

此差异已折叠。

tools/quantification/README.md

0 → 100644

此差异已折叠。

tools/quantification/convert.cpp

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

tools/run.sh

已删除

100644 → 0

此差异已折叠。

此差异已折叠。

此差异已折叠。