PaddlePaddle / Paddle-Lite


Commit 0cc72c42, authored Mar 05, 2020 by huzhiqiang, committed by GitHub on Mar 05, 2020


[x86 doc] add demo for x86 API (#3090)

Parent: c505ab26

2 changed files with 46 additions and 27 deletions:

- docs/advanced_user_guides/x86.md (+44, -25)
- docs/api_reference/cxx_api_doc.md (+2, -2)

docs/advanced_user_guides/x86.md @ 0cc72c42

````diff
@@ -9,8 +9,8 @@ Paddle-Lite supports building the x86 inference library in Docker or on Linux. For environment setup, see
 1. Download the code
 ```bash
 git clone https://github.com/PaddlePaddle/Paddle-Lite.git
-# Must switch to a version after release/v2.0.0
-git checkout <release_tag>
+# Switch to the release branch
+git checkout release/v2.3
 ```

 2. Build from source
````

...

@@ -42,43 +42,56 @@ x86编译结果位于 `build.lite.x86/inference_lite_lib`

...

@@ -42,43 +42,56 @@ x86编译结果位于 `build.lite.x86/inference_lite_lib`

## x86预测API使用示例

## x86预测API使用示例

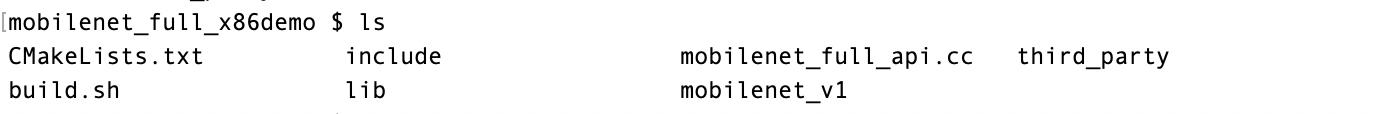

1、我们提供Linux环境下x86 API运行mobilenet_v1的示例:

[

mobilenet_full_x86demo

](

https://paddlelite-data.bj.bcebos.com/x86/mobilenet_full_x86demo.zip

)

。下载解压后内容如下:

`mobilenet_v1`

为模型文件、

`lib`

和

`include`

分别是Paddle-Lite的预测库和头文件、

`third_party`

下是编译时依赖的第三方库

`mklml`

、

`mobilenet_full_api.cc`

是x86示例的源代码、

`build.sh`

为编译的脚本。

2、demo内容与使用方法

```

bash

# 1、编译

sh build.sh

```

编译结果为当前目录下的

`mobilenet_full_api `

```

bash

# 2、执行预测

mobilenet_full_api mobilenet_v1

```

`mobilenet_v1`

为当前目录下的模型路径,

`mobilenet_full_api`

为第一步编译出的可执行文件。

3、示例源码

`mobilenet_full_api.cc`

```

c++

```

c++

#include <gflags/gflags.h>

#include <iostream>

#include <iostream>

#include <vector>

#include <vector>

#include "paddle_api.h" // NOLINT

#include "paddle_api.h"

#include "paddle_use_kernels.h" // NOLINT

#include "paddle_use_ops.h" // NOLINT

#include "paddle_use_passes.h" // NOLINT

using

namespace

paddle

::

lite_api

;

// NOLINT

DEFINE_string

(

model_dir

,

""

,

"Model dir path."

);

using

namespace

paddle

::

lite_api

;

// NOLINT

DEFINE_string

(

optimized_model_dir

,

""

,

"Optimized model dir."

);

DEFINE_bool

(

prefer_int8_kernel

,

false

,

"Prefer to run model with int8 kernels"

);

int64_t

ShapeProduction

(

const

shape_t

&

shape

)

{

int64_t

ShapeProduction

(

const

shape_t

&

shape

)

{

int64_t

res

=

1

;

int64_t

res

=

1

;

for

(

auto

i

:

shape

)

res

*=

i

;

for

(

auto

i

:

shape

)

res

*=

i

;

return

res

;

return

res

;

}

}

void

RunModel

()

{

// 1. Set CxxConfig

CxxConfig

config

;

config

.

set_model_file

(

FLAGS_model_dir

+

"model"

);

config

.

set_param_file

(

FLAGS_model_dir

+

"params"

);

config

.

set_valid_places

({

lite_api

::

Place

{

TARGET

(

kX86

),

PRECISION

(

kFloat

)}

});

void

RunModel

(

std

::

string

model_dir

)

{

// 1. Create CxxConfig

CxxConfig

config

;

config

.

set_model_dir

(

model_dir

);

config

.

set_valid_places

({

Place

{

TARGET

(

kX86

),

PRECISION

(

kFloat

)},

Place

{

TARGET

(

kHost

),

PRECISION

(

kFloat

)}

});

// 2. Create PaddlePredictor by CxxConfig

// 2. Create PaddlePredictor by CxxConfig

std

::

shared_ptr

<

PaddlePredictor

>

predictor

=

std

::

shared_ptr

<

PaddlePredictor

>

predictor

=

CreatePaddlePredictor

<

CxxConfig

>

(

config

);

CreatePaddlePredictor

<

CxxConfig

>

(

config

);

// 3. Prepare input data

// 3. Prepare input data

std

::

unique_ptr

<

Tensor

>

input_tensor

(

std

::

move

(

predictor

->

GetInput

(

0

)));

std

::

unique_ptr

<

Tensor

>

input_tensor

(

std

::

move

(

predictor

->

GetInput

(

0

)));

input_tensor

->

Resize

(

shape_t

({

1

,

3

,

224

,

224

})

);

input_tensor

->

Resize

(

{

1

,

3

,

224

,

224

}

);

auto

*

data

=

input_tensor

->

mutable_data

<

float

>

();

auto

*

data

=

input_tensor

->

mutable_data

<

float

>

();

for

(

int

i

=

0

;

i

<

ShapeProduction

(

input_tensor

->

shape

());

++

i

)

{

for

(

int

i

=

0

;

i

<

ShapeProduction

(

input_tensor

->

shape

());

++

i

)

{

data

[

i

]

=

1

;

data

[

i

]

=

1

;
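The input-preparation step in the hunk above sizes the buffer with `ShapeProduction` and fills it with ones. A minimal standalone sketch of that arithmetic follows. It assumes `shape_t` is a vector of `int64_t` (re-declared here so the snippet builds without `paddle_api.h`), and `MakeConstantInput` is a hypothetical helper introduced only for illustration; it is not part of the demo or of Paddle-Lite.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumption: mirrors the shape_t alias the demo gets from paddle_api.h.
using shape_t = std::vector<int64_t>;

// Number of elements in a tensor of the given shape (product of all dims).
int64_t ShapeProduction(const shape_t& shape) {
  int64_t res = 1;
  for (auto i : shape) res *= i;
  return res;
}

// Hypothetical stand-in for the demo's fill loop: returns a buffer with one
// element per tensor entry, each set to `value`.
std::vector<float> MakeConstantInput(const shape_t& shape, float value) {
  return std::vector<float>(static_cast<std::size_t>(ShapeProduction(shape)),
                            value);
}
```

For the `{1, 3, 224, 224}` input used by the demo, `ShapeProduction` returns 1 * 3 * 224 * 224 = 150528, so the fill loop writes 150528 floats.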

````diff
@@ -90,15 +103,21 @@ void RunModel() {
   // 5. Get output
   std::unique_ptr<const Tensor> output_tensor(
       std::move(predictor->GetOutput(0)));
-  std::cout << "Output dim: " << output_tensor->shape()[1] << std::endl;
+  std::cout << "Output shape " << output_tensor->shape()[1] << std::endl;
   for (int i = 0; i < ShapeProduction(output_tensor->shape()); i += 100) {
-    std::cout << "Output[" << i << "]:" << output_tensor->data<float>()[i] << std::endl;
+    std::cout << "Output[" << i << "]: " << output_tensor->data<float>()[i] << std::endl;
   }
 }

 int main(int argc, char** argv) {
-  google::ParseCommandLineFlags(&argc, &argv, true);
-  RunModel();
+  if (argc < 2) {
+    std::cerr << "[ERROR] usage: ./" << argv[0] << " naive_buffer_model_dir\n";
+    exit(1);
+  }
+  std::string model_dir = argv[1];
+  RunModel(model_dir);
   return 0;
 }
 ```
````
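The new `main` replaces gflags parsing with a plain `argc` check, and the output loop prints every 100th element. The sketch below isolates both pieces of logic so they can be exercised without the inference library. `ParseModelDir` and `SampledIndices` are hypothetical names chosen for this illustration and do not appear in the demo.

```cpp
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical helper mirroring the new main(): the model directory is the
// first positional argument. Returns false when it is missing.
bool ParseModelDir(int argc, const char* const* argv, std::string* model_dir) {
  if (argc < 2) return false;
  *model_dir = argv[1];
  return true;
}

// Indices visited by a loop of the form
//   for (i = 0; i < count; i += stride)
// which is how the demo samples the output tensor (stride 100).
std::vector<int64_t> SampledIndices(int64_t count, int64_t stride) {
  std::vector<int64_t> indices;
  for (int64_t i = 0; i < count; i += stride) indices.push_back(i);
  return indices;
}
```

For an output of 1000 elements (an assumed size, chosen only for this example), `SampledIndices(1000, 100)` yields the ten indices 0, 100, ..., 900.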

docs/api_reference/cxx_api_doc.md @ 0cc72c42

````diff
@@ -277,13 +277,13 @@ config.set_power_mode(LITE_POWER_HIGH);
 std::shared_ptr<PaddlePredictor> predictor = CreatePaddlePredictor<MobileConfig>(config);
 ```

-### `set_model_from_file(model_dir)`
+### `set_model_from_file(model_file)`

 Sets the model file. Use this when the model needs to be loaded from disk.

 Parameters:

-- `model_dir(std::string)` - path to the model file
+- `model_file(std::string)` - path to the model file

 Returns: `None`
````
