add introduction about abstractions and features in README and logo (#31)

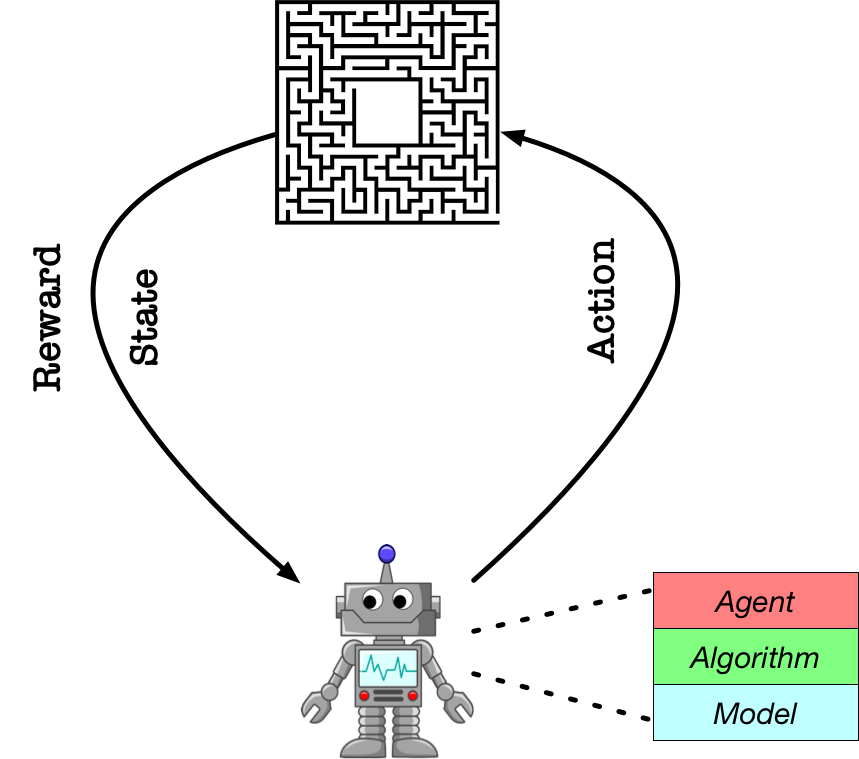

* Update README.md
* Update README.md
* add diagram/logo
* Update README.md
* Update README.md
* Update README.md
* Update README.md
* Update README.md

.github/PARL-logo.png

0 → 100644

27.4 KB

.github/abstractions.png

0 → 100644

88.6 KB