add the tutorial: getting started (#97)

* add the tutorial: getting started * fix commemt * minor change

Showing

docs/getting_started.rst

0 → 100644

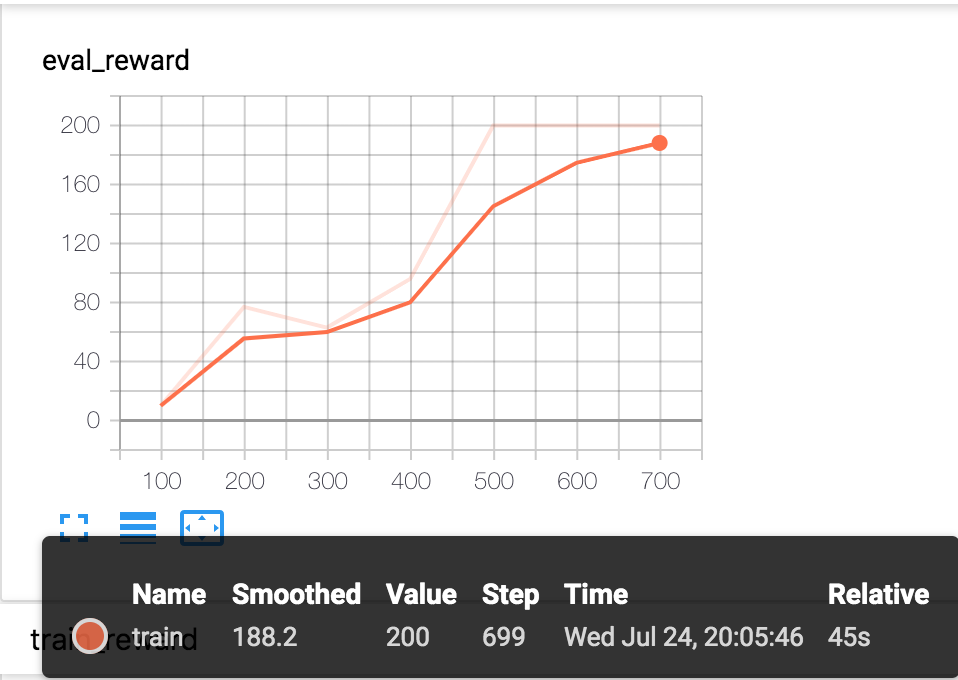

docs/images/quickstart.png

0 → 100644

71.2 KB

docs/tutorial.rst

已删除

100644 → 0