add sac (#188)

* add sac

Showing

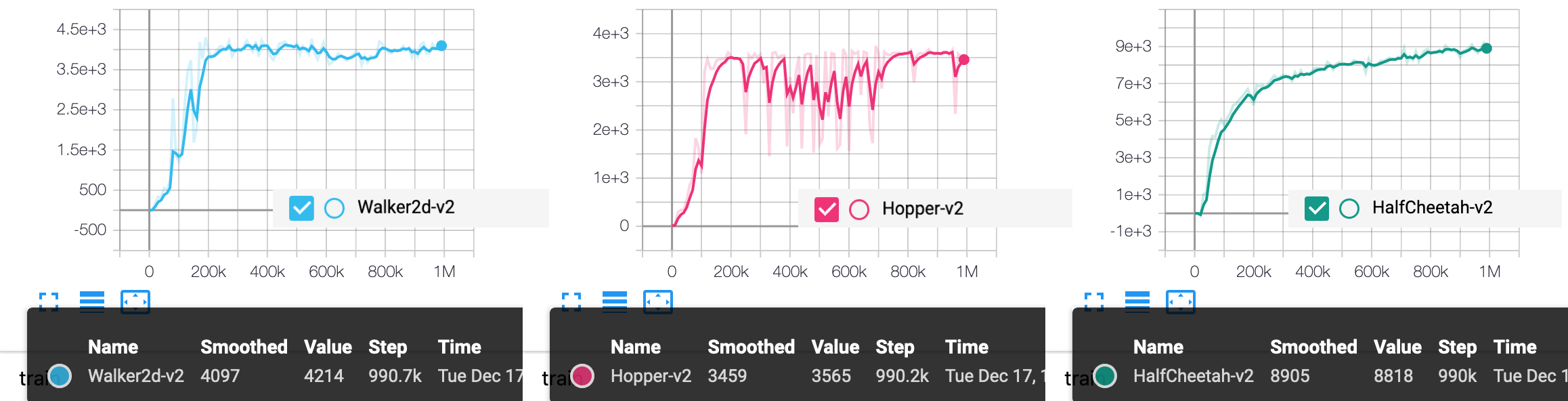

New files (mode 100644):

* examples/SAC/.benchmark/merge.png (186.6 KB)
* examples/SAC/README.md
* examples/SAC/mujoco_agent.py
* examples/SAC/mujoco_model.py
* examples/SAC/train.py
* parl/algorithms/fluid/sac.py