fix minor problems in the docs (#138)

* fix minor problems in the docs
* typo
* remove pip source
* fix monitor
* add performance of A2C
* Update README.md
* modify logger for GPU detection


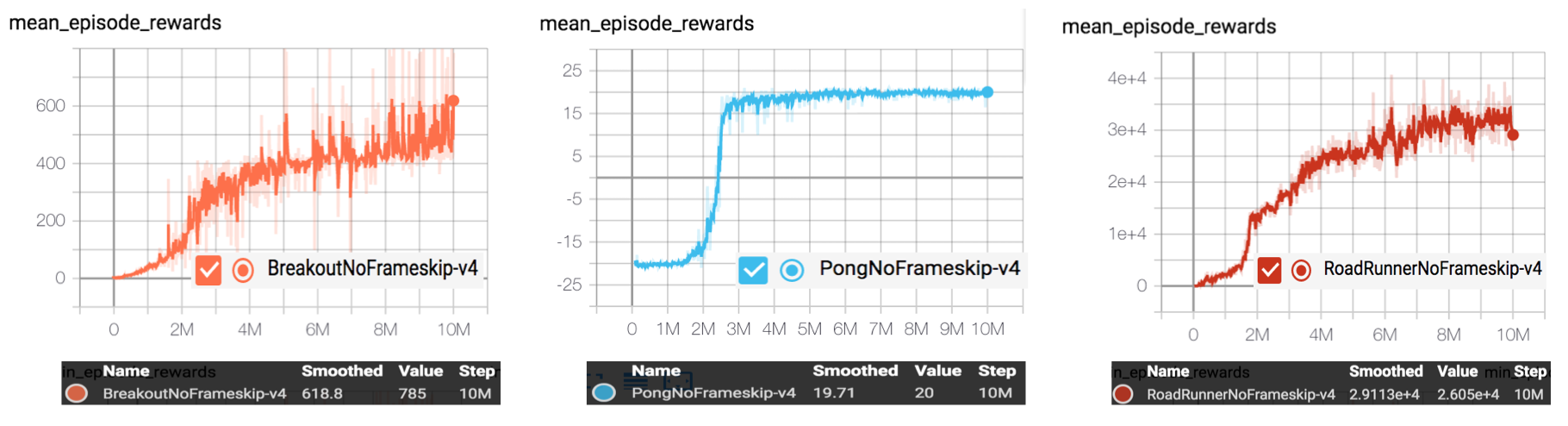

examples/A2C/learning_curve.png

0 → 100644

288.5 KB

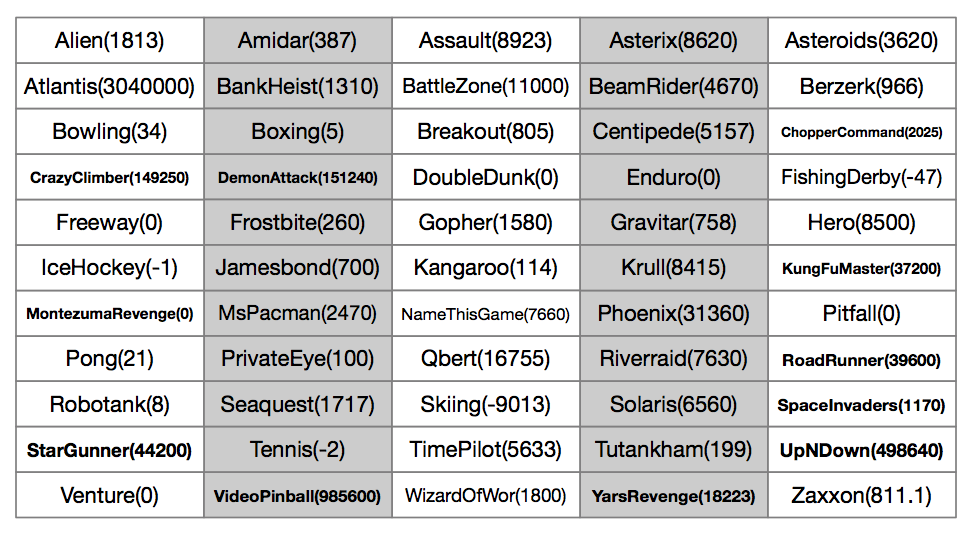

examples/A2C/result.png

0 → 100644

203.5 KB