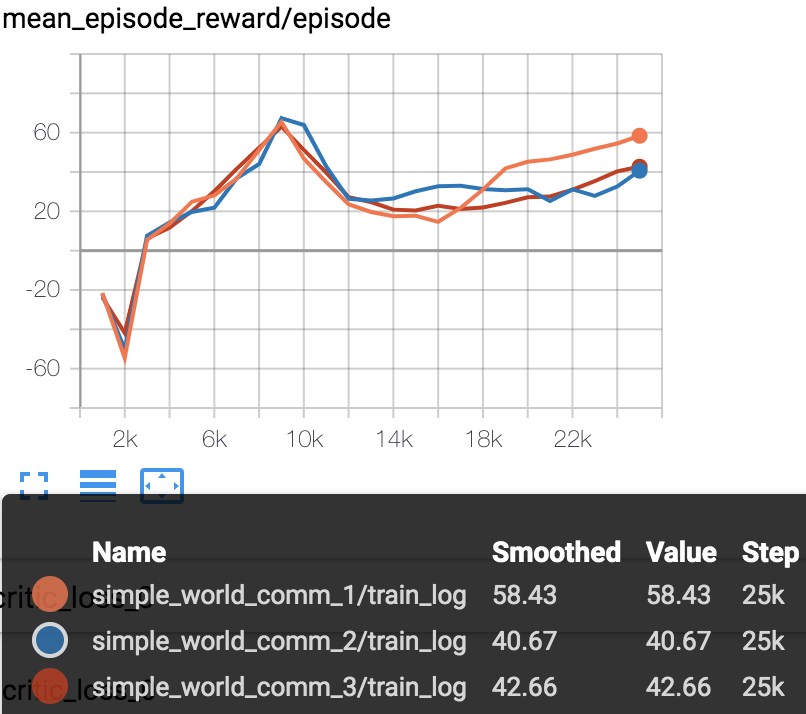

add maddpg example (#200)

* add maddpg example
* format with yapf
* fix coding style
* fix coding style
* unittest without import multiagent env
* update maddpg code
* update maddpg readme
* add copyright comments

* examples/MADDPG/README.md (new file, mode 0 → 100644)
* examples/MADDPG/simple_agent.py (new file, mode 0 → 100644)
* examples/MADDPG/simple_model.py (new file, mode 0 → 100644)
* examples/MADDPG/train.py (new file, mode 0 → 100644)
* parl/algorithms/fluid/maddpg.py (new file, mode 0 → 100644)
* parl/env/multiagent_simple_env.py (new file, mode 0 → 100644)