add design doc (#13)

add design doc

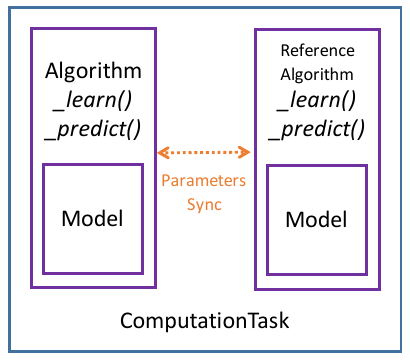

docs/ct.png

0 → 100644

19.0 KB

docs/design_doc.md

0 → 100644

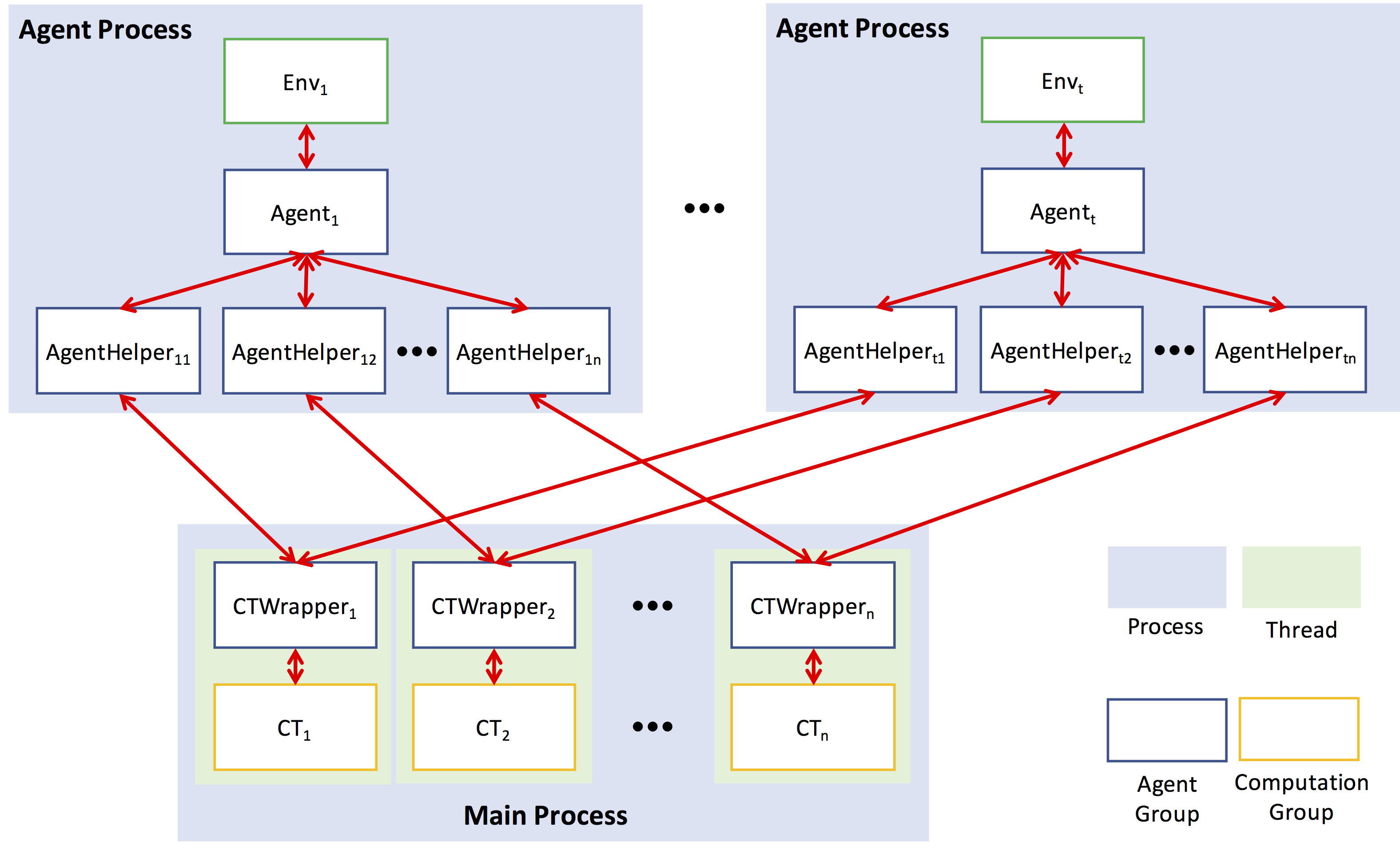

docs/framework.png

0 → 100644

328.6 KB

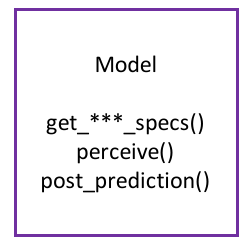

docs/model.png

0 → 100644

10.5 KB

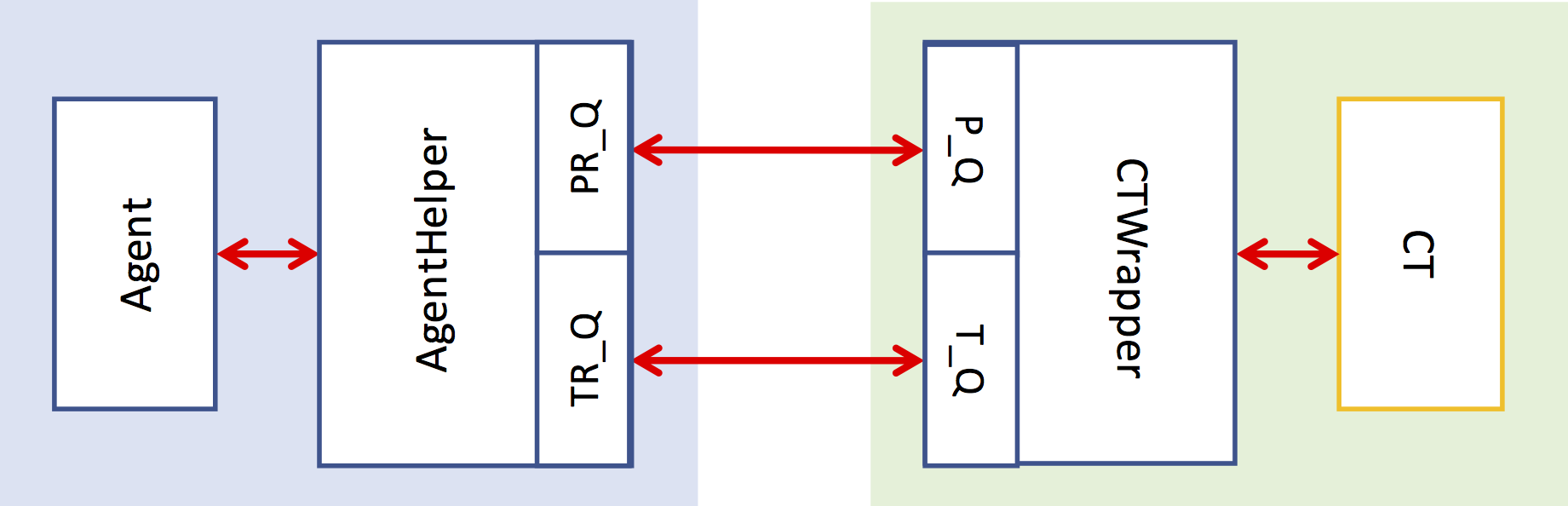

docs/relation.png

0 → 100644

61.6 KB

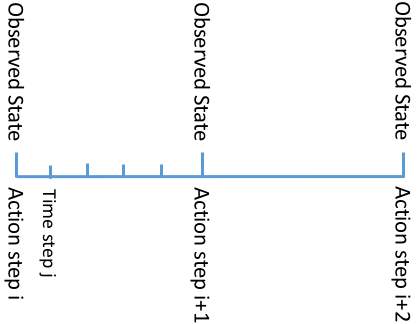

docs/step.png

0 → 100644

16.9 KB