add simple dqn demo (#254)

* add simple dqn * Update README.md * Update train.py * update * update image in README * update readme * simplify * yapf * Update README.md * Update README.md * Update README.md * Update train.py * yapf

Showing

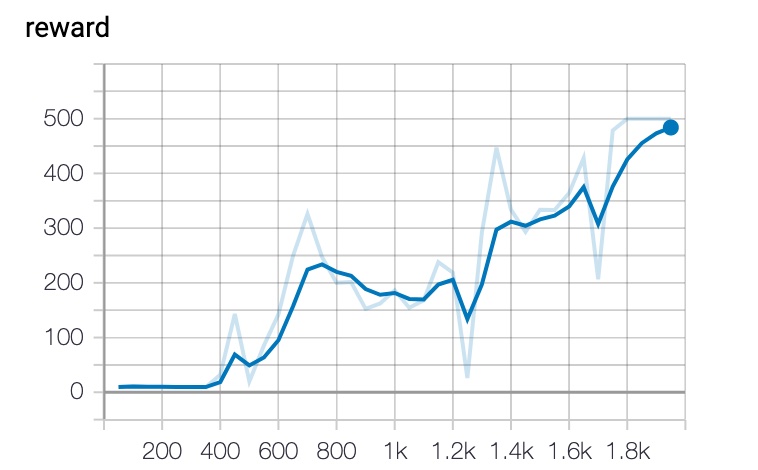

examples/DQN/cartpole.jpg

0 → 100644

64.3 KB

examples/DQN/cartpole_agent.py

0 → 100755

examples/DQN/cartpole_model.py

0 → 100755

examples/DQN/replay_memory.py

100644 → 100755

examples/DQN/train.py

100644 → 100755

examples/DQN_variant/README.md

0 → 100644

文件已移动

examples/DQN_variant/train.py

0 → 100644