单机单卡能跑通, 单机多卡会在fluid.ParallelExecutor处报SegmentationFault

Created by: daizh

[问题描述] 运行script/run_xnli.sh时, 单机单卡能够顺利运行, 但多卡会在fluid.ParallelExecutor处报SegmentationFault

[报错位置] run_classifier.py中

167 if args.do_train:

168 print("do_train:")

169 exec_strategy = fluid.ExecutionStrategy()

170 if args.use_fast_executor:

171 exec_strategy.use_experimental_executor = True

172 exec_strategy.num_threads = dev_count

173 exec_strategy.num_iteration_per_drop_scope = args.num_iteration_per_drop_scope

174

175 print("init fluid.ParallelExecutor") # 此处之后报错

176 train_exe = fluid.ParallelExecutor(

177 use_cuda=args.use_cuda,

178 loss_name=graph_vars["loss"].name,

179 exec_strategy=exec_strategy,

180 main_program=train_program)

181

182 print(" train_pyreader.decorate_tensor_provider(train_data_generator)")

183 train_pyreader.decorate_tensor_provider(train_data_generator)[错误信息]

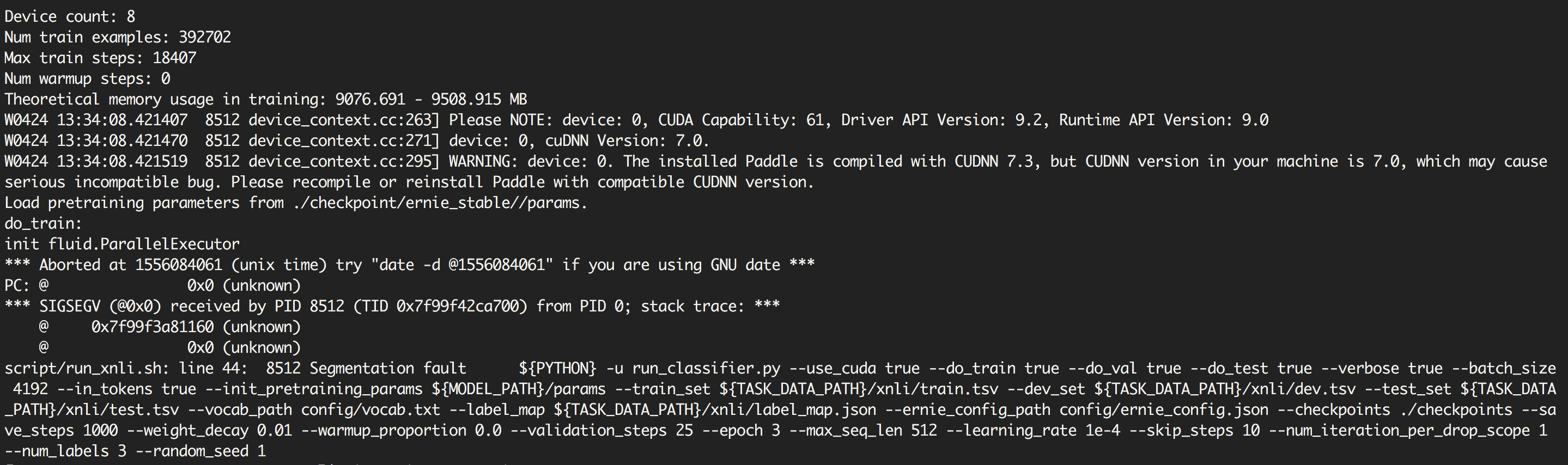

Theoretical memory usage in training: 9076.691 - 9508.915 MB W0424 13:34:08.421407 8512 device_context.cc:263] Please NOTE: device: 0, CUDA Capability: 61, Driver API Version: 9.2, Runtime API Version: 9.0 W0424 13:34:08.421470 8512 device_context.cc:271] device: 0, cuDNN Version: 7.0. W0424 13:34:08.421519 8512 device_context.cc:295] WARNING: device: 0. The installed Paddle is compiled with CUDNN 7.3, but CUDNN version in your machine is 7.0, which may cause serious incompatible bug. Please recompile or reinstall Paddle with compatible CUDNN version. Load pretraining parameters from ./checkpoint/ernie_stable//params. do_train: init fluid.ParallelExecutor

* Aborted at 1556084061 (unix time) try "date -d @1556084061" if you are using GNU date *

PC: @ 0x0 (unknown)* SIGSEGV (@0x0) received by PID 8512 (TID 0x7f99f42ca700) from PID 0; stack trace: *

@ 0x7f99f3a81160 (unknown) @ 0x0 (unknown) script/run_xnli.sh: line 44: 8512 Segmentation fault ${PYTHON} -u run_classifier.py --use_cuda true --do_train true --do_val true --do_test true --verbose true --batch_size 4192 --in_tokens true --init_pretraining_params ${MODEL_PATH}/params --train_set ${TASK_DATA_PATH}/xnli/train.tsv --dev_set ${TASK_DATA_PATH}/xnli/dev.tsv --test_set ${TASK_DATA_PATH}/xnli/test.tsv --vocab_path config/vocab.txt --label_map ${TASK_DATA_PATH}/xnli/label_map.json --ernie_config_path config/ernie_config.json --checkpoints ./checkpoints --save_steps 1000 --weight_decay 0.01 --warmup_proportion 0.0 --validation_steps 25 --epoch 3 --max_seq_len 512 --learning_rate 1e-4 --skip_steps 10 --num_iteration_per_drop_scope 1 --num_labels 3 --random_seed 1[运行环境]

paddlepaddle-gpu==1.3.0.post85

cuda-9.0

cudnn_v7[运行脚本(script/run_xnli.sh)]

export FLAGS_sync_nccl_allreduce=1

export CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7

export LD_LIBRARY_PATH=./env/lib/:$LD_LIBRARY_PATH

export LD_LIBRARY_PATH=/home/work/cuda-9.0/lib64/:/home/work/cudnn/cudnn_v7/cuda/lib64:/home/work/cuda-9.0/extras/CUPTI/lib64/:$LD_LIBRARY_PATH

TASK_DATA_PATH=./task_data

MODEL_PATH=./checkpoint/ernie_stable/

PYTHON=./env/python3/bin/python3

${PYTHON} -u run_classifier.py \

--use_cuda true \

--do_train true \

--do_val true \

--do_test true \

--verbose true \

--batch_size 8192 \

--in_tokens true \

--init_pretraining_params ${MODEL_PATH}/params \

--train_set ${TASK_DATA_PATH}/xnli/train.tsv \

--dev_set ${TASK_DATA_PATH}/xnli/dev.tsv \

--test_set ${TASK_DATA_PATH}/xnli/test.tsv \

--vocab_path config/vocab.txt \

--label_map ${TASK_DATA_PATH}/xnli/label_map.json \

--ernie_config_path config/ernie_config.json \

--checkpoints ./checkpoints \

--save_steps 1000 \

--weight_decay 0.01 \

--warmup_proportion 0.0 \

--validation_steps 25 \

--epoch 3 \

--max_seq_len 512 \

--learning_rate 1e-4 \

--skip_steps 10 \

--num_iteration_per_drop_scope 1 \

--num_labels 3 \

--random_seed 1