Merge branch 'master' of https://github.com/PaddlePaddle/AutoDL into add_more_config_for_lrc

Showing

.gitignore

0 → 100644

.pre-commit-config.yaml

0 → 100644

.style.yapf

0 → 100644

.travis/precommit.sh

0 → 100644

AutoDL Design/README.md

0 → 100644

AutoDL Design/__init__.py

0 → 100644

AutoDL Design/autodl.py

0 → 100644

AutoDL Design/autodl_agent.py

0 → 100755

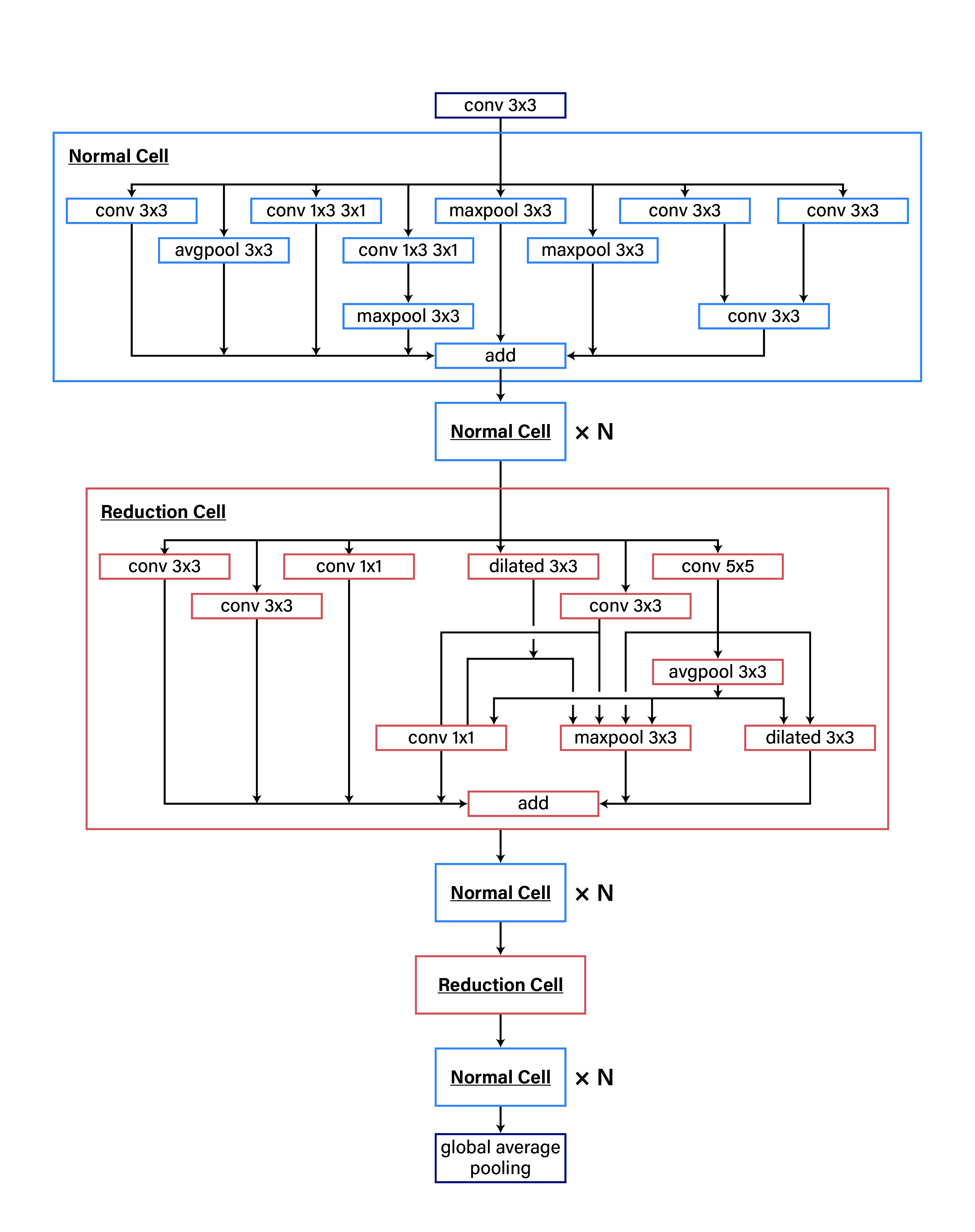

AutoDL Design/img/cnn_net.png

0 → 100644

322.7 KB

186.3 KB

AutoDL Design/main.py

0 → 100644

AutoDL Design/policy_model.py

0 → 100644

AutoDL Design/run.sh

0 → 100755

AutoDL Design/simple_main.py

0 → 100755

AutoDL Design/utils.py

0 → 100644

LRC/labels.npz

0 → 100644

文件已添加

LRC/paddle_predict/__init__.py

0 → 100644

LRC/run_cifar.sh

0 → 100644

LRC/run_cifar_test.sh

0 → 100644

LRC/test_mixup.py

0 → 100644

README.md

100644 → 100755