Merge branch 'docs/usage' into 'master'

feat: many updates, see details in long description

See merge request platform/CloudNative4AI/cluster-lifecycle/di-orchestrator!60

Showing

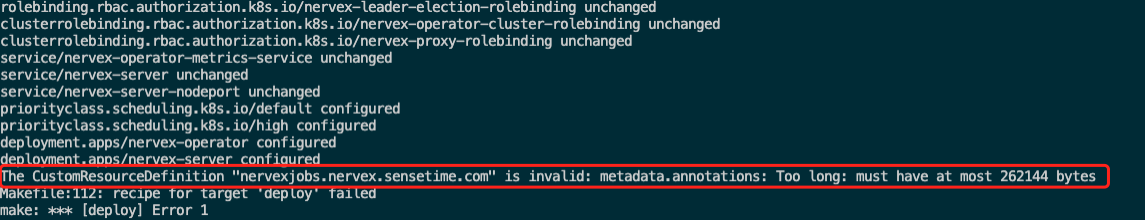

docs/images/deploy-failed.png: deleted (mode 100644 → 0), 72.5 KB

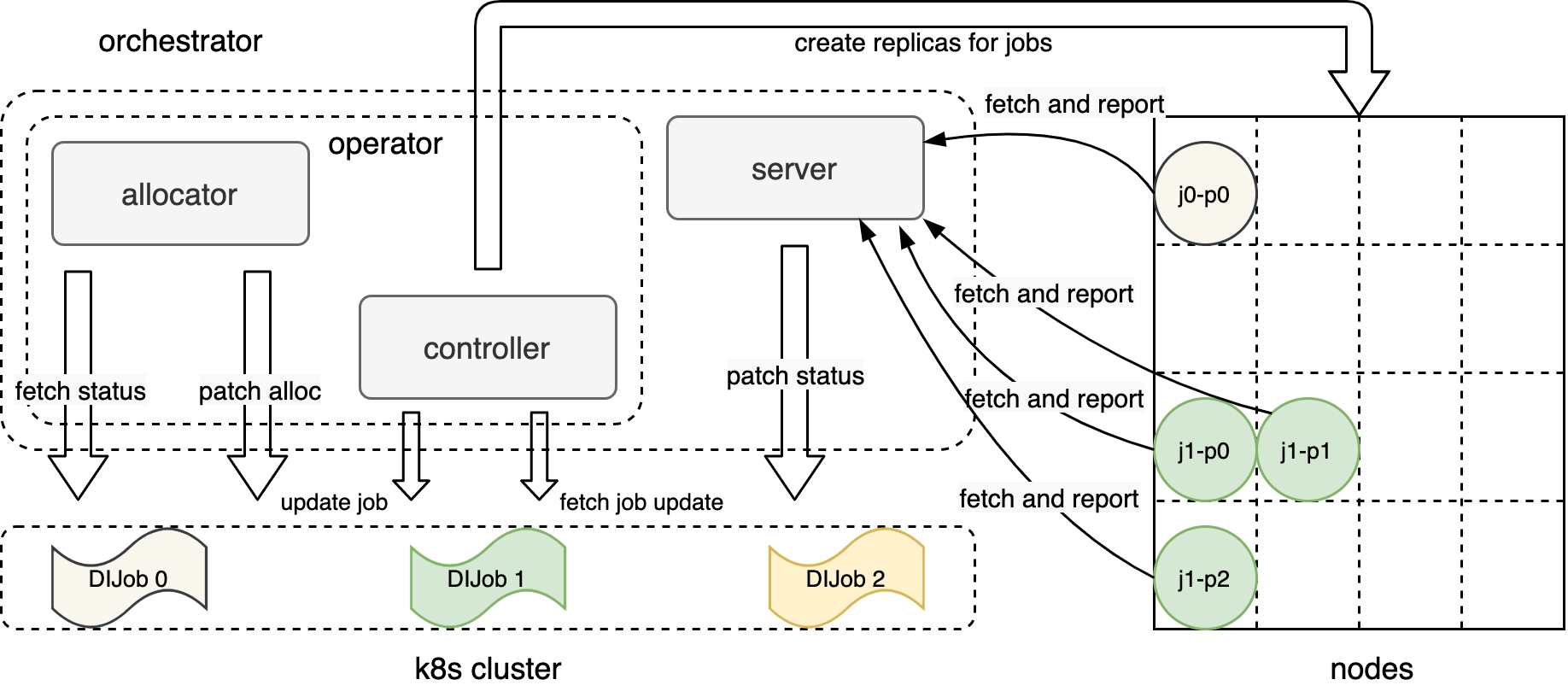

docs/images/di-api.png: deleted (mode 100644 → 0), 235.0 KB

docs/images/di-arch.svg: deleted (mode 100644 → 0). The source diff is too large to display; view the blob instead.

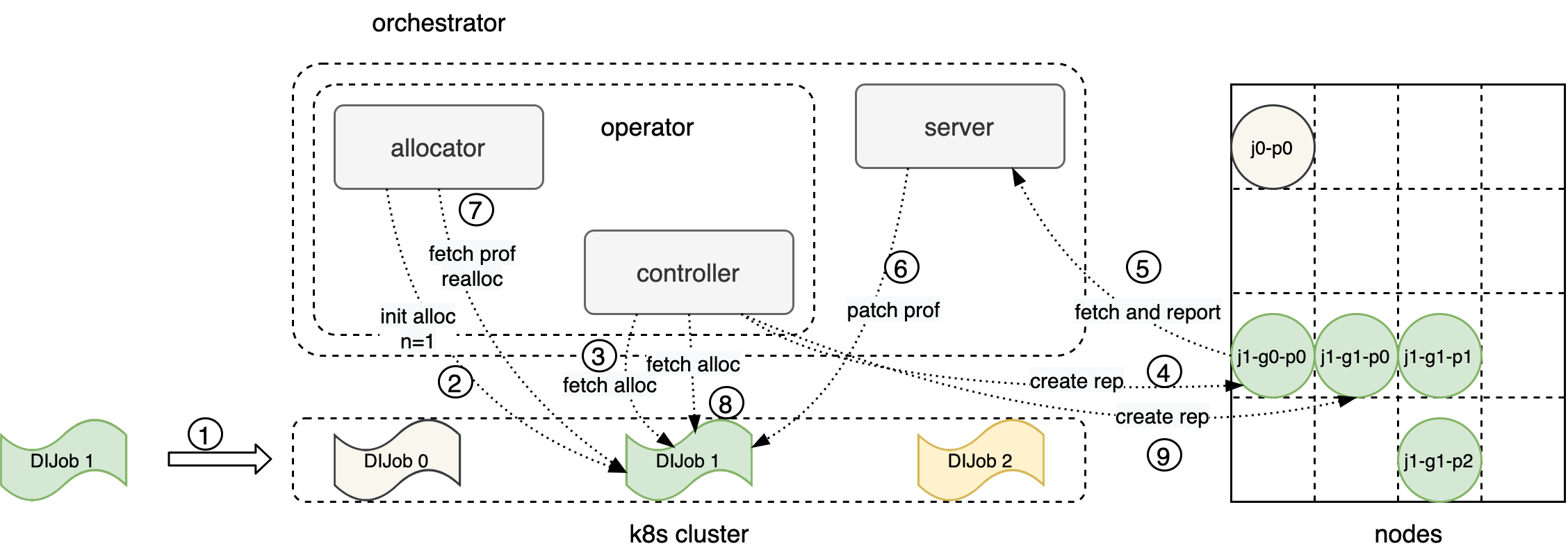

docs/images/di-engine-arch.png: added (mode 0 → 100644), 201.2 KB

221.9 KB

184.6 KB

| Change | Line |
| --- | --- |
| ... | `@@ -3,8 +3,7 @@ module opendilab.org/di-orchestrator` |
| | `go 1.16` |
| | `require (` |
| | `github.com/deckarep/golang-set v1.7.1` |
| − | `github.com/gin-gonic/gin v1.7.7 // indirect` |
| + | `github.com/gin-gonic/gin v1.7.7` |
| | `github.com/go-logr/logr v0.4.0` |
| | `github.com/onsi/ginkgo v1.16.4` |
| | `github.com/onsi/gomega v1.15.0` |
| ... | ... |
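The visible `go.mod` change drops the `// indirect` marker from `github.com/gin-gonic/gin`. In Go modules, `// indirect` marks a requirement that no package in the main module imports directly, so removing it typically means some package in this module now imports gin itself (the diff shown here does not say which one). As a minimal, stdlib-only sketch, the hypothetical `parseRequireBlock` helper below reads a multi-line `require ( ... )` block and reports the marker for each entry. It is a simplified illustration, not a substitute for a real parser such as `golang.org/x/mod/modfile`.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// requireEntry is one module path/version pair from a go.mod require block.
// (Hypothetical type, for illustration only.)
type requireEntry struct {
	Path     string
	Version  string
	Indirect bool
}

// parseRequireBlock returns the entries inside multi-line `require ( ... )`
// blocks. Simplified sketch: it ignores single-line `require x v` statements,
// replace/exclude directives, and any trailing comment other than "// indirect".
func parseRequireBlock(gomod string) []requireEntry {
	var entries []requireEntry
	inBlock := false
	sc := bufio.NewScanner(strings.NewReader(gomod))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		switch {
		case line == "require (":
			inBlock = true
		case inBlock && line == ")":
			inBlock = false
		case inBlock && line != "":
			indirect := false
			// Split off a trailing comment and check for the indirect marker.
			if i := strings.Index(line, "//"); i >= 0 {
				indirect = strings.TrimSpace(line[i+2:]) == "indirect"
				line = strings.TrimSpace(line[:i])
			}
			fields := strings.Fields(line)
			if len(fields) == 2 { // skip full-line comments and malformed lines
				entries = append(entries, requireEntry{Path: fields[0], Version: fields[1], Indirect: indirect})
			}
		}
	}
	return entries
}

func main() {
	// Excerpts of the require block before and after this merge request.
	before := "require (\n\tgithub.com/deckarep/golang-set v1.7.1\n\tgithub.com/gin-gonic/gin v1.7.7 // indirect\n)"
	after := "require (\n\tgithub.com/deckarep/golang-set v1.7.1\n\tgithub.com/gin-gonic/gin v1.7.7\n)"
	for _, e := range parseRequireBlock(before) {
		fmt.Printf("before: %s %s indirect=%t\n", e.Path, e.Version, e.Indirect)
	}
	for _, e := range parseRequireBlock(after) {
		fmt.Printf("after:  %s %s indirect=%t\n", e.Path, e.Version, e.Indirect)
	}
}
```

Running it reports `indirect=true` for gin in the "before" excerpt and `indirect=false` in the "after" excerpt; `golang-set` is direct in both.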

hack/update_replicas.go: added (mode 0 → 100644)