Deploying to gh-pages from @ 87705039902cb91c9eae26ea5842f0aa07160226 🚀

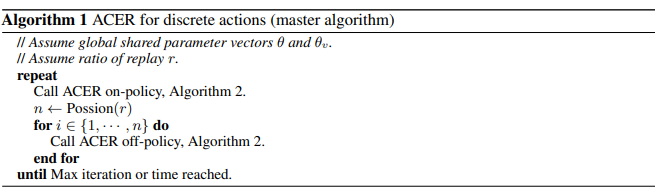

_images/ACER_alg1.png

0 → 100644

27.3 KB

_images/ACER_alg2.png

0 → 100644

106.8 KB

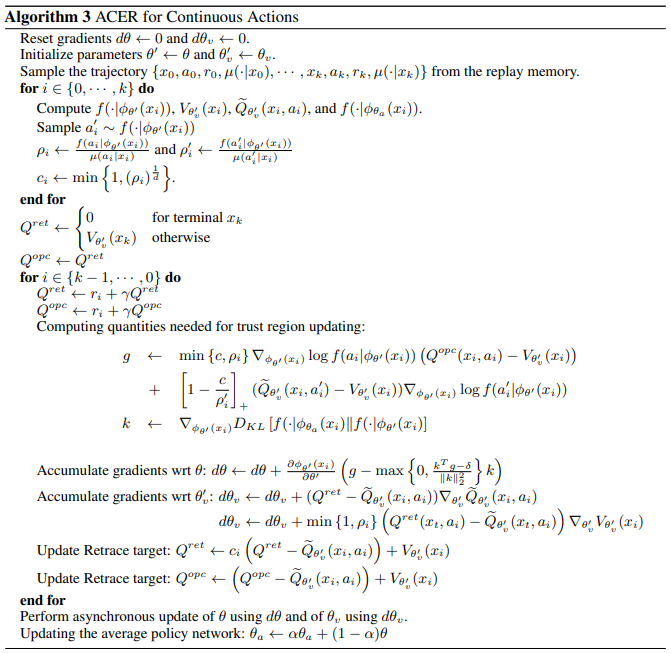

_images/ACER_alg3.png

0 → 100644

106.0 KB

_modules/ding/policy/acer.html

0 → 100644

This diff is collapsed.

_sources/hands_on/acer.rst.txt

0 → 100644

hands_on/acer.html

0 → 100644

This diff is collapsed.

This file type cannot be previewed.

This diff is collapsed.