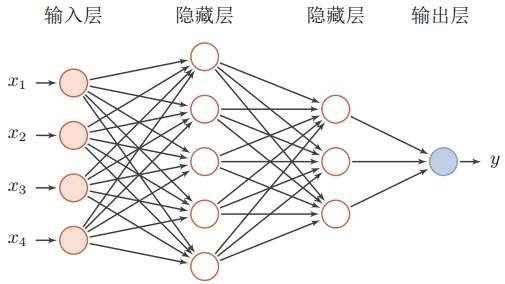

feedforward fashion-mnist

feedforward/README.md

0 → 100644

This diff is collapsed.

feedforward/images/input_1.png

0 → 100644

112.4 KB

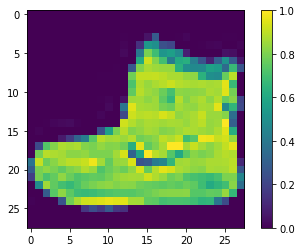

feedforward/images/output_1.png

0 → 100644

7.4 KB

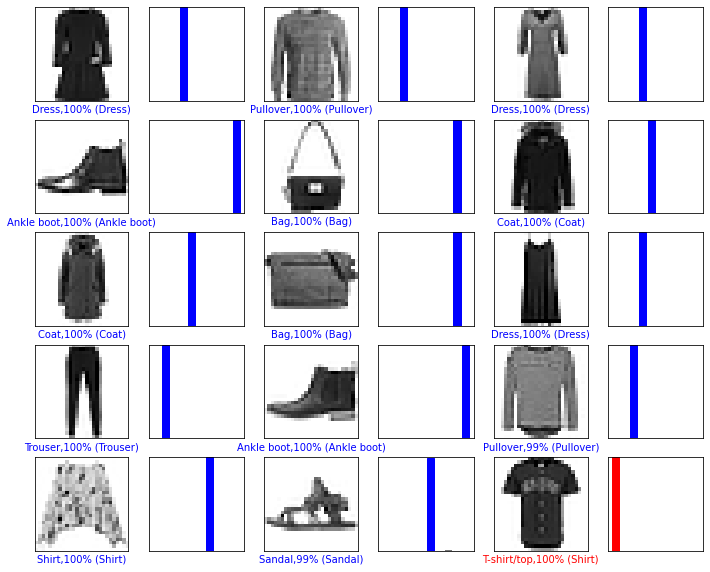

feedforward/images/output_2.png

0 → 100644

41.0 KB

feedforward/main.py

0 → 100644