!28 add nlp_lstm experiment

Merge pull request !28 from zhengnengjin2/master

nlp_lstm/README.md

0 → 100644

This diff is collapsed.

nlp_lstm/config.py

0 → 100644

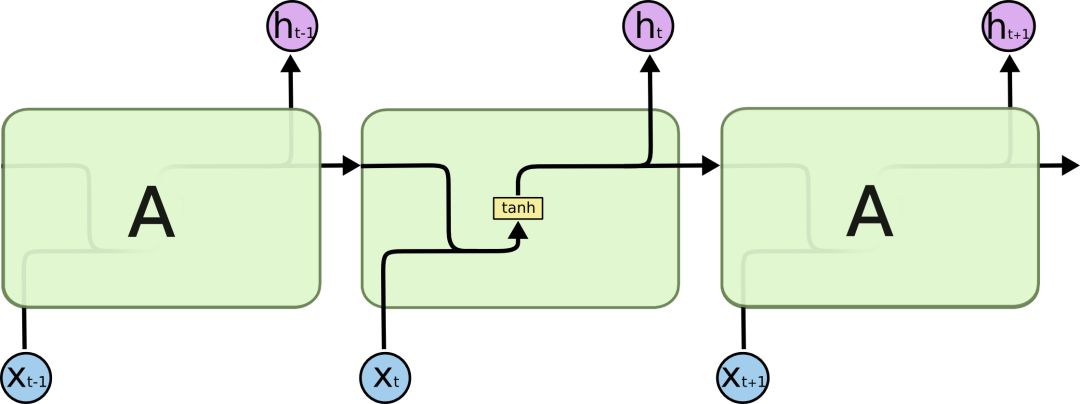

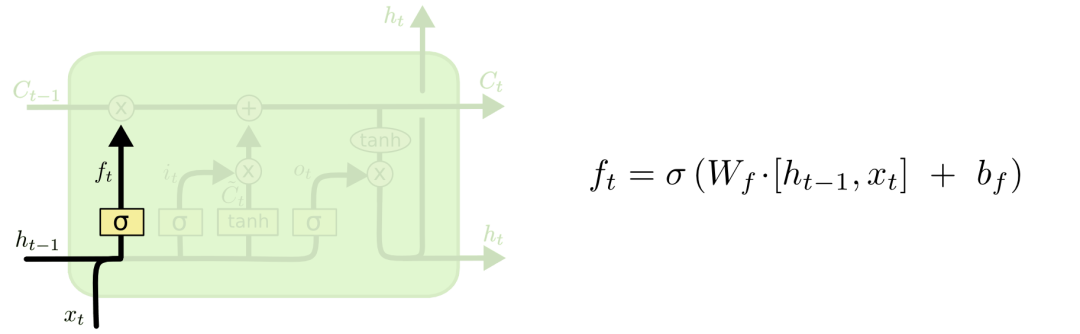

nlp_lstm/images/LSTM1.png

0 → 100644

25.0 KB

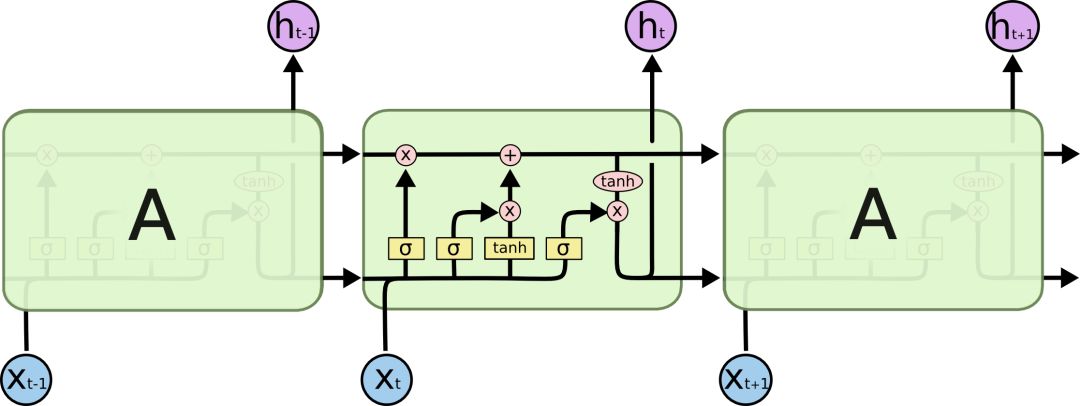

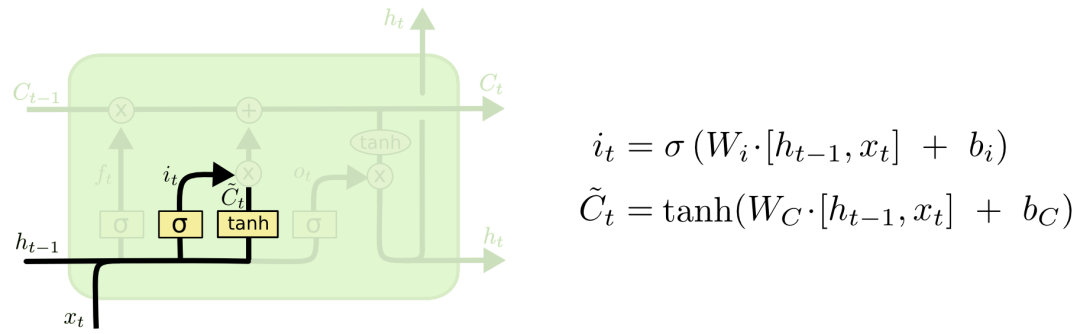

nlp_lstm/images/LSTM2.png

0 → 100644

34.9 KB

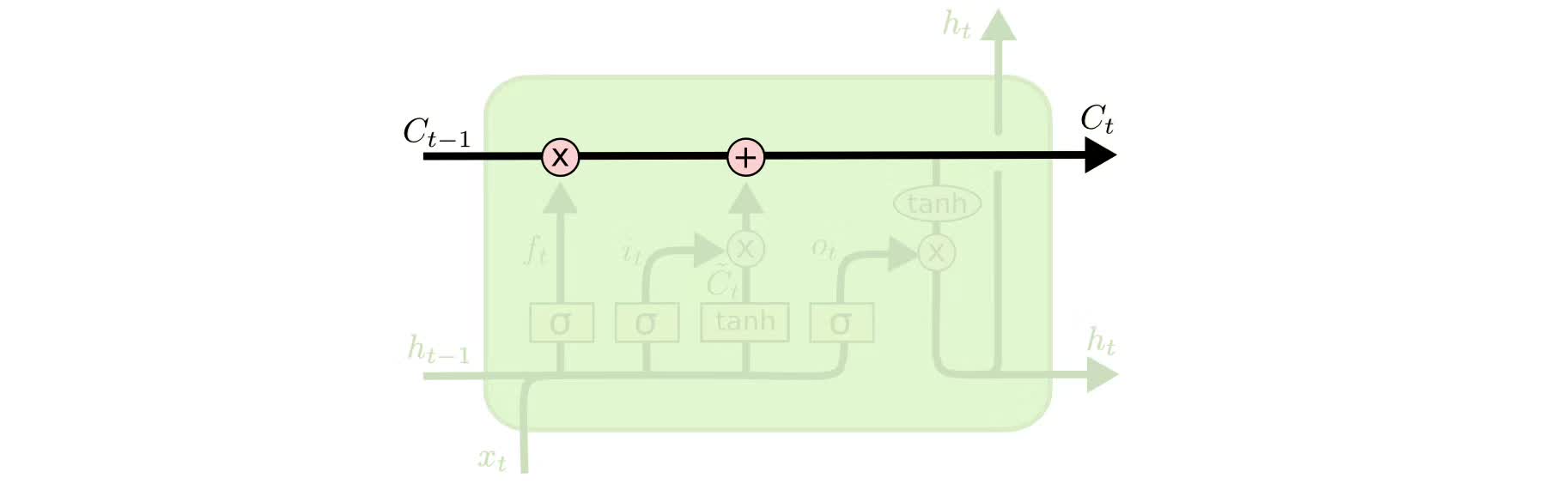

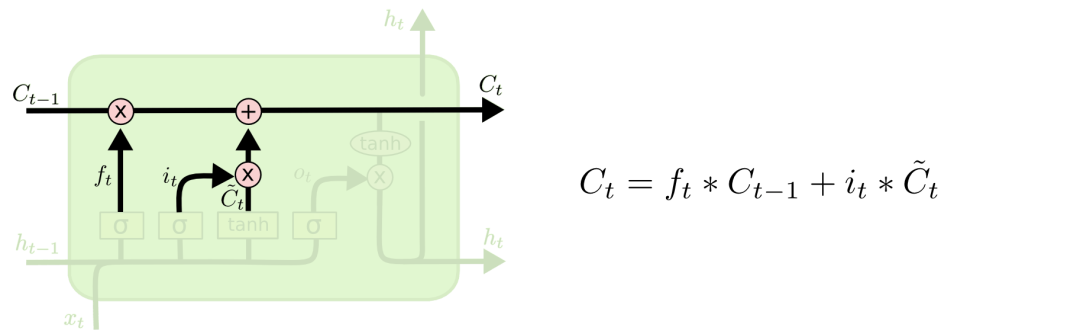

nlp_lstm/images/LSTM3.png

0 → 100644

10.9 KB

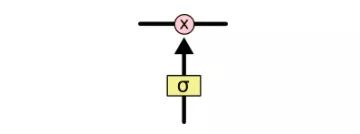

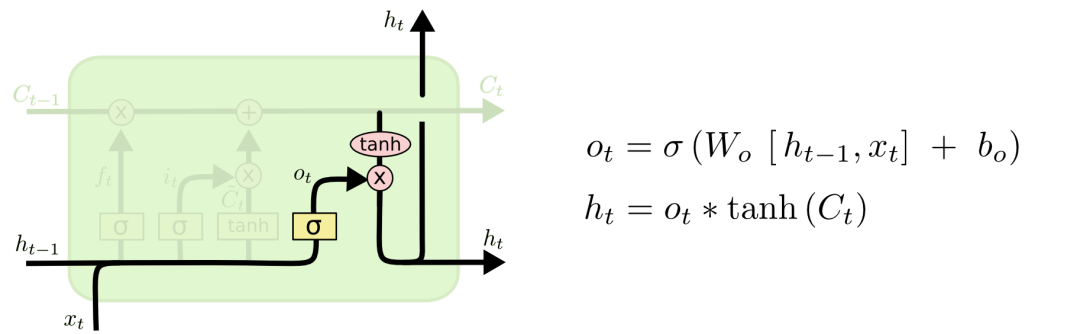

nlp_lstm/images/LSTM4.png

0 → 100644

27.4 KB

nlp_lstm/images/LSTM5.png

0 → 100644

2.2 KB

nlp_lstm/images/LSTM6.png

0 → 100644

38.7 KB

nlp_lstm/images/LSTM7.png

0 → 100644

47.2 KB

nlp_lstm/images/LSTM8.png

0 → 100644

41.8 KB

nlp_lstm/images/LSTM9.png

0 → 100644

47.6 KB

nlp_lstm/main.py

0 → 100644