[skip ci] Update readme and requirements.txt in milvus_benchmark (#7205)

Signed-off-by: Nzhenwu <zhenxiang.li@zilliz.com>

Showing

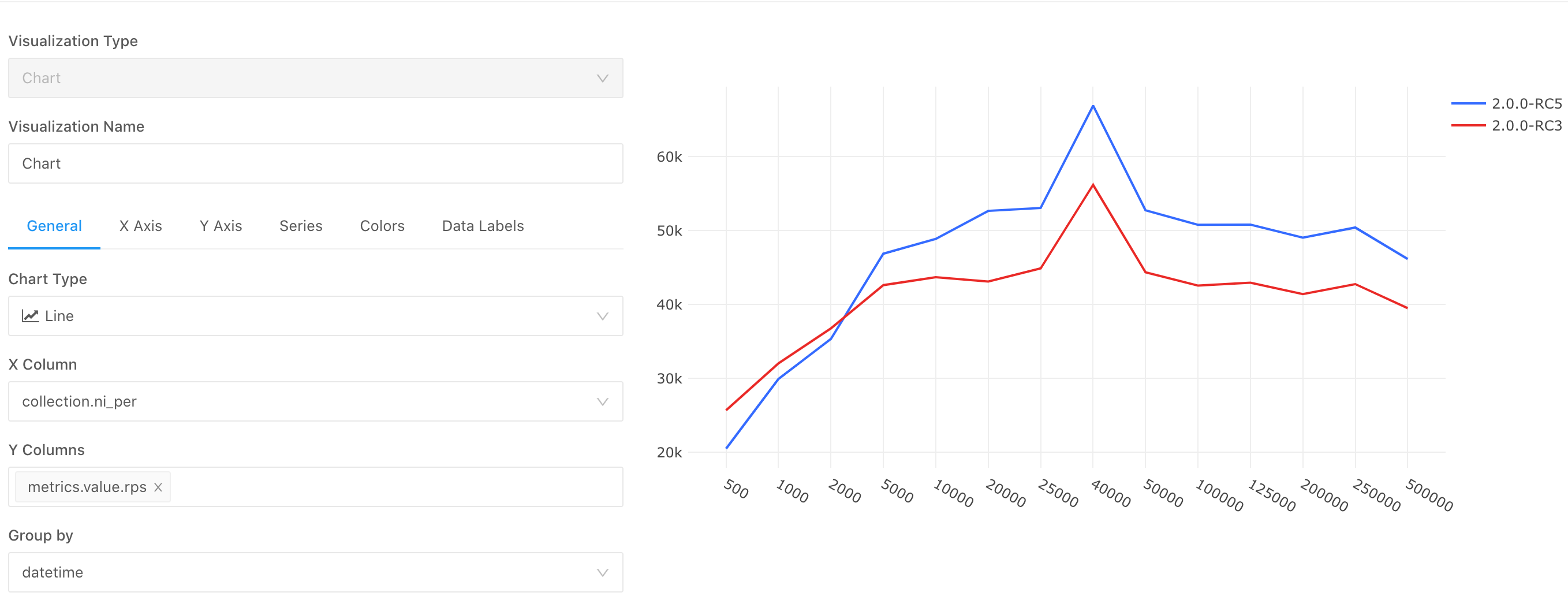

tests/benchmark/asserts/dash.png

0 → 100644

195.2 KB

Signed-off-by: Nzhenwu <zhenxiang.li@zilliz.com>

195.2 KB