Merge branch 'dygraph' of https://github.com/PaddlePaddle/PaddleOCR into trt_cpp

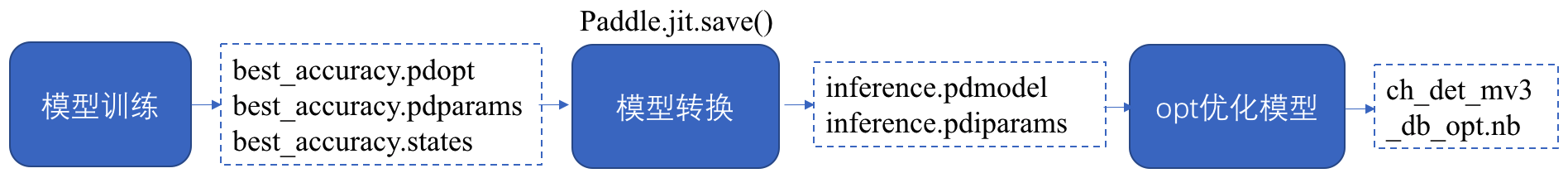

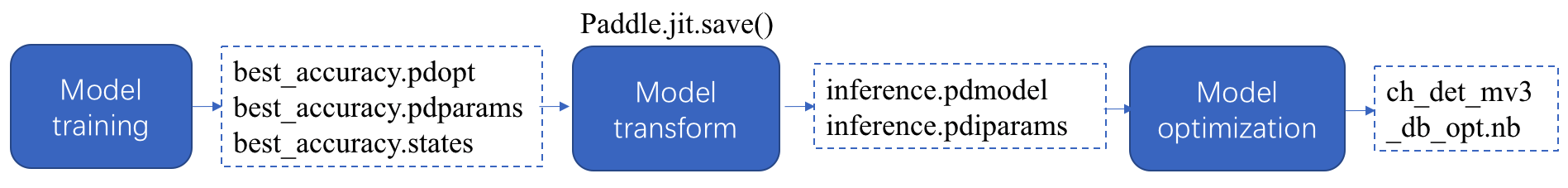

doc/imgs/model_prod_flow_ch.png

New file (mode 0 → 100644)

64.6 KB

63.0 KB

60.6 KB

| Dependency |
| --- |
| shapely |
| imgaug |
| scikit-image==0.17.2 |
| imgaug==0.4.0 |
| pyclipper |
| lmdb |
| opencv-python==4.2.0.32 |
| ... |