- 23 3月, 2022 2 次提交

-

-

由 Akanksha Mahajan 提交于

Summary: Add async_io Read option in db_bench Pull Request resolved: https://github.com/facebook/rocksdb/pull/9735 Test Plan: ./db_bench -use_existing_db=true -db=/tmp/prefix_scan_prefetch_main -benchmarks="seekrandom" -key_size=32 -value_size=512 -num=5000000 -use_direct_reads=true -seek_nexts=327680 -duration=120 -ops_between_duration_checks=1 -async_io=1 Reviewed By: riversand963 Differential Revision: D35058482 Pulled By: akankshamahajan15 fbshipit-source-id: 1522b638c79f6d85bb7408c67f6ab76dbabeeee7

-

由 Mark Callaghan 提交于

Summary: …in order This fixes https://github.com/facebook/rocksdb/issues/9650 For db_bench --benchmarks=fillseq --num_multi_db=X it loads databases in sequence rather than randomly choosing a database per Put. The benefits are: 1) avoids long delays between flushing memtables 2) avoids flushing memtables for all of them at the same point in time 3) puts same number of keys per database so that query tests will find keys as expected Pull Request resolved: https://github.com/facebook/rocksdb/pull/9713 Test Plan: Using db_bench.1 without the change and db_bench.2 with the change: for i in 1 2; do rm -rf /data/m/rx/* ; time ./db_bench.$i --db=/data/m/rx --benchmarks=fillseq --num_multi_db=4 --num=10000000; du -hs /data/m/rx ; done --- without the change fillseq : 3.188 micros/op 313682 ops/sec; 34.7 MB/s real 2m7.787s user 1m52.776s sys 0m46.549s 2.7G /data/m/rx --- with the change fillseq : 3.149 micros/op 317563 ops/sec; 35.1 MB/s real 2m6.196s user 1m51.482s sys 0m46.003s 2.7G /data/m/rx Also, temporarily added a printf to confirm that the code switches to the next database at the right time ZZ switch to db 1 at 10000000 ZZ switch to db 2 at 20000000 ZZ switch to db 3 at 30000000 for i in 1 2; do rm -rf /data/m/rx/* ; time ./db_bench.$i --db=/data/m/rx --benchmarks=fillseq,readrandom --num_multi_db=4 --num=100000; du -hs /data/m/rx ; done --- without the change, smaller database, note that not all keys are found by readrandom because databases have < and > --num keys fillseq : 3.176 micros/op 314805 ops/sec; 34.8 MB/s readrandom : 1.913 micros/op 522616 ops/sec; 57.7 MB/s (99873 of 100000 found) --- with the change, smaller database, note that all keys are found by readrandom fillseq : 3.110 micros/op 321566 ops/sec; 35.6 MB/s readrandom : 1.714 micros/op 583257 ops/sec; 64.5 MB/s (100000 of 100000 found) Reviewed By: jay-zhuang Differential Revision: D35030168 Pulled By: mdcallag fbshipit-source-id: 2a18c4ec571d954cf5a57b00a11802a3608823ee

-

- 22 3月, 2022 1 次提交

-

-

由 Mark Callaghan 提交于

Summary: Changes: * improves monitoring by displaying average size of a Put value and average scan length * forces the minimum value size to be 10. Before this it was 0 if you didn't set the distribution parameters. * uses reasonable defaults for the distribution parameters that determine value size and scan length * includes seeks in "reads ... found" message, before this they were missing This is for https://github.com/facebook/rocksdb/issues/9672 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9711 Test Plan: Before this change: ./db_bench --benchmarks=fillseq,mixgraph --mix_get_ratio=50 --mix_put_ratio=25 --mix_seek_ratio=25 --num=100000 --value_k=0.2615 --value_sigma=25.45 --iter_k=2.517 --iter_sigma=14.236 fillseq : 4.289 micros/op 233138 ops/sec; 25.8 MB/s mixgraph : 18.461 micros/op 54166 ops/sec; 755.0 MB/s ( Gets:50164 Puts:24919 Seek:24917 of 50164 in 75081 found) After this change: ./db_bench --benchmarks=fillseq,mixgraph --mix_get_ratio=50 --mix_put_ratio=25 --mix_seek_ratio=25 --num=100000 --value_k=0.2615 --value_sigma=25.45 --iter_k=2.517 --iter_sigma=14.236 fillseq : 3.974 micros/op 251553 ops/sec; 27.8 MB/s mixgraph : 16.722 micros/op 59795 ops/sec; 833.5 MB/s ( Gets:50164 Puts:24919 Seek:24917, reads 75081 in 75081 found, avg size: 36.0 value, 504.9 scan) Reviewed By: jay-zhuang Differential Revision: D35030190 Pulled By: mdcallag fbshipit-source-id: d8f555f28d869f752ddb674a524108884511b151

-

- 19 3月, 2022 2 次提交

-

-

由 Peter Dillinger 提交于

Summary: The goal of this change is to allow changes to the "current" (in FileSystem) file temperatures to feed back into DB metadata, so that they can inform decisions and stats reporting. In part because of modular code factoring, it doesn't seem easy to do this automagically, where opening an SST file and observing current Temperature different from expected would trigger a change in metadata and DB manifest write (essentially giving the deep read path access to the write path). It is also difficult to do this while the DB is open because of the limitations of LogAndApply. This change allows updating file temperature metadata on a closed DB using an experimental utility function UpdateManifestForFilesState() or `ldb update_manifest --update_temperatures`. This should suffice for "migration" scenarios where outside tooling has placed or re-arranged DB files into a (different) tiered configuration without going through RocksDB itself (currently, only compaction can change temperature metadata). Some details: * Refactored and added unit test for `ldb unsafe_remove_sst_file` because of shared functionality * Pulled in autovector.h changes from https://github.com/facebook/rocksdb/issues/9546 to fix SuperVersionContext move constructor (related to an older draft of this change) Possible follow-up work: * Support updating manifest with file checksums, such as when a new checksum function is used and want existing DB metadata updated for it. * It's possible that for some repair scenarios, lighter weight than full repair, we might want to support UpdateManifestForFilesState() to modify critical file details like size or checksum using same algorithm. But let's make sure these are differentiated from modifying file details in ways that don't suspect corruption (or require extreme trust). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9683 Test Plan: unit tests added Reviewed By: jay-zhuang Differential Revision: D34798828 Pulled By: pdillinger fbshipit-source-id: cfd83e8fb10761d8c9e7f9c020d68c9106a95554

-

由 Peter Dillinger 提交于

Summary: The primary goal of this change is to add support for backing up and restoring (applying on restore) file temperature metadata, without committing to either the DB manifest or the FS reported "current" temperatures being exclusive "source of truth". To achieve this goal, we need to add temperature information to backup metadata, which requires updated backup meta schema. Fortunately I prepared for this in https://github.com/facebook/rocksdb/issues/8069, which began forward compatibility in version 6.19.0 for this kind of schema update. (Previously, backup meta schema was not extensible! Making this schema update public will allow some other "nice to have" features like taking backups with hard links, and avoiding crc32c checksum computation when another checksum is already available.) While schema version 2 is newly public, the default schema version is still 1. Until we change the default, users will need to set to 2 to enable features like temperature data backup+restore. New metadata like temperature information will be ignored with a warning in versions before this change and since 6.19.0. The metadata is considered ignorable because a functioning DB can be restored without it. Some detail: * Some renaming because "future schema" is now just public schema 2. * Initialize some atomics in TestFs (linter reported) * Add temperature hint support to SstFileDumper (used by BackupEngine) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9660 Test Plan: related unit test majorly updated for the new functionality, including some shared testing support for tracking temperatures in a FS. Some other tests and testing hooks into production code also updated for making the backup meta schema change public. Reviewed By: ajkr Differential Revision: D34686968 Pulled By: pdillinger fbshipit-source-id: 3ac1fa3e67ee97ca8a5103d79cc87d872c1d862a

-

- 17 3月, 2022 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: Test only, no change to functionality. Extremely low risk of library regression. Update test key generation by maintaining existing and non-existing keys. Update db_crashtest.py to drive multiops_txn stress test for both write-committed and write-prepared. Add a make target 'blackbox_crash_test_with_multiops_txn'. Running the following commands caught the bug exposed in https://github.com/facebook/rocksdb/issues/9571. ``` $rm -rf /tmp/rocksdbtest/* $./db_stress -progress_reports=0 -test_multi_ops_txns -use_txn -clear_column_family_one_in=0 \ -column_families=1 -writepercent=0 -delpercent=0 -delrangepercent=0 -customopspercent=60 \ -readpercent=20 -prefixpercent=0 -iterpercent=20 -reopen=0 -ops_per_thread=1000 -ub_a=10000 \ -ub_c=100 -destroy_db_initially=0 -key_spaces_path=/dev/shm/key_spaces_desc -threads=32 -read_fault_one_in=0 $./db_stress -progress_reports=0 -test_multi_ops_txns -use_txn -clear_column_family_one_in=0 -column_families=1 -writepercent=0 -delpercent=0 -delrangepercent=0 -customopspercent=60 -readpercent=20 \ -prefixpercent=0 -iterpercent=20 -reopen=0 -ops_per_thread=1000 -ub_a=10000 -ub_c=100 -destroy_db_initially=0 \ -key_spaces_path=/dev/shm/key_spaces_desc -threads=32 -read_fault_one_in=0 ``` Running the following command caught a bug which will be fixed in https://github.com/facebook/rocksdb/issues/9648 . ``` $TEST_TMPDIR=/dev/shm make blackbox_crash_test_with_multiops_wc_txn ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9568 Reviewed By: jay-zhuang Differential Revision: D34308154 Pulled By: riversand963 fbshipit-source-id: 99ff1b65c19b46c471d2f2d3b47adcd342a1b9e7

-

- 12 3月, 2022 1 次提交

-

-

由 Baptiste Lemaire 提交于

Summary: Set `experimental_mempurge_threshold` back to `lambda: 10.0*random.random()` in crash test, reverting https://github.com/facebook/rocksdb/issues/8958 after fix provided in https://github.com/facebook/rocksdb/issues/9671 . Pull Request resolved: https://github.com/facebook/rocksdb/pull/9684 Reviewed By: pdillinger Differential Revision: D34820257 Pulled By: bjlemaire fbshipit-source-id: 1e5ae8c872c4ac4c4267c990ac5e3e793d77908c

-

- 09 3月, 2022 1 次提交

-

-

由 Hui Xiao 提交于

Summary: **Context:** WAL flush is currently not rate-limited by `Options::rate_limiter`. This PR is to provide rate-limiting to auto WAL flush, the one that automatically happen after each user write operation (i.e, `Options::manual_wal_flush == false`), by adding `WriteOptions::rate_limiter_options`. Note that we are NOT rate-limiting WAL flush that do NOT automatically happen after each user write, such as `Options::manual_wal_flush == true + manual FlushWAL()` (rate-limiting multiple WAL flushes), for the benefits of: - being consistent with [ReadOptions::rate_limiter_priority](https://github.com/facebook/rocksdb/blob/7.0.fb/include/rocksdb/options.h#L515) - being able to turn off some WAL flush's rate-limiting but not all (e.g, turn off specific the WAL flush of a critical user write like a service's heartbeat) `WriteOptions::rate_limiter_options` only accept `Env::IO_USER` and `Env::IO_TOTAL` currently due to an implementation constraint. - The constraint is that we currently queue parallel writes (including WAL writes) based on FIFO policy which does not factor rate limiter priority into this layer's scheduling. If we allow lower priorities such as `Env::IO_HIGH/MID/LOW` and such writes specified with lower priorities occurs before ones specified with higher priorities (even just by a tiny bit in arrival time), the former would have blocked the latter, leading to a "priority inversion" issue and contradictory to what we promise for rate-limiting priority. Therefore we only allow `Env::IO_USER` and `Env::IO_TOTAL` right now before improving that scheduling. A pre-requisite to this feature is to support operation-level rate limiting in `WritableFileWriter`, which is also included in this PR. **Summary:** - Renamed test suite `DBRateLimiterTest to DBRateLimiterOnReadTest` for adding a new test suite - Accept `rate_limiter_priority` in `WritableFileWriter`'s private and public write functions - Passed `WriteOptions::rate_limiter_options` to `WritableFileWriter` in the path of automatic WAL flush. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9607 Test Plan: - Added new unit test to verify existing flush/compaction rate-limiting does not break, since `DBTest, RateLimitingTest` is disabled and current db-level rate-limiting tests focus on read only (e.g, `db_rate_limiter_test`, `DBTest2, RateLimitedCompactionReads`). - Added new unit test `DBRateLimiterOnWriteWALTest, AutoWalFlush` - `strace -ftt -e trace=write ./db_bench -benchmarks=fillseq -db=/dev/shm/testdb -rate_limit_auto_wal_flush=1 -rate_limiter_bytes_per_sec=15 -rate_limiter_refill_period_us=1000000 -write_buffer_size=100000000 -disable_auto_compactions=1 -num=100` - verified that WAL flush(i.e, system-call _write_) were chunked into 15 bytes and each _write_ was roughly 1 second apart - verified the chunking disappeared when `-rate_limit_auto_wal_flush=0` - crash test: `python3 tools/db_crashtest.py blackbox --disable_wal=0 --rate_limit_auto_wal_flush=1 --rate_limiter_bytes_per_sec=10485760 --interval=10` killed as normal **Benchmarked on flush/compaction to ensure no performance regression:** - compaction with rate-limiting (see table 1, avg over 1280-run): pre-change: **915635 micros/op**; post-change: **907350 micros/op (improved by 0.106%)** ``` #!/bin/bash TEST_TMPDIR=/dev/shm/testdb START=1 NUM_DATA_ENTRY=8 N=10 rm -f compact_bmk_output.txt compact_bmk_output_2.txt dont_care_output.txt for i in $(eval echo "{$START..$NUM_DATA_ENTRY}") do NUM_RUN=$(($N*(2**($i-1)))) for j in $(eval echo "{$START..$NUM_RUN}") do ./db_bench --benchmarks=fillrandom -db=$TEST_TMPDIR -disable_auto_compactions=1 -write_buffer_size=6710886 > dont_care_output.txt && ./db_bench --benchmarks=compact -use_existing_db=1 -db=$TEST_TMPDIR -level0_file_num_compaction_trigger=1 -rate_limiter_bytes_per_sec=100000000 | egrep 'compact' done > compact_bmk_output.txt && awk -v NUM_RUN=$NUM_RUN '{sum+=$3;sum_sqrt+=$3^2}END{print sum/NUM_RUN, sqrt(sum_sqrt/NUM_RUN-(sum/NUM_RUN)^2)}' compact_bmk_output.txt >> compact_bmk_output_2.txt done ``` - compaction w/o rate-limiting (see table 2, avg over 640-run): pre-change: **822197 micros/op**; post-change: **823148 micros/op (regressed by 0.12%)** ``` Same as above script, except that -rate_limiter_bytes_per_sec=0 ``` - flush with rate-limiting (see table 3, avg over 320-run, run on the [patch](https://github.com/hx235/rocksdb/commit/ee5c6023a9f6533fab9afdc681568daa21da4953) to augment current db_bench ): pre-change: **745752 micros/op**; post-change: **745331 micros/op (regressed by 0.06 %)** ``` #!/bin/bash TEST_TMPDIR=/dev/shm/testdb START=1 NUM_DATA_ENTRY=8 N=10 rm -f flush_bmk_output.txt flush_bmk_output_2.txt for i in $(eval echo "{$START..$NUM_DATA_ENTRY}") do NUM_RUN=$(($N*(2**($i-1)))) for j in $(eval echo "{$START..$NUM_RUN}") do ./db_bench -db=$TEST_TMPDIR -write_buffer_size=1048576000 -num=1000000 -rate_limiter_bytes_per_sec=100000000 -benchmarks=fillseq,flush | egrep 'flush' done > flush_bmk_output.txt && awk -v NUM_RUN=$NUM_RUN '{sum+=$3;sum_sqrt+=$3^2}END{print sum/NUM_RUN, sqrt(sum_sqrt/NUM_RUN-(sum/NUM_RUN)^2)}' flush_bmk_output.txt >> flush_bmk_output_2.txt done ``` - flush w/o rate-limiting (see table 4, avg over 320-run, run on the [patch](https://github.com/hx235/rocksdb/commit/ee5c6023a9f6533fab9afdc681568daa21da4953) to augment current db_bench): pre-change: **487512 micros/op**, post-change: **485856 micors/ops (improved by 0.34%)** ``` Same as above script, except that -rate_limiter_bytes_per_sec=0 ``` | table 1 - compact with rate-limiting| #-run | (pre-change) avg micros/op | std micros/op | (post-change) avg micros/op | std micros/op | change in avg micros/op (%) -- | -- | -- | -- | -- | -- 10 | 896978 | 16046.9 | 901242 | 15670.9 | 0.475373978 20 | 893718 | 15813 | 886505 | 17544.7 | -0.8070778478 40 | 900426 | 23882.2 | 894958 | 15104.5 | -0.6072681153 80 | 906635 | 21761.5 | 903332 | 23948.3 | -0.3643141948 160 | 898632 | 21098.9 | 907583 | 21145 | 0.9960695813 3.20E+02 | 905252 | 22785.5 | 908106 | 25325.5 | 0.3152713278 6.40E+02 | 905213 | 23598.6 | 906741 | 21370.5 | 0.1688000504 **1.28E+03** | **908316** | **23533.1** | **907350** | **24626.8** | **-0.1063506533** average over #-run | 901896.25 | 21064.9625 | 901977.125 | 20592.025 | 0.008967217682 | table 2 - compact w/o rate-limiting| #-run | (pre-change) avg micros/op | std micros/op | (post-change) avg micros/op | std micros/op | change in avg micros/op (%) -- | -- | -- | -- | -- | -- 10 | 811211 | 26996.7 | 807586 | 28456.4 | -0.4468627768 20 | 815465 | 14803.7 | 814608 | 28719.7 | -0.105093413 40 | 809203 | 26187.1 | 797835 | 25492.1 | -1.404839082 80 | 822088 | 28765.3 | 822192 | 32840.4 | 0.01265071379 160 | 821719 | 36344.7 | 821664 | 29544.9 | -0.006693285661 3.20E+02 | 820921 | 27756.4 | 821403 | 28347.7 | 0.05871454135 **6.40E+02** | **822197** | **28960.6** | **823148** | **30055.1** | **0.1156657103** average over #-run | 8.18E+05 | 2.71E+04 | 8.15E+05 | 2.91E+04 | -0.25 | table 3 - flush with rate-limiting| #-run | (pre-change) avg micros/op | std micros/op | (post-change) avg micros/op | std micros/op | change in avg micros/op (%) -- | -- | -- | -- | -- | -- 10 | 741721 | 11770.8 | 740345 | 5949.76 | -0.1855144994 20 | 735169 | 3561.83 | 743199 | 9755.77 | 1.09226586 40 | 743368 | 8891.03 | 742102 | 8683.22 | -0.1703059588 80 | 742129 | 8148.51 | 743417 | 9631.58| 0.1735547324 160 | 749045 | 9757.21 | 746256 | 9191.86 | -0.3723407806 **3.20E+02** | **745752** | **9819.65** | **745331** | **9840.62** | **-0.0564530836** 6.40E+02 | 749006 | 11080.5 | 748173 | 10578.7 | -0.1112140624 average over #-run | 743741.4286 | 9004.218571 | 744117.5714 | 9090.215714 | 0.05057441238 | table 4 - flush w/o rate-limiting| #-run | (pre-change) avg micros/op | std micros/op | (post-change) avg micros/op | std micros/op | change in avg micros/op (%) -- | -- | -- | -- | -- | -- 10 | 477283 | 24719.6 | 473864 | 12379 | -0.7163464863 20 | 486743 | 20175.2 | 502296 | 23931.3 | 3.195320734 40 | 482846 | 15309.2 | 489820 | 22259.5 | 1.444352858 80 | 491490 | 21883.1 | 490071 | 23085.7 | -0.2887139108 160 | 493347 | 28074.3 | 483609 | 21211.7 | -1.973864238 **3.20E+02** | **487512** | **21401.5** | **485856** | **22195.2** | **-0.3396839462** 6.40E+02 | 490307 | 25418.6 | 485435 | 22405.2 | -0.9936631539 average over #-run | 4.87E+05 | 2.24E+04 | 4.87E+05 | 2.11E+04 | 0.00E+00 Reviewed By: ajkr Differential Revision: D34442441 Pulled By: hx235 fbshipit-source-id: 4790f13e1e5c0a95ae1d1cc93ffcf69dc6e78bdd

-

- 02 3月, 2022 1 次提交

-

-

由 sdong 提交于

Summary: BlockBasedTableOptions.hash_index_allow_collision is already deprecated and has no effect. Delete it for preparing 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9454 Test Plan: Run all existing tests. Reviewed By: ajkr Differential Revision: D33805827 fbshipit-source-id: ed8a436d1d083173ec6aef2a762ba02e1eefdc9d

-

- 26 2月, 2022 1 次提交

-

-

由 Changneng Chen 提交于

Summary: Add the configuration options and help messages of BlobDB to `ldb` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9630 Test Plan: `python ./tools/ldb_test.py` Reviewed By: ltamasi Differential Revision: D34443176 Pulled By: changneng fbshipit-source-id: 5b3f185cdfc2561e06dd37215c7edfbca07dbe80

-

- 24 2月, 2022 1 次提交

-

-

由 Bo Wang 提交于

Summary: **Summary:** RocksDB uses a block cache to reduce IO and make queries more efficient. The block cache is based on the LRU algorithm (LRUCache) and keeps objects containing uncompressed data, such as Block, ParsedFullFilterBlock etc. It allows the user to configure a second level cache (rocksdb::SecondaryCache) to extend the primary block cache by holding items evicted from it. Some of the major RocksDB users, like MyRocks, use direct IO and would like to use a primary block cache for uncompressed data and a secondary cache for compressed data. The latter allows us to mitigate the loss of the Linux page cache due to direct IO. This PR includes a concrete implementation of rocksdb::SecondaryCache that integrates with compression libraries such as LZ4 and implements an LRU cache to hold compressed blocks. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9518 Test Plan: In this PR, the lru_secondary_cache_test.cc includes the following tests: 1. The unit tests for the secondary cache with either compression or no compression, such as basic tests, fails tests. 2. The integration tests with both primary cache and this secondary cache . **Follow Up:** 1. Statistics (e.g. compression ratio) will be added in another PR. 2. Once this implementation is ready, I will do some shadow testing and benchmarking with UDB to measure the impact. Reviewed By: anand1976 Differential Revision: D34430930 Pulled By: gitbw95 fbshipit-source-id: 218d78b672a2f914856d8a90ff32f2f5b5043ded

-

- 18 2月, 2022 1 次提交

-

-

由 Siddhartha Roychowdhury 提交于

Summary: When WAL compression is enabled, add a record (new record type) to store the compression type to indicate that all subsequent records are compressed. The log reader will store the compression type when this record is encountered and use the type to uncompress the subsequent records. Compress and uncompress to be implemented in subsequent diffs. Enabled WAL compression in some WAL tests to check for regressions. Some tests that rely on offsets have been disabled. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9556 Reviewed By: anand1976 Differential Revision: D34308216 Pulled By: sidroyc fbshipit-source-id: 7f10595e46f3277f1ea2d309fbf95e2e935a8705

-

- 17 2月, 2022 2 次提交

-

-

由 Andrew Kryczka 提交于

Summary: Users can set the priority for file reads associated with their operation by setting `ReadOptions::rate_limiter_priority` to something other than `Env::IO_TOTAL`. Rate limiting `VerifyChecksum()` and `VerifyFileChecksums()` is the motivation for this PR, so it also includes benchmarks and minor bug fixes to get that working. `RandomAccessFileReader::Read()` already had support for rate limiting compaction reads. I changed that rate limiting to be non-specific to compaction, but rather performed according to the passed in `Env::IOPriority`. Now the compaction read rate limiting is supported by setting `rate_limiter_priority = Env::IO_LOW` on its `ReadOptions`. There is no default value for the new `Env::IOPriority` parameter to `RandomAccessFileReader::Read()`. That means this PR goes through all callers (in some cases multiple layers up the call stack) to find a `ReadOptions` to provide the priority. There are TODOs for cases I believe it would be good to let user control the priority some day (e.g., file footer reads), and no TODO in cases I believe it doesn't matter (e.g., trace file reads). The API doc only lists the missing cases where a file read associated with a provided `ReadOptions` cannot be rate limited. For cases like file ingestion checksum calculation, there is no API to provide `ReadOptions` or `Env::IOPriority`, so I didn't count that as missing. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9424 Test Plan: - new unit tests - new benchmarks on ~50MB database with 1MB/s read rate limit and 100ms refill interval; verified with strace reads are chunked (at 0.1MB per chunk) and spaced roughly 100ms apart. - setup command: `./db_bench -benchmarks=fillrandom,compact -db=/tmp/testdb -target_file_size_base=1048576 -disable_auto_compactions=true -file_checksum=true` - benchmarks command: `strace -ttfe pread64 ./db_bench -benchmarks=verifychecksum,verifyfilechecksums -use_existing_db=true -db=/tmp/testdb -rate_limiter_bytes_per_sec=1048576 -rate_limit_bg_reads=1 -rate_limit_user_ops=true -file_checksum=true` - crash test using IO_USER priority on non-validation reads with https://github.com/facebook/rocksdb/issues/9567 reverted: `python3 tools/db_crashtest.py blackbox --max_key=1000000 --write_buffer_size=524288 --target_file_size_base=524288 --level_compaction_dynamic_level_bytes=true --duration=3600 --rate_limit_bg_reads=true --rate_limit_user_ops=true --rate_limiter_bytes_per_sec=10485760 --interval=10` Reviewed By: hx235 Differential Revision: D33747386 Pulled By: ajkr fbshipit-source-id: a2d985e97912fba8c54763798e04f006ccc56e0c

-

由 sdong 提交于

Summary: Opening DB as seconeary instance has been supported in ldb but it is not mentioned in --help. Mention it there. The part of the help message after the modification: ``` commands MUST specify --db=<full_path_to_db_directory> when necessary commands can optionally specify --env_uri=<uri_of_environment> or --fs_uri=<uri_of_filesystem> if necessary --secondary_path=<secondary_path> to open DB as secondary instance. Operations not supported in secondary instance will fail. ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9582 Test Plan: Build and run ldb --help Reviewed By: riversand963 Differential Revision: D34286427 fbshipit-source-id: e56c5290d0548098ab6acc6dde2167f5a64f34f3

-

- 16 2月, 2022 1 次提交

-

-

由 Andrew Kryczka 提交于

Summary: I did another pass through running CI jobs. It is uncommon now to see `db_stress` stuck in the setup phase but still happen. One reason was repeatedly reading/verifying checksum on filter blocks when `-cache_index_and_filter_blocks=1` and `-cache_size=1048576`. To address that I increased the cache size. Another reason was having a WAL with many range tombstones and every `db_stress` run using `-avoid_flush_during_recovery=1` (in that scenario, the setup phase spent too much CPU in `rocksdb::MemTable::NewRangeTombstoneIteratorInternal()`). To address that I fixed the `-avoid_flush_during_recovery` setting so it is reevaluated for every `db_stress` run. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9483 Reviewed By: riversand963 Differential Revision: D33922929 Pulled By: ajkr fbshipit-source-id: 0a298ec7c4df6f6b44620233996047a2dc7ee5f3

-

- 15 2月, 2022 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9566 Reviewed By: riversand963 Differential Revision: D34226256 Pulled By: ltamasi fbshipit-source-id: 4374b819e937c35e3a866ba5b5eafba87ff20af3

-

- 12 2月, 2022 1 次提交

-

-

由 Peter Dillinger 提交于

Summary: This change removes the ability to configure the deprecated, inefficient block-based filter in the public API. Options that would have enabled it now use "full" (and optionally partitioned) filters. Existing block-based filters can still be read and used, and a "back door" way to build them still exists, for testing and in case of trouble. About the only way this removal would cause an issue for users is if temporary memory for filter construction greatly increases. In HISTORY.md we suggest a few possible mitigations: partitioned filters, smaller SST files, or setting reserve_table_builder_memory=true. Or users who have customized a FilterPolicy using the CreateFilter/KeyMayMatch mechanism removed in https://github.com/facebook/rocksdb/issues/9501 will have to upgrade their code. (It's long past time for people to move to the new builder/reader customization interface.) This change also introduces some internal-use-only configuration strings for testing specific filter implementations while bypassing some compatibility / intelligence logic. This is intended to hint at a path toward making FilterPolicy Customizable, but it also gives us a "back door" way to configure block-based filter. Aside: updated db_bench so that -readonly implies -use_existing_db Pull Request resolved: https://github.com/facebook/rocksdb/pull/9535 Test Plan: Unit tests updated. Specifically, * BlockBasedTableTest.BlockReadCountTest is tweaked to validate the back door configuration interface and ignoring of `use_block_based_builder`. * BlockBasedTableTest.TracingGetTest is migrated from testing block-based filter access pattern to full filter access patter, by re-ordering some things. * Options test (pretty self-explanatory) Performance test - create with `./db_bench -db=/dev/shm/rocksdb1 -bloom_bits=10 -cache_index_and_filter_blocks=1 -benchmarks=fillrandom -num=10000000 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0` with and without `-use_block_based_filter`, which creates a DB with 21 SST files in L0. Read with `./db_bench -db=/dev/shm/rocksdb1 -readonly -bloom_bits=10 -cache_index_and_filter_blocks=1 -benchmarks=readrandom -num=10000000 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -duration=30` Without -use_block_based_filter: readrandom 464 ops/sec, 689280 KB DB With -use_block_based_filter: readrandom 169 ops/sec, 690996 KB DB No consistent difference with fillrandom Reviewed By: jay-zhuang Differential Revision: D34153871 Pulled By: pdillinger fbshipit-source-id: 31f4a933c542f8f09aca47fa64aec67832a69738

-

- 09 2月, 2022 3 次提交

-

-

由 Akanksha Mahajan 提交于

Summary: In RocksDB option new_table_reader_for_compaction_inputs has not effect on Compaction or on the behavior of RocksDB library. Therefore, we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9443 Test Plan: CircleCI Reviewed By: ajkr Differential Revision: D33788508 Pulled By: akankshamahajan15 fbshipit-source-id: 324ca6f12bfd019e9bd5e1b0cdac39be5c3cec7d

-

由 Akanksha Mahajan 提交于

Summary: Add releases till 6.29.fb to compatibility check for forward and backward compatibility Pull Request resolved: https://github.com/facebook/rocksdb/pull/9529 Test Plan: run locally Reviewed By: hx235 Differential Revision: D34086063 Pulled By: akankshamahajan15 fbshipit-source-id: 4ccff513c99cf2d0e41da0b76ab27ffcfdffe7df

-

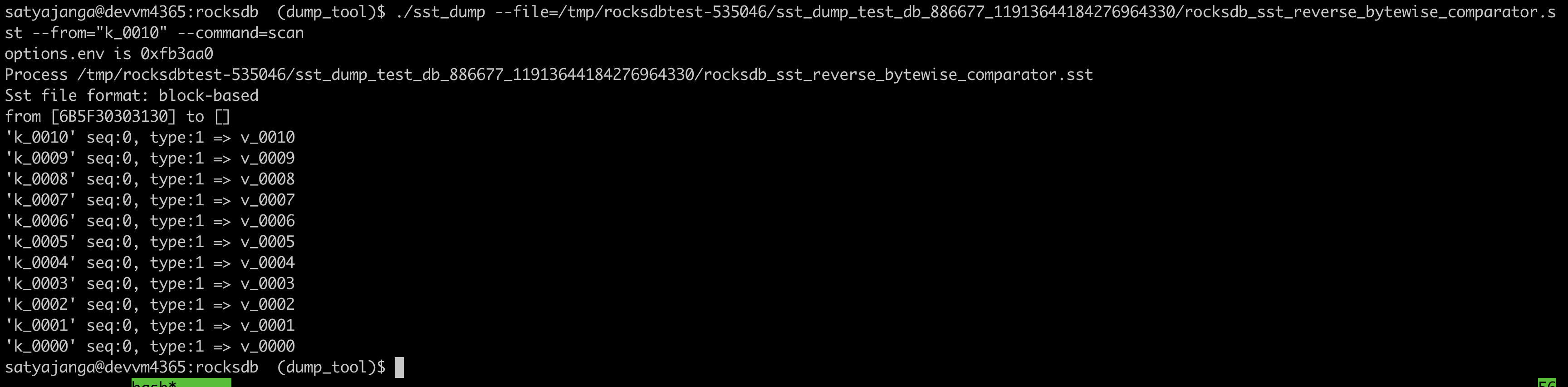

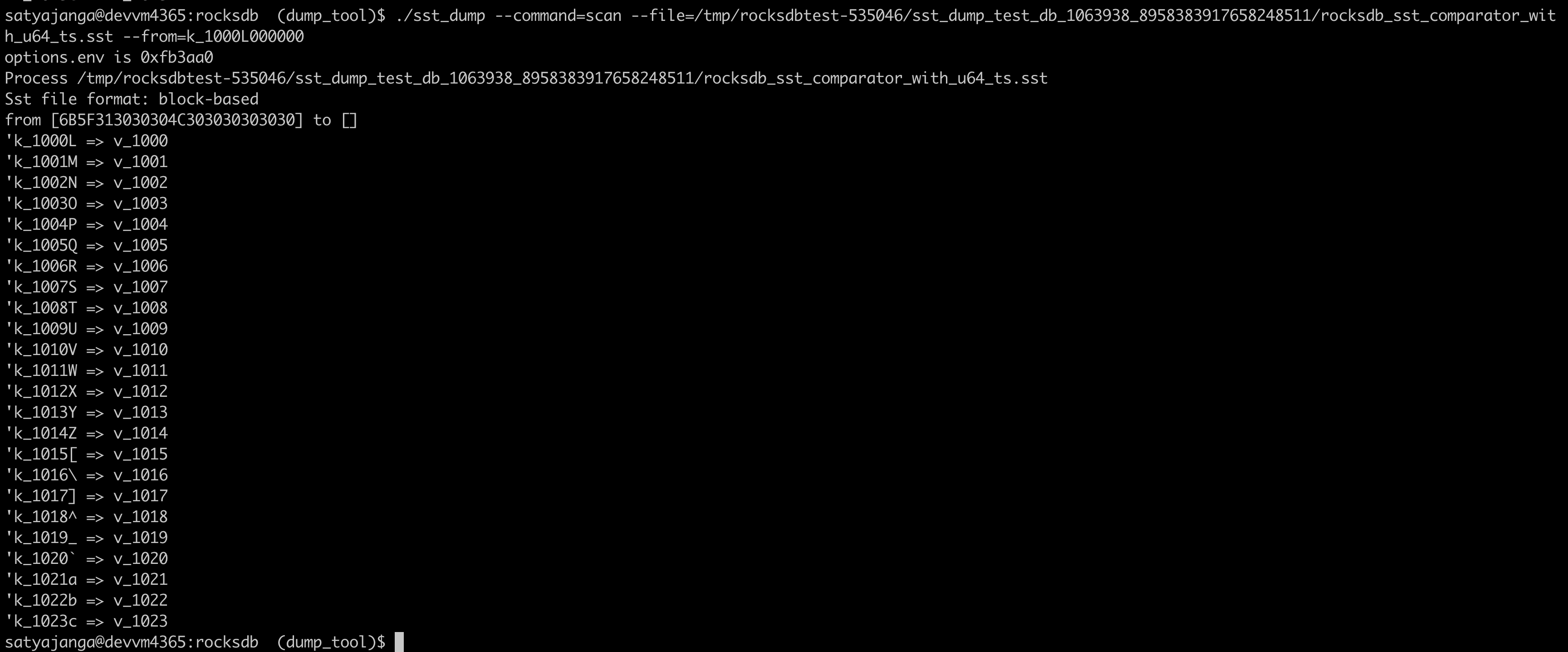

由 satyajanga 提交于

Summary: We introduced a new Comparator for timestamp in user keys. In the sst_dump_tool by default we use BytewiseComparator to read sst files. This change allows us to read comparator_name from table properties in meta data block and use it to read. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9491 Test Plan: added unittests for new functionality. make check   Reviewed By: riversand963 Differential Revision: D33993614 Pulled By: satyajanga fbshipit-source-id: 4b5cf938e6d2cb3931d763bef5baccc900b8c536

-

- 08 2月, 2022 1 次提交

-

-

由 Peter Dillinger 提交于

Summary: After https://github.com/facebook/rocksdb/issues/9481, we are using newer default compiler for build-format-compatible CircleCI nightly job, which fails on building 2.2.fb.branch branch because it tries to use a pre-compiled libsnappy.a that is checked into the repo (!). This works around that by setting SNAPPY_LDFLAGS=-lsnappy, which is only understood by such old versions. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9517 Test Plan: Run check_format_compatible.sh on Ubuntu 20 AWS machine, watch nightly run Reviewed By: hx235 Differential Revision: D34055561 Pulled By: pdillinger fbshipit-source-id: 45f9d428dd082f026773bfa8d9dd4dad66fc9378

-

- 05 2月, 2022 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: The patch does some cleanup in and around `VersionStorageInfo`: * Renames the method `PrepareApply` to `PrepareAppend` in `Version` to make it clear that it is to be called before appending the `Version` to `VersionSet` (via `AppendVersion`), not before applying any `VersionEdit`s. * Introduces a helper method `VersionStorageInfo::PrepareForVersionAppend` (called by `Version::PrepareAppend`) that encapsulates the population of the various derived data structures in `VersionStorageInfo`, and turns the methods computing the derived structures (`UpdateNumNonEmptyLevels`, `CalculateBaseBytes` etc.) into private helpers. * Changes `Version::PrepareAppend` so it only calls `UpdateAccumulatedStats` if the `update_stats` flag is set. (Earlier, this was checked by the callee.) Related to this, it also moves the call to `ComputeCompensatedSizes` to `VersionStorageInfo::PrepareForVersionAppend`. * Updates and cleans up `version_builder_test`, `version_set_test`, and `compaction_picker_test` so `PrepareForVersionAppend` is called anytime a new `VersionStorageInfo` is set up or saved. This cleanup also involves splitting `VersionStorageInfoTest.MaxBytesForLevelDynamic` into multiple smaller test cases. * Fixes up a bunch of comments that were outdated or just plain incorrect. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9494 Test Plan: Ran `make check` and the crash test script for a while. Reviewed By: riversand963 Differential Revision: D33971666 Pulled By: ltamasi fbshipit-source-id: fda52faac7783041126e4f8dec0fe01bdcadf65a

-

- 04 2月, 2022 2 次提交

-

-

由 Yanqin Jin 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9497 Reviewed By: ajkr Differential Revision: D33990256 Pulled By: riversand963 fbshipit-source-id: 268ce16b037e23e42b14fa0fcb45535582e1a0d6

-

由 mrambacher 提交于

Summary: Added a CountedFileSystem that tracks a number of file operations (opens, closes, deletes, renames, flushes, syncs, fsyncs, reads, writes). This class was based on the ReportFileOpEnv from db_bench. This is a stepping stone PR to be able to change the SpecialEnv into a SpecialFileSystem, where several of the file varieties wish to do operation counting. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9283 Reviewed By: pdillinger Differential Revision: D33062004 Pulled By: mrambacher fbshipit-source-id: d0d297a7fb9c48c06cbf685e5fa755c27193b6f5

-

- 02 2月, 2022 2 次提交

-

-

由 Yanqin Jin 提交于

Summary: ajkr reminded me that we have a rule of not including per-kv related data in `WriteOptions`. Namely, `WriteOptions` should not include information about "what-to-write", but should just include information about "how-to-write". According to this rule, `WriteOptions::timestamp` (experimental) is clearly a violation. Therefore, this PR removes `WriteOptions::timestamp` for compliance. After the removal, we need to pass timestamp info via another set of APIs. This PR proposes a set of overloaded functions `Put(write_opts, key, value, ts)`, `Delete(write_opts, key, ts)`, and `SingleDelete(write_opts, key, ts)`. Planned to add `Write(write_opts, batch, ts)`, but its complexity made me reconsider doing it in another PR (maybe). For better checking and returning error early, we also add a new set of APIs to `WriteBatch` that take extra `timestamp` information when writing to `WriteBatch`es. These set of APIs in `WriteBatchWithIndex` are currently not supported, and are on our TODO list. Removed `WriteBatch::AssignTimestamps()` and renamed `WriteBatch::AssignTimestamp()` to `WriteBatch::UpdateTimestamps()` since this method require that all keys have space for timestamps allocated already and multiple timestamps can be updated. The constructor of `WriteBatch` now takes a fourth argument `default_cf_ts_sz` which is the timestamp size of the default column family. This will be used to allocate space when calling APIs that do not specify a column family handle. Also, updated `DB::Get()`, `DB::MultiGet()`, `DB::NewIterator()`, `DB::NewIterators()` methods, replacing some assertions about timestamp to returning Status code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8946 Test Plan: make check ./db_bench -benchmarks=fillseq,fillrandom,readrandom,readseq,deleterandom -user_timestamp_size=8 ./db_stress --user_timestamp_size=8 -nooverwritepercent=0 -test_secondary=0 -secondary_catch_up_one_in=0 -continuous_verification_interval=0 Make sure there is no perf regression by running the following ``` ./db_bench_opt -db=/dev/shm/rocksdb -use_existing_db=0 -level0_stop_writes_trigger=256 -level0_slowdown_writes_trigger=256 -level0_file_num_compaction_trigger=256 -disable_wal=1 -duration=10 -benchmarks=fillrandom ``` Before this PR ``` DB path: [/dev/shm/rocksdb] fillrandom : 1.831 micros/op 546235 ops/sec; 60.4 MB/s ``` After this PR ``` DB path: [/dev/shm/rocksdb] fillrandom : 1.820 micros/op 549404 ops/sec; 60.8 MB/s ``` Reviewed By: ltamasi Differential Revision: D33721359 Pulled By: riversand963 fbshipit-source-id: c131561534272c120ffb80711d42748d21badf09

-

由 Hui Xiao 提交于

Summary: Note: rebase on and merge after https://github.com/facebook/rocksdb/pull/9349, https://github.com/facebook/rocksdb/pull/9345, (optional) https://github.com/facebook/rocksdb/pull/9393 **Context:** (Quoted from pdillinger) Layers of information during new Bloom/Ribbon Filter construction in building block-based tables includes the following: a) set of keys to add to filter b) set of hashes to add to filter (64-bit hash applied to each key) c) set of Bloom indices to set in filter, with duplicates d) set of Bloom indices to set in filter, deduplicated e) final filter and its checksum This PR aims to detect corruption (e.g, unexpected hardware/software corruption on data structures residing in the memory for a long time) from b) to e) and leave a) as future works for application level. - b)'s corruption is detected by verifying the xor checksum of the hash entries calculated as the entries accumulate before being added to the filter. (i.e, `XXPH3FilterBitsBuilder::MaybeVerifyHashEntriesChecksum()`) - c) - e)'s corruption is detected by verifying the hash entries indeed exists in the constructed filter by re-querying these hash entries in the filter (i.e, `FilterBitsBuilder::MaybePostVerify()`) after computing the block checksum (except for PartitionFilter, which is done right after each `FilterBitsBuilder::Finish` for impl simplicity - see code comment for more). For this stage of detection, we assume hash entries are not corrupted after checking on b) since the time interval from b) to c) is relatively short IMO. Option to enable this feature of detection is `BlockBasedTableOptions::detect_filter_construct_corruption` which is false by default. **Summary:** - Implemented new functions `XXPH3FilterBitsBuilder::MaybeVerifyHashEntriesChecksum()` and `FilterBitsBuilder::MaybePostVerify()` - Ensured hash entries, final filter and banding and their [cache reservation ](https://github.com/facebook/rocksdb/issues/9073) are released properly despite corruption - See [Filter.construction.artifacts.release.point.pdf ](https://github.com/facebook/rocksdb/files/7923487/Design.Filter.construction.artifacts.release.point.pdf) for high-level design - Bundled and refactored hash entries's related artifact in XXPH3FilterBitsBuilder into `HashEntriesInfo` for better control on lifetime of these artifact during `SwapEntires`, `ResetEntries` - Ensured RocksDB block-based table builder calls `FilterBitsBuilder::MaybePostVerify()` after constructing the filter by `FilterBitsBuilder::Finish()` - When encountering such filter construction corruption, stop writing the filter content to files and mark such a block-based table building non-ok by storing the corruption status in the builder. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9342 Test Plan: - Added new unit test `DBFilterConstructionCorruptionTestWithParam.DetectCorruption` - Included this new feature in `DBFilterConstructionReserveMemoryTestWithParam.ReserveMemory` as this feature heavily touch ReserveMemory's impl - For fallback case, I run `./filter_bench -impl=3 -detect_filter_construct_corruption=true -reserve_table_builder_memory=true -strict_capacity_limit=true -quick -runs 10 | grep 'Build avg'` to make sure nothing break. - Added to `filter_bench`: increased filter construction time by **30%**, mostly by `MaybePostVerify()` - FastLocalBloom - Before change: `./filter_bench -impl=2 -quick -runs 10 | grep 'Build avg'`: **28.86643s** - After change: - `./filter_bench -impl=2 -detect_filter_construct_corruption=false -quick -runs 10 | grep 'Build avg'` (expect a tiny increase due to MaybePostVerify is always called regardless): **27.6644s (-4% perf improvement might be due to now we don't drop bloom hash entry in `AddAllEntries` along iteration but in bulk later, same with the bypassing-MaybePostVerify case below)** - `./filter_bench -impl=2 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'` (expect acceptable increase): **34.41159s (+20%)** - `./filter_bench -impl=2 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'` (by-passing MaybePostVerify, expect minor increase): **27.13431s (-6%)** - Standard128Ribbon - Before change: `./filter_bench -impl=3 -quick -runs 10 | grep 'Build avg'`: **122.5384s** - After change: - `./filter_bench -impl=3 -detect_filter_construct_corruption=false -quick -runs 10 | grep 'Build avg'` (expect a tiny increase due to MaybePostVerify is always called regardless - verified by removing MaybePostVerify under this case and found only +-1ns difference): **124.3588s (+2%)** - `./filter_bench -impl=3 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'`(expect acceptable increase): **159.4946s (+30%)** - `./filter_bench -impl=3 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'`(by-passing MaybePostVerify, expect minor increase) : **125.258s (+2%)** - Added to `db_stress`: `make crash_test`, `./db_stress --detect_filter_construct_corruption=true` - Manually smoke-tested: manually corrupted the filter construction in some db level tests with basic PUT and background flush. As expected, the error did get returned to users in subsequent PUT and Flush status. Reviewed By: pdillinger Differential Revision: D33746928 Pulled By: hx235 fbshipit-source-id: cb056426be5a7debc1cd16f23bc250f36a08ca57

-

- 01 2月, 2022 2 次提交

-

-

由 Peter Dillinger 提交于

Summary: Apparently setting total_order_seek=true for DB::Get was intended to allow accurate read semantics if the current prefix extractor doesn't match what was used to generate SST files on disk. But since prefix_extractor was made a mutable option in 5.14.0, we have been able to detect this case and provide the correct semantics regardless of the total_order_seek option. Since that time, the option has only made Get() slower in a reasonably common case: prefix_extractor unchanged and whole_key_filtering=false. So this change primarily removes unnecessary effect of total_order_seek on Get. Also cleans up some related comments. Also adds a -total_order_seek option to db_bench and canonicalizes handling of ReadOptions in db_bench so that command line options have the expected association with library features. (There is potential for change in regression test behavior, but the old behavior is likely indefensible, or some other inconsistency would need to be fixed.) TODO in follow-up work: there should be no reason for Get() to depend on current prefix extractor at all. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9427 Test Plan: Unit tests updated. Performance (using db_bench update) Create DB with `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks=fillrandom -num=10000000 -disable_wal=1 -write_buffer_size=10000000 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12 -whole_key_filtering=0` Test with and without `-total_order_seek` on `TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -use_existing_db -readonly -benchmarks=readrandom -num=10000000 -duration=40 -disable_wal=1 -bloom_bits=16 -compaction_style=2 -fifo_compaction_max_table_files_size_mb=10000 -fifo_compaction_allow_compaction=0 -prefix_size=12` Before this change, total_order_seek=false: 25188 ops/sec Before this change, total_order_seek=true: 1222 ops/sec (~20x slower) After this change, total_order_seek=false: 24570 ops/sec After this change, total_order_seek=true: 25012 ops/sec (indistinguishable) Reviewed By: siying Differential Revision: D33753458 Pulled By: pdillinger fbshipit-source-id: bf892f34907a5e407d9c40bd4d42f0adbcbe0014

-

由 Andrew Kryczka 提交于

Summary: Despite attempts to optimize `db_stress` setup phase (i.e., pre-`OperateDb()`) latency in https://github.com/facebook/rocksdb/issues/9470 and https://github.com/facebook/rocksdb/issues/9475, it still always took tens of seconds. Since we still aren't able to setup a 100M key `db_stress` quickly, we should reduce the number of keys. This PR reduces it 4x while increasing `value_size_mult` 4x (from its default value of 8) so that memtables and SST files fill at a similar rate compared to before this PR. Also disabled bzip2 compression since we'll probably never use it and I noticed many CI runs spending majority of CPU on bzip2 decompression. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9476 Reviewed By: siying Differential Revision: D33898520 Pulled By: ajkr fbshipit-source-id: 855021784ad9664f2be5bce21f0339a1cf93230d

-

- 29 1月, 2022 1 次提交

-

-

由 Hui Xiao 提交于

Summary: **Context/Summary:** AdvancedColumnFamilyOptions::rate_limit_delay_max_milliseconds has been marked as deprecated and it's time to actually remove the code. - Keep `soft_rate_limit`/`hard_rate_limit` in `cf_mutable_options_type_info` to prevent throwing `InvalidArgument` in `GetColumnFamilyOptionsFromMap` when reading an option file still with these options (e.g, old option file generated from RocksDB before the deprecation) - Keep `soft_rate_limit`/`hard_rate_limit` in under `OptionsOldApiTest.GetOptionsFromMapTest` to test the case mentioned above. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9455 Test Plan: Rely on my eyeball and CI Reviewed By: ajkr Differential Revision: D33811664 Pulled By: hx235 fbshipit-source-id: 866859427fe710354a90f1095057f80116365ff0

-

- 28 1月, 2022 8 次提交

-

-

由 Akanksha Mahajan 提交于

Summary: In RocksDB DBOptions::skip_log_error_on_recovery is marked as "NOT SUPPORTED" for a long time, and setting this option does not have any effect on the behavior of RocksDB library. Therefore, we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9434 Test Plan: CircleCI Reviewed By: ajkr Differential Revision: D33763015 Pulled By: akankshamahajan15 fbshipit-source-id: 11f09643298da6c02d3dcdb090b996f4c3cfdd76

-

由 Peter Dillinger 提交于

Summary: Even after https://github.com/facebook/rocksdb/issues/9461 could see ``` Error: please specify prefix_size for test_batches_snapshots test! ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9463 Test Plan: run `make blackbox_crashtest` for a long time. (Unfortunately, it's taking a long time to reproduce these failures) Reviewed By: akankshamahajan15 Differential Revision: D33838152 Pulled By: pdillinger fbshipit-source-id: b9a73c5bbb68df53f14c22b9b52f61d1f7ef38af

-

由 Jay Zhuang 提交于

Summary: The API is deprecated long time ago. Clean up the codebase by removing it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9462 Test Plan: CI, fake release: D33835220 Reviewed By: riversand963 Differential Revision: D33835103 Pulled By: jay-zhuang fbshipit-source-id: 6d2dc12c8e7fdbe2700865a3e61f0e3f78bd8184

-

由 Peter Dillinger 提交于

Summary: Changes in https://github.com/facebook/rocksdb/issues/9453 could trigger ``` stderr: Error: prefixpercent is non-zero while prefix_size is not positive! ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9461 Test Plan: run `make blackbox_crashtest` for a long time Reviewed By: ajkr Differential Revision: D33830751 Pulled By: pdillinger fbshipit-source-id: be88377dcaa47e4bb7adb0347762639eff8f1476

-

由 Peter Dillinger 提交于

Summary: This also removes the obsolete names BackupableDBOptions and UtilityDB. API users must now use BackupEngineOptions and DBWithTTL::Open. In C API, `rocksdb_backupable_db_*` is replaced `rocksdb_backup_engine_*`. Similar renaming in Java API. In reference to https://github.com/facebook/rocksdb/issues/9389 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9438 Test Plan: CI Reviewed By: mrambacher Differential Revision: D33780269 Pulled By: pdillinger fbshipit-source-id: 4a6cfc5c1b4c78bcad790b9d3dd13c5fdf4a1fac

-

由 Peter Dillinger 提交于

Summary: MemTable::MultiGet was not considering range tombstones before querying Bloom filter. This means range tombstones would be skipped for keys (or prefixes) with no other entries in the memtable. This could cause old values for a key (in SST files) to still show up until the range tombstone covering it has been flushed. This is fixed by essentially disabling the memtable Bloom filter when there are any range tombstones. (This could be better optimized in the future, but good enough for now.) Did some other cleanup/optimization in the same code to (more than) offset the cost of checking on range tombstones in more cases. There is now notable improvement when memtable_whole_key_filtering and prefix_extractor are used together (unusual), and this makes MultiGet closer to the Get implementation. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9453 Test Plan: new unit test added. Added memtable Bloom to crash test. Performance testing -------------------- Build WAL-only DB (recovers to memtable): ``` TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -benchmarks=fillrandom -num=1000000 -write_buffer_size=250000000 ``` Query test command, to maximize sensitivity to the changed code: ``` TEST_TMPDIR=/dev/shm/rocksdb ./db_bench -use_existing_db -readonly -benchmarks=multireadrandom -num=10000000 -write_buffer_size=250000000 -memtable_bloom_size_ratio=0.015 -multiread_batched -batch_size=24 -threads=8 -memtable_whole_key_filtering=$MWKF -prefix_size=$PXS ``` (Note -num here is 10x larger for mostly memtable misses) Before & after run simultaneously, average over 10 iterations per data point, ops/sec. MWKF=0 PXS=0 (Bloom disabled) Before: 5724844 After: 6722066 MWKF=0 PXS=7 (prefixes hardly unique; Bloom not useful) Before: 9981319 After: 10237990 MWKF=0 PXS=8 (prefixes unique; Bloom useful) Before: 12081715 After: 12117603 MWKF=1 PXS=0 (whole key Bloom useful) Before: 11944354 After: 12096085 MWKF=1 PXS=7 (whole key Bloom useful in new version; prefixes not useful in old version) Before: 9444299 After: 11826029 MWKF=1 PXS=7 (whole key Bloom useful in new version; prefixes useful in old version) Before: 11784465 After: 11778591 Only in this last case is the 'before' *slightly* faster, perhaps because hashing prefixes is slightly faster than hashing whole keys. Otherwise, 'after' is faster. Reviewed By: ajkr Differential Revision: D33805025 Pulled By: pdillinger fbshipit-source-id: 597523cae4f4eafdf6ae6bb2bc6cb46f83b017bf

-

由 Hui Xiao 提交于

Summary: **Context/Summary:** AdvancedColumnFamilyOptions::soft_rate_limit/hard_rate_limit have been marked as deprecated and it's time to actually remove the code. - Keep `soft_rate_limit`/`hard_rate_limit` in `cf_mutable_options_type_info` to prevent throwing `InvalidArgument` in `GetColumnFamilyOptionsFromMap` when reading an option file still with these options (e.g, old option file generated from RocksDB before the deprecation) - Keep `soft_rate_limit`/`hard_rate_limit` in under `OptionsOldApiTest.GetOptionsFromMapTest` to test the case mentioned above. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9452 Test Plan: Rely on my eyeball and CI Reviewed By: ajkr Differential Revision: D33804938 Pulled By: hx235 fbshipit-source-id: 133d49f7ec5238d7efceeb0a3122a5792a2b9945

-

由 yaphet 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9415 Reviewed By: ajkr Differential Revision: D33773673 Pulled By: riversand963 fbshipit-source-id: 52b59ec5a6b01a91d3f990b7f2b0f16320afb49b

-

- 27 1月, 2022 2 次提交

-

-

由 Siddhartha Roychowdhury 提交于

Summary: Add an option to set the WAL compression algorithm - wal_compression. TODO: WAL compression is not implemented and will only support zstd initially. Will be added in subsequent diffs. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9432 Reviewed By: pdillinger Differential Revision: D33797275 Pulled By: sidroyc fbshipit-source-id: 8db81d9c9cea5e2e4f1445d3aecad8106137b8e7

-

由 Jay Zhuang 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9429 Test Plan: fake release for test: D33754513 Reviewed By: riversand963 Differential Revision: D33753637 Pulled By: jay-zhuang fbshipit-source-id: 18db4701e8f28dda8f1ab660c2be9890a8312c12

-

- 25 1月, 2022 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: This PR is one proposal to resolve https://github.com/facebook/rocksdb/issues/9382. Looking at the code, I can't think of a reason why rdb is an internal component of RocksDB: it does not require any header files NOT in `include/rocksdb`. It's a better idea to host it somewhere else. Plus, rdb requires python2 which is not supported any more. No fixes or improvements will be made, even for potential security bugs (https://www.python.org/doc/sunset-python-2/). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9399 Test Plan: make check Reviewed By: ajkr Differential Revision: D33641965 Pulled By: riversand963 fbshipit-source-id: 2a6a74693e5de36834f355e41d6865db206af48b

-