- 06 5月, 2021 1 次提交

-

-

由 mrambacher 提交于

Summary: The ImmutableCFOptions contained a bunch of fields that belonged to the ImmutableDBOptions. This change cleans that up by introducing an ImmutableOptions struct. Following the pattern of Options struct, this class inherits from the DB and CFOption structs (of the Immutable form). Only one structural change (the ImmutableCFOptions::fs was changed to a shared_ptr from a raw one) is in this PR. All of the other changes involve moving the member variables from the ImmutableCFOptions into the ImmutableOptions and changing member variables or function parameters as required for compilation purposes. Follow-on PRs may do a further clean-up of the code, such as renaming variables (such as "ImmutableOptions cf_options") and potentially eliminating un-needed function parameters (there is no longer a need to pass both an ImmutableDBOptions and an ImmutableOptions to a function). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8262 Reviewed By: pdillinger Differential Revision: D28226540 Pulled By: mrambacher fbshipit-source-id: 18ae71eadc879dedbe38b1eb8e6f9ff5c7147dbf

-

- 27 4月, 2021 1 次提交

-

-

由 mrambacher 提交于

Summary: Renaming ImmutableCFOptions::info_log and statistics to logger and stats. This is stage 2 in creating an ImmutableOptions class. It is necessary because the names match those in ImmutableOptions and have different types. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8227 Reviewed By: jay-zhuang Differential Revision: D28000967 Pulled By: mrambacher fbshipit-source-id: 3bf2aa04e8f1e8724d825b7deacf41080c14420b

-

- 15 4月, 2021 1 次提交

-

-

由 Justin Chapman 提交于

Summary: Resolves https://github.com/facebook/rocksdb/issues/8014 - Add an assertion on `DB::Open` to ensure `db_options.max_open_files` is unlimited if FIFO Compaction is being used. - This is to align with what the docs mention and to prevent premature data deletion. - Update tests to work with this assertion. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8172 Test Plan: ```bash $ make check -j$(nproc) Generated TARGETS Summary: - 6 libs - 0 binarys - 180 tests ``` Reviewed By: ajkr Differential Revision: D27768792 Pulled By: thejchap fbshipit-source-id: cf6350535e3a3577fec72bcba75b3c094dc7a6f3

-

- 15 3月, 2021 1 次提交

-

-

由 mrambacher 提交于

Summary: For performance purposes, the lower level routines were changed to use a SystemClock* instead of a std::shared_ptr<SystemClock>. The shared ptr has some performance degradation on certain hardware classes. For most of the system, there is no risk of the pointer being deleted/invalid because the shared_ptr will be stored elsewhere. For example, the ImmutableDBOptions stores the Env which has a std::shared_ptr<SystemClock> in it. The SystemClock* within the ImmutableDBOptions is essentially a "short cut" to gain access to this constant resource. There were a few classes (PeriodicWorkScheduler?) where the "short cut" property did not hold. In those cases, the shared pointer was preserved. Using db_bench readrandom perf_level=3 on my EC2 box, this change performed as well or better than 6.17: 6.17: readrandom : 28.046 micros/op 854902 ops/sec; 61.3 MB/s (355999 of 355999 found) 6.18: readrandom : 32.615 micros/op 735306 ops/sec; 52.7 MB/s (290999 of 290999 found) PR: readrandom : 27.500 micros/op 871909 ops/sec; 62.5 MB/s (367999 of 367999 found) (Note that the times for 6.18 are prior to revert of the SystemClock). Pull Request resolved: https://github.com/facebook/rocksdb/pull/8033 Reviewed By: pdillinger Differential Revision: D27014563 Pulled By: mrambacher fbshipit-source-id: ad0459eba03182e454391b5926bf5cdd45657b67

-

- 11 3月, 2021 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: This PR - adds a class `ManifestTailer` that inherits from `VersionEditHandlerPointInTime`. `ManifestTailer::Iterate()` can be called multiple times to tail the primary instance's MANIFEST and apply the changes to the secondary, - updates the implementation of `ReactiveVersionSet::ReadAndApply` to use this class, - removes unused code in version_set.cc, - updates existing tests, e.g. removing deleted sync points from unit tests, - adds a new test to address the bug in https://github.com/facebook/rocksdb/issues/7815. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7998 Test Plan: make check Existing and newly-added tests in version_set_test.cc and db_secondary_test.cc Reviewed By: jay-zhuang Differential Revision: D26926641 Pulled By: riversand963 fbshipit-source-id: 8d4dd15db0ba863c213f743e33b5a207e948c980

-

- 09 3月, 2021 1 次提交

-

-

由 fanrui03 提交于

Summary: ## 1. Bug description: When RocksDB Checkpoint, it may be stuck in `WaitUntilFlushWouldNotStallWrites` method. ## 2. Simple analysis of the reasons: ### 2.1 Configuration parameters: ```yaml Compaction Style : Universal max_write_buffer_number : 4 min_write_buffer_number_to_merge : 3 ``` Checkpoint is usually very fast. When the Checkpoint is executed, `WaitUntilFlushWouldNotStallWrites` is called. If there are 2 Immutable MemTables, which are less than `min_write_buffer_number_to_merge`, they will not be flushed. But will enter this code. ```c++ // method: GetWriteStallConditionAndCause if (mutable_cf_options.max_write_buffer_number> 3 && num_unflushed_memtables >= mutable_cf_options.max_write_buffer_number-1) { return {WriteStallCondition::kDelayed, WriteStallCause::kMemtableLimit}; } ``` code link: https://github.com/facebook/rocksdb/blob/fbed72f03c3d9e4fdca3e5993587ef2559ba6ab9/db/column_family.cc#L847 Checkpoint thought there was a FlushJob, but it didn't. So will always wait. ### 2.2 solution: Increase the restriction: the `number of Immutable MemTable` >= `min_write_buffer_number_to_merge will wait`. If there are other better solutions, you can correct me. ### 2.3 Code that can reproduce the problem: https://github.com/1996fanrui/fanrui-learning/blob/flink-1.12/module-java/src/main/java/com/dream/rocksdb/RocksDBCheckpointStuck.java ## 3. Interesting point This bug will be triggered only when `the number of sorted runs >= level0_file_num_compaction_trigger`. Because there is a break in WaitUntilFlushWouldNotStallWrites. ```c++ if (cfd->imm()->NumNotFlushed() < cfd->ioptions()->min_write_buffer_number_to_merge && vstorage->l0_delay_trigger_count() < mutable_cf_options.level0_file_num_compaction_trigger) { break; } ``` code link: https://github.com/facebook/rocksdb/blob/fbed72f03c3d9e4fdca3e5993587ef2559ba6ab9/db/db_impl/db_impl_compaction_flush.cc#L1974 Universal may have `l0_delay_trigger_count() >= level0_file_num_compaction_trigger`, so this bug is triggered. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7921 Reviewed By: jay-zhuang Differential Revision: D26900559 Pulled By: ajkr fbshipit-source-id: 133c1252dad7393753f04a47590b68c7d8e670df

-

- 20 2月, 2021 1 次提交

-

-

由 mrambacher 提交于

Fix handling of Mutable options; Allow DB::SetOptions to update mutable TableFactory Options (#7936) Summary: Added a "only_mutable_options" flag to the ConfigOptions. When set, the Configurable methods will only look at/update options that are marked as kMutable. Fixed DB::SetOptions to allow for the update of any mutable TableFactory options. Fixes https://github.com/facebook/rocksdb/issues/7385. Added tests for the new flag. Updated HISTORY.md Pull Request resolved: https://github.com/facebook/rocksdb/pull/7936 Reviewed By: akankshamahajan15 Differential Revision: D26389646 Pulled By: mrambacher fbshipit-source-id: 6dc247f6e999fa2814059ebbd0af8face109fea0

-

- 17 2月, 2021 1 次提交

-

-

由 Akanksha Mahajan 提交于

Summary: Add support for IOTracing in blob files Pull Request resolved: https://github.com/facebook/rocksdb/pull/7958 Test Plan: Add a new test and checked manually the trace_file for blob files being recorded during read and write. Reviewed By: ltamasi Differential Revision: D26415950 Pulled By: akankshamahajan15 fbshipit-source-id: 49c2859b3a4f8307e7cb69a92704403a4da46d44

-

- 26 1月, 2021 1 次提交

-

-

由 mrambacher 提交于

Summary: Introduces and uses a SystemClock class to RocksDB. This class contains the time-related functions of an Env and these functions can be redirected from the Env to the SystemClock. Many of the places that used an Env (Timer, PerfStepTimer, RepeatableThread, RateLimiter, WriteController) for time-related functions have been changed to use SystemClock instead. There are likely more places that can be changed, but this is a start to show what can/should be done. Over time it would be nice to migrate most (if not all) of the uses of the time functions from the Env to the SystemClock. There are several Env classes that implement these functions. Most of these have not been converted yet to SystemClock implementations; that will come in a subsequent PR. It would be good to unify many of the Mock Timer implementations, so that they behave similarly and be tested similarly (some override Sleep, some use a MockSleep, etc). Additionally, this change will allow new methods to be introduced to the SystemClock (like https://github.com/facebook/rocksdb/issues/7101 WaitFor) in a consistent manner across a smaller number of classes. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7858 Reviewed By: pdillinger Differential Revision: D26006406 Pulled By: mrambacher fbshipit-source-id: ed10a8abbdab7ff2e23d69d85bd25b3e7e899e90

-

- 24 12月, 2020 1 次提交

-

-

由 mrambacher 提交于

Summary: Added "no-elide-constructors to the ASSERT_STATUS_CHECK builds. This flag gives more errors/warnings for some of the Status checks where an inner class checks a Status and later returns it. In this case, without the elide check on, the returned status may not have been checked in the caller, thereby bypassing the checked code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7798 Reviewed By: jay-zhuang Differential Revision: D25680451 Pulled By: pdillinger fbshipit-source-id: c3f14ed9e2a13f0a8c54d839d5fb4d1fc1e93917

-

- 12 12月, 2020 1 次提交

-

-

由 Peter Dillinger 提交于

Summary: Uncommon bug seen by ASAN with ColumnFamilyTest.LiveIteratorWithDroppedColumnFamily, if the last two references to a ColumnFamilyData are both SuperVersions (during InstallSuperVersion). The fix is to use UnrefAndTryDelete even in SuperVersion::Cleanup but with a parameter to avoid re-entering Cleanup on the same SuperVersion being cleaned up. ColumnFamilyData::Unref is considered unsafe so removed. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7749 Test Plan: ./column_family_test --gtest_filter=*LiveIter* --gtest_repeat=100 Reviewed By: jay-zhuang Differential Revision: D25354304 Pulled By: pdillinger fbshipit-source-id: e78f3a3f67c40013b8432f31d0da8bec55c5321c

-

- 04 12月, 2020 1 次提交

-

-

由 Cheng Chang 提交于

Summary: When 2 phase commit is enabled, if there are prepared data in a WAL, the WAL should be kept, the minimum log number for such a WAL is written to MANIFEST during flush. In atomic flush, such information is not written to MANIFEST. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7570 Test Plan: Added a new unit test `DBAtomicFlushTest.ManualFlushUnder2PC`, this test fails in atomic flush without this PR, after this PR, it succeeds. Reviewed By: riversand963 Differential Revision: D24394222 Pulled By: cheng-chang fbshipit-source-id: 60ce74b21b704804943be40c8de01b41269cf116

-

- 24 11月, 2020 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: The patch adds basic garbage collection support to the integrated BlobDB implementation. Valid blobs residing in the oldest blob files are relocated as they are encountered during compaction. The threshold that determines which blob files qualify is computed based on the configuration option `blob_garbage_collection_age_cutoff`, which was introduced in https://github.com/facebook/rocksdb/issues/7661 . Once a blob is retrieved for the purposes of relocation, it passes through the same logic that extracts large values to blob files in general. This means that if, for instance, the size threshold for key-value separation (`min_blob_size`) got changed or writing blob files got disabled altogether, it is possible for the value to be moved back into the LSM tree. In particular, one way to re-inline all blob values if needed would be to perform a full manual compaction with `enable_blob_files` set to `false`, `enable_blob_garbage_collection` set to `true`, and `blob_file_garbage_collection_age_cutoff` set to `1.0`. Some TODOs that I plan to address in separate PRs: 1) We'll have to measure the amount of new garbage in each blob file and log `BlobFileGarbage` entries as part of the compaction job's `VersionEdit`. (For the time being, blob files are cleaned up solely based on the `oldest_blob_file_number` relationships.) 2) When compression is used for blobs, the compression type hasn't changed, and the blob still qualifies for being written to a blob file, we can simply copy the compressed blob to the new file instead of going through decompression and compression. 3) We need to update the formula for computing write amplification to account for the amount of data read from blob files as part of GC. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7694 Test Plan: `make check` Reviewed By: riversand963 Differential Revision: D25069663 Pulled By: ltamasi fbshipit-source-id: bdfa8feb09afcf5bca3b4eba2ba72ce2f15cd06a

-

- 29 10月, 2020 1 次提交

-

-

由 Ramkumar Vadivelu 提交于

Summary: Fixes Issue https://github.com/facebook/rocksdb/issues/7497 When allow_data_in_errors db_options is set, log error key details in `ParseInternalKey()` Have fixed most of the calls. Have few TODOs still pending - because have to make more deeper changes to pass in the allow_data_in_errors flag. Will do those in a separate PR later. Tests: - make check - some of the existing tests that exercise the "internal key too small" condition are: dbformat_test, cuckoo_table_builder_test - some of the existing tests that exercise the corrupted key path are: corruption_test, merge_helper_test, compaction_iterator_test Example of new status returns: - Key too small - `Corrupted Key: Internal Key too small. Size=5` - Corrupt key with allow_data_in_errors option set to false: `Corrupted Key: '<redacted>' seq:3, type:3` - Corrupt key with allow_data_in_errors option set to true: `Corrupted Key: '61' seq:3, type:3` Pull Request resolved: https://github.com/facebook/rocksdb/pull/7515 Reviewed By: ajkr Differential Revision: D24240264 Pulled By: ramvadiv fbshipit-source-id: bc48f5d4475ac19d7713e16df37505b31aac42e7

-

- 16 10月, 2020 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: The patch adds blob file support to the `Get` API by extending `Version` so that whenever a blob reference is read from a file, the blob is retrieved from the corresponding blob file and passed back to the caller. (This is assuming the blob reference is valid and the blob file is actually part of the given `Version`.) It also introduces a cache of `BlobFileReader`s called `BlobFileCache` that enables sharing `BlobFileReader`s between callers. `BlobFileCache` uses the same backing cache as `TableCache`, so `max_open_files` (if specified) limits the total number of open (table + blob) files. TODO: proactively open/cache blob files and pin the cache handles of the readers in the metadata objects similarly to what `VersionBuilder::LoadTableHandlers` does for table files. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7540 Test Plan: `make check` Reviewed By: riversand963 Differential Revision: D24260219 Pulled By: ltamasi fbshipit-source-id: a8a2a4f11d3d04d6082201b52184bc4d7b0857ba

-

- 01 10月, 2020 1 次提交

-

-

由 Ramkumar Vadivelu 提交于

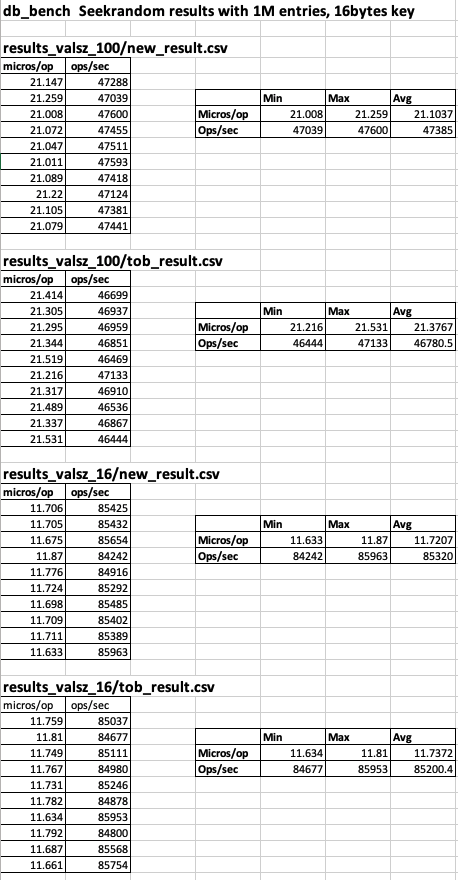

Summary: Fixes https://github.com/facebook/rocksdb/issues/7430 Change ParseInternalKey() to return Status instead of bool. db_bench (seekrandom) based before/after results with value size of 100 bytes and 16 bytes can be found at (tests ran on an udb server): https://www.dropbox.com/s/47bwamdy5ozngph/PIK_ret_Status_results.xlsx?dl=0  Pull Request resolved: https://github.com/facebook/rocksdb/pull/7457 Reviewed By: ajkr Differential Revision: D24002433 Pulled By: ramvadiv fbshipit-source-id: ac253ecf577a29044c47c3fe254a01e71404c44c

-

- 25 9月, 2020 1 次提交

-

-

由 Akanksha Mahajan 提交于

Summary: Fix few test cases and add them in ASSERT_STATUS_CHECKED build. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7427 Test Plan: 1. ASSERT_STATUS_CHECKED=1 make -j48 check, 2. travis build for ASSERT_STATUS_CHECKED, 3. Without ASSERT_STATUS_CHECKED: make check -j64, CircleCI build and travis build Reviewed By: pdillinger Differential Revision: D23909983 Pulled By: akankshamahajan15 fbshipit-source-id: 42d7e4aea972acb9fcddb7ca73fcb82f93272434

-

- 15 9月, 2020 1 次提交

-

-

由 mrambacher 提交于

Summary: This PR merges the functionality of making the ColumnFamilyOptions, TableFactory, and DBOptions into Configurable into a single PR, resolving any merge conflicts Pull Request resolved: https://github.com/facebook/rocksdb/pull/5753 Reviewed By: ajkr Differential Revision: D23385030 Pulled By: zhichao-cao fbshipit-source-id: 8b977a7731556230b9b8c5a081b98e49ee4f160a

-

- 28 8月, 2020 1 次提交

-

-

由 Akanksha Mahajan 提交于

Summary: Replace FSRandomAccessFile pointer with FSRandomAccessFilePtr object in RandomAccessFileReader. This new object wraps FSRandomAccessFile pointer. Objective: If tracing is enabled, FSRandomAccessFile Ptr returns FSRandomAccessFileTracingWrapper pointer that includes all necessary information in IORecord and calls underlying FileSystem and invokes IOTracer to dump that record in a binary file. If tracing is disabled then, underlying FileSystem pointer is returned directly. FSRandomAccessFilePtr wrapper class is added to bypass the FSRandomAccessFileWrapper when tracing is disabled. Test Plan: make check -j64 Pull Request resolved: https://github.com/facebook/rocksdb/pull/7192 Reviewed By: anand1976 Differential Revision: D23356867 Pulled By: akankshamahajan15 fbshipit-source-id: 48f31168166a17a7444b40be44a9a9d4a5c7182c

-

- 25 8月, 2020 1 次提交

-

-

由 Connor1996 提交于

Summary: After releasing a snapshot, it checks whether it is suitable to trigger bottom compactions. When disabling auto compactions, it may still schedule compaction when releasing a snapshot. Whereas no compaction job will be actually handled, so the state of LSM is not changed and compaction will be triggered again and again every time releasing a snapshot. Too frequent compactions lead to high CPU usage and high db_mutex lock contention which affects foreground write duration finally. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7267 Test Plan: - make check - manual test Reviewed By: akankshamahajan15 Differential Revision: D23252880 Pulled By: ajkr fbshipit-source-id: 4431e071a35d9912a2a3592875db27bae521434b

-

- 19 8月, 2020 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/7280 Test Plan: `make check` Reviewed By: riversand963 Differential Revision: D23195192 Pulled By: ltamasi fbshipit-source-id: 743b382de391963e62ba86119e9fbd0233ea3b3a

-

- 23 7月, 2020 1 次提交

-

-

由 Jay Zhuang 提交于

Summary: Make `max-subcompactions` dynamically changeable by passing the `DBOption` to Compaction. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7159 Reviewed By: siying Differential Revision: D22671238 Pulled By: jay-zhuang fbshipit-source-id: 311ca9f6bb606965544d8708616d358cfed5be42

-

- 09 7月, 2020 1 次提交

-

-

由 rockeet 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/7080 Reviewed By: riversand963 Differential Revision: D22412352 Pulled By: ajkr fbshipit-source-id: 1d7f4c1621040a0130245139b52c3f4d3deac865

-

- 03 7月, 2020 1 次提交

-

-

由 Jay Zhuang 提交于

Summary: Replace `reinterpret_cast` with `static_cast_with_check` for `DBImpl` and `ColumnFamilyHandleImpl`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7067 Reviewed By: siying Differential Revision: D22361587 Pulled By: jay-zhuang fbshipit-source-id: dfe9e8f3af39c3d27cc372c55ab9ad905eb0a5a1

-

- 21 3月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: There are situations when RocksDB tries to recover, but the db is in an inconsistent state due to SST files referenced in the MANIFEST being missing. In this case, previous RocksDB will just fail the recovery and return a non-ok status. This PR enables another possibility. During recovery, RocksDB checks possible MANIFEST files, and try to recover to the most recent state without missing table file. `VersionSet::Recover()` applies version edits incrementally and "materializes" a version only when this version does not reference any missing table file. After processing the entire MANIFEST, the version created last will be the latest version. `DBImpl::Recover()` calls `VersionSet::Recover()`. Afterwards, WAL replay will *not* be performed. To use this capability, set `options.best_efforts_recovery = true` when opening the db. Best-efforts recovery is currently incompatible with atomic flush. Test plan (on devserver): ``` $make check $COMPILE_WITH_ASAN=1 make all && make check ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/6334 Reviewed By: anand1976 Differential Revision: D19778960 Pulled By: riversand963 fbshipit-source-id: c27ea80f29bc952e7d3311ecf5ee9c54393b40a8

-

- 12 3月, 2020 1 次提交

-

-

由 Cheng Chang 提交于

Summary: In Linux, when reopening DB with many SST files, profiling shows that 100% system cpu time spent for a couple of seconds for `GetLogicalBufferSize`. This slows down MyRocks' recovery time when site is down. This PR introduces two new APIs: 1. `Env::RegisterDbPaths` and `Env::UnregisterDbPaths` lets `DB` tell the env when it starts or stops using its database directories . The `PosixFileSystem` takes this opportunity to set up a cache from database directories to the corresponding logical block sizes. 2. `LogicalBlockSizeCache` is defined only for OS_LINUX to cache the logical block sizes. Other modifications: 1. rename `logical buffer size` to `logical block size` to be consistent with Linux terms. 2. declare `GetLogicalBlockSize` in `PosixHelper` to expose it to `PosixFileSystem`. 3. change the functions `IOError` and `IOStatus` in `env/io_posix.h` to have external linkage since they are used in other translation units too. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6457 Test Plan: 1. A new unit test is added for `LogicalBlockSizeCache` in `env/io_posix_test.cc`. 2. A new integration test is added for `DB` operations related to the cache in `db/db_logical_block_size_cache_test.cc`. `make check` Differential Revision: D20131243 Pulled By: cheng-chang fbshipit-source-id: 3077c50f8065c0bffb544d8f49fb10bba9408d04

-

- 03 3月, 2020 1 次提交

-

-

由 Zhichao Cao 提交于

Summary: In the current code base, we can use Directory from Env to manage directory (e.g, Fsync()). The PR https://github.com/facebook/rocksdb/issues/5761 introduce the File System as a new Env API. So we further replace the Directory class in DB with FSDirectory such that we can have more IO information from IOStatus returned by FSDirectory. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6468 Test Plan: pass make asan_check Differential Revision: D20195261 Pulled By: zhichao-cao fbshipit-source-id: 93962cb9436852bfcfb76e086d9e7babd461cbe1

-

- 21 2月, 2020 1 次提交

-

-

由 sdong 提交于

Summary: When dynamically linking two binaries together, different builds of RocksDB from two sources might cause errors. To provide a tool for user to solve the problem, the RocksDB namespace is changed to a flag which can be overridden in build time. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6433 Test Plan: Build release, all and jtest. Try to build with ROCKSDB_NAMESPACE with another flag. Differential Revision: D19977691 fbshipit-source-id: aa7f2d0972e1c31d75339ac48478f34f6cfcfb3e

-

- 04 2月, 2020 1 次提交

-

-

由 sdong 提交于

Summary: A relatively recent regression causes for every CF, create and open directory is called for the DB directory, unless CF has a private directory. This doesn't scale well with large number of column families. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6358 Test Plan: Run all existing tests and see it pass. strace with db_bench --num_column_families and observe it doesn't open directory for number of column families. Differential Revision: D19675141 fbshipit-source-id: da01d9216f1dae3f03d4064fbd88ce71245bd9be

-

- 11 1月, 2020 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: unordered_write is incompatible with non-zero max_successive_merges. Although we check this at runtime, we currently don't prevent the user from setting this combination in options. This has led to stress tests to fail with this combination is tried in ::SetOptions. The patch fixes that and also reverts the changes performed by https://github.com/facebook/rocksdb/pull/6254, in which max_successive_merges was mistakenly declared incompatible with unordered_write. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6284 Differential Revision: D19356115 Pulled By: maysamyabandeh fbshipit-source-id: f06dadec777622bd75f267361c022735cf8cecb6

-

- 03 1月, 2020 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: allow_concurrent_memtable_write is incompatible with non-zero max_successive_merges. Although we check this at runtime, we currently don't prevent the user from setting this combination in options. This has led to stress tests to fail with this combination is tried in ::SetOptions. The patch fixes that. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6254 Differential Revision: D19265819 Pulled By: maysamyabandeh fbshipit-source-id: 47f2e2dc26fe0972c7152f4da15dadb9703f1179

-

- 19 12月, 2019 1 次提交

-

-

由 sdong 提交于

Summary: level passed into ColumnFamilyData::CalculateSSTWriteHint() can be smaller than base_level in current version, which would cause overflow. We see ubsan complains: db/compaction/compaction_job.cc:1511:39: runtime error: load of value 4294967295, which is not a valid value for type 'Env::WriteLifeTimeHint' and I hope this commit fixes it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6212 Test Plan: Run existing tests and see them to pass. Differential Revision: D19168442 fbshipit-source-id: bf8fd86f85478ecfa7556db46dc3242de8c83dc9

-

- 18 12月, 2019 1 次提交

-

-

由 解轶伦 提交于

Summary: I found that CleanupSuperVersion() may block Get() for 30ms+ (per MemTable is 256MB). Then I found "delete sv" in ~SuperVersion() takes the time. The backtrace looks like this DBImpl::GetImpl() -> DBImpl::ReturnAndCleanupSuperVersion() -> DBImpl::CleanupSuperVersion() : delete sv; -> ~SuperVersion() I think it's better to delete in a background thread, please review it。 Pull Request resolved: https://github.com/facebook/rocksdb/pull/6146 Differential Revision: D18972066 fbshipit-source-id: 0f7b0b70b9bb1e27ad6fc1c8a408fbbf237ae08c

-

- 14 12月, 2019 1 次提交

-

-

由 anand76 提交于

Summary: The current Env API encompasses both storage/file operations, as well as OS related operations. Most of the APIs return a Status, which does not have enough metadata about an error, such as whether its retry-able or not, scope (i.e fault domain) of the error etc., that may be required in order to properly handle a storage error. The file APIs also do not provide enough control over the IO SLA, such as timeout, prioritization, hinting about placement and redundancy etc. This PR separates out the file/storage APIs from Env into a new FileSystem class. The APIs are updated to return an IOStatus with metadata about the error, as well as to take an IOOptions structure as input in order to allow more control over the IO. The user can set both ```options.env``` and ```options.file_system``` to specify that RocksDB should use the former for OS related operations and the latter for storage operations. Internally, a ```CompositeEnvWrapper``` has been introduced that inherits from ```Env``` and redirects individual methods to either an ```Env``` implementation or the ```FileSystem``` as appropriate. When options are sanitized during ```DB::Open```, ```options.env``` is replaced with a newly allocated ```CompositeEnvWrapper``` instance if both env and file_system have been specified. This way, the rest of the RocksDB code can continue to function as before. This PR also ports PosixEnv to the new API by splitting it into two - PosixEnv and PosixFileSystem. PosixEnv is defined as a sub-class of CompositeEnvWrapper, and threading/time functions are overridden with Posix specific implementations in order to avoid an extra level of indirection. The ```CompositeEnvWrapper``` translates ```IOStatus``` return code to ```Status```, and sets the severity to ```kSoftError``` if the io_status is retryable. The error handling code in RocksDB can then recover the DB automatically. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5761 Differential Revision: D18868376 Pulled By: anand1976 fbshipit-source-id: 39efe18a162ea746fabac6360ff529baba48486f

-

- 13 12月, 2019 1 次提交

-

-

由 Jermy Li 提交于

Summary: It's easy to cause coredump when closing ColumnFamilyHandle with unreleased iterators, especially iterators release is controlled by java GC when using JNI. This patch fixed concurrent CF iteration and drop, we let iterators(actually SuperVersion) hold a ColumnFamilyData reference to prevent the CF from being released too early. fixed https://github.com/facebook/rocksdb/issues/5982 Pull Request resolved: https://github.com/facebook/rocksdb/pull/6147 Differential Revision: D18926378 fbshipit-source-id: 1dff6d068c603d012b81446812368bfee95a5e15

-

- 27 11月, 2019 1 次提交

-

-

由 sdong 提交于

Summary: By default options.ttl is disabled. We believe a better default will be 30 days, which means deleted data the database will be removed from SST files slightly after 30 days, for most of the cases. Make the default UINT64_MAX - 1 to indicate that it is not overridden by users. Change periodic_compaction_seconds to be UINT64_MAX - 1 to UINT64_MAX too to be consistent. Also fix a small bug in the previous periodic_compaction_seconds default code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6073 Test Plan: Add unit tests for it. Differential Revision: D18669626 fbshipit-source-id: 957cd4374cafc1557d45a0ba002010552a378cc8

-

- 23 11月, 2019 2 次提交

-

-

由 Sagar Vemuri 提交于

Summary: `options.ttl` is now supported in universal compaction, similar to how periodic compactions are implemented in PR https://github.com/facebook/rocksdb/issues/5970 . Setting `options.ttl` will simply set `options.periodic_compaction_seconds` to execute the periodic compactions code path. Discarded PR https://github.com/facebook/rocksdb/issues/4749 in lieu of this. This is a short term work-around/hack of falling back to periodic compactions when ttl is set. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6071 Test Plan: Added a unit test. Differential Revision: D18668336 Pulled By: sagar0 fbshipit-source-id: e75f5b81ba949f77ef9eff05e44bb1c757f58612

-

由 sdong 提交于

Summary: Previously, options.ttl cannot be set with options.max_open_files = -1, because it makes use of creation_time field in table properties, which is not available unless max_open_files = -1. With this commit, the information will be stored in manifest and when it is available, will be used instead. Note that, this change will break forward compatibility for release 5.1 and older. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6060 Test Plan: Extend existing test case to options.max_open_files != -1, and simulate backward compatility in one test case by forcing the value to be 0. Differential Revision: D18631623 fbshipit-source-id: 30c232a8672de5432ce9608bb2488ecc19138830

-

- 20 11月, 2019 1 次提交

-

-

由 Little-Wallace 提交于

Summary: ## Problem Description Our process was abort when it call `CheckConsistency`. And the information in `stderr` show that "`L0 files seqno 3001491972 3004797440 vs. 3002875611 3004524421` ". Here are the causes of the accident I investigated. * RocksDB will call `CheckConsistency` whenever `MANIFEST` file is update. It will check sequence number interval of every file, except files which were ingested. * When one file is ingested into RocksDB, it will be assigned the value of global sequence number, and the minimum and maximum seqno of this file are equal, which are both equal to global sequence number. * `CheckConsistency` determines whether the file is ingested by whether the smallest and largest seqno of an sstable file are equal. * If IntraL0Compaction picks one sst which was ingested just now and compacted it into another sst, the `smallest_seqno` of this new file will be smaller than his `largest_seqno`. * If more than one ingested file was ingested before memtable schedule flush, and they all compact into one new sstable file by `IntraL0Compaction`. The sequence interval of this new file will be included in the interval of the memtable. So `CheckConsistency` will return a `Corruption`. * If a sstable was ingested after the memtable was schedule to flush, which would assign a larger seqno to it than memtable. Then the file was compacted with other files (these files were all flushed before the memtable) in L0 into one file. This compaction start before the flush job of memtable start, but completed after the flush job finish. So this new file produced by the compaction (we call it s1) would have a larger interval of sequence number than the file produced by flush (we call it s2). **But there was still some data in s1 written into RocksDB before the s2, so it's possible that some data in s2 was cover by old data in s1.** Of course, it would also make a `Corruption` because of overlap of seqno. There is the relationship of the files: > s1.smallest_seqno < s2.smallest_seqno < s2.largest_seqno < s1.largest_seqno So I skip pick sst file which was ingested in function `FindIntraL0Compaction ` ## Reason Here is my bug report: https://github.com/facebook/rocksdb/issues/5913 There are two situations that can cause the check to fail. ### First situation: - First we ingest five external sst into Rocksdb, and they happened to be ingested in L0. and there had been some data in memtable, which make the smallest sequence number of memtable is less than which of sst that we ingest. - If there had been one compaction job which compacted sst from L0 to L1, `LevelCompactionPicker` would trigger a `IntraL0Compaction` which would compact this five sst from L0 to L0. We call this sst A, which was merged from five ingested sst. - Then some data was put into memtable, and memtable was flushed to L0. We called this sst B. - RocksDB check consistency , and find the `smallest_seqno` of B is less than that of A and crash. Because A was merged from five sst, the smallest sequence number of it was less than the biggest sequece number of itself, so RocksDB could not tell if A was produce by ingested. ### Secondary situaion - First we have flushed many sst in L0, we call them [s1, s2, s3]. - There is an immutable memtable request to be flushed, but because flush thread is busy, so it has not been picked. we call it m1. And at the moment, one sst is ingested into L0. We call it s4. Because s4 is ingested after m1 became immutable memtable, so it has a larger log sequence number than m1. - m1 is flushed in L0. because it is small, this flush job finish quickly. we call it s5. - [s1, s2, s3, s4] are compacted into one sst to L0, by IntraL0Compaction. We call it s6. - compacted 4@0 files to L0 - When s6 is added into manifest, the corruption happened. because the largest sequence number of s6 is equal to s4, and they are both larger than that of s5. But because s1 is older than m1, so the smallest sequence number of s6 is smaller than that of s5. - s6.smallest_seqno < s5.smallest_seqno < s5.largest_seqno < s6.largest_seqno Pull Request resolved: https://github.com/facebook/rocksdb/pull/5958 Differential Revision: D18601316 fbshipit-source-id: 5fe54b3c9af52a2e1400728f565e895cde1c7267

-

- 08 11月, 2019 1 次提交

-

-

由 sdong 提交于

Summary: Recently, periodic compaction got turned on by default for leveled compaction is compaction filter is used. Since periodic compaction is now supported in universal compaction too, we do the same default for universal now. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5994 Test Plan: Add a new unit test. Differential Revision: D18363744 fbshipit-source-id: 5093288ce990ee3cab0e44ffd92d8489fbcd6a48

-