- 11 2月, 2022 2 次提交

-

-

由 Levi Tamasi 提交于

Summary: Fixes a bug introduced in https://github.com/facebook/rocksdb/issues/9526 where we index one position past the end of a `vector`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9542 Test Plan: `make asan_check` Will add a unit test in a separate PR. Reviewed By: akankshamahajan15 Differential Revision: D34145825 Pulled By: ltamasi fbshipit-source-id: 4e87c948407dee489d669a3e41f59e2fcc1228d8

-

由 Hui Xiao 提交于

Summary: **Context:** `EventListenerTest.MultiCF` occasionally failed on TSAN data race as below: ``` WARNING: ThreadSanitizer: data race (pid=2047633) Read of size 8 at 0x7b6000001440 by main thread: #0 std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> >::size() const /usr/bin/../lib/gcc/x86_64-linux-gnu/9/../../../../include/c++/9/bits/stl_vector.h:916:40 (listener_test+0x52337c) https://github.com/facebook/rocksdb/issues/1 rocksdb::EventListenerTest_MultiCF_Test::TestBody() /home/circleci/project/db/listener_test.cc:384:7 (listener_test+0x52337c) Previous write of size 8 at 0x7b6000001440 by thread T2: #0 void std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> >::_M_realloc_insert<rocksdb::DB* const&>(__gnu_cxx::__normal_iterator<rocksdb::DB**, std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> > >, rocksdb::DB* const&) /usr/bin/../lib/gcc/x86_64-linux-gnu/9/../../../../include/c++/9/bits/vector.tcc:503:31 (listener_test+0x550654) https://github.com/facebook/rocksdb/issues/1 std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> >::push_back(rocksdb::DB* const&) /usr/bin/../lib/gcc/x86_64-linux-gnu/9/../../../../include/c++/9/bits/stl_vector.h:1195:4 (listener_test+0x550654) https://github.com/facebook/rocksdb/issues/2 rocksdb::TestFlushListener::OnFlushCompleted(rocksdb::DB*, rocksdb::FlushJobInfo const&) /home/circleci/project/db/listener_test.cc:255:18 (listener_test+0x550654) ``` After investigation, it is due to the following: (1) `ASSERT_OK(Flush(i));` before the read `std::vector::size()` is supposed to be [blocked on `DB::Impl::bg_cv_` for memtable flush to finish](https://github.com/facebook/rocksdb/blob/320d9a8e8a1b6998f92934f87fc71ad8bd6d4596/db/db_impl/db_impl_compaction_flush.cc#L2319) and get signaled [at the end of background flush ](https://github.com/facebook/rocksdb/blob/320d9a8e8a1b6998f92934f87fc71ad8bd6d4596/db/db_impl/db_impl_compaction_flush.cc#L2830), which happens after the write `std::vector::push_back()` . So the sequence of execution should have been synchronized as `call flush() -> write -> return from flush() -> read` and would not cause any TSAN data race. - The subsequent `ASSERT_OK(dbfull()->TEST_WaitForFlushMemTable());` serves a similar purpose based on [the previous attempt to deflake the test.](https://github.com/facebook/rocksdb/pull/9084) (2) However, there are multiple places in the code can signal this `DB::Impl::bg_cv_` and mistakenly wake up `ASSERT_OK(Flush(i));` (or `ASSERT_OK(dbfull()->TEST_WaitForFlushMemTable());`) too early (and with the lock available to them), resulting in non-synchronized read and write thus a TSAN data race. - Reproduced by the following, suggested by ajkr: ``` diff --git a/db/db_impl/db_impl_compaction_flush.cc b/db/db_impl/db_impl_compaction_flush.cc index 4ff87c1e4..52492e9cf 100644 --- a/db/db_impl/db_impl_compaction_flush.cc +++ b/db/db_impl/db_impl_compaction_flush.cc @@ -22,7 +22,7 @@ #include "test_util/sync_point.h" #include "util/cast_util.h" #include "util/concurrent_task_limiter_impl.h" namespace ROCKSDB_NAMESPACE { bool DBImpl::EnoughRoomForCompaction( @@ -855,6 +855,7 @@ void DBImpl::NotifyOnFlushCompleted( mutable_cf_options.level0_stop_writes_trigger); // release lock while notifying events mutex_.Unlock(); + bg_cv_.SignalAll(); ``` **Summary:** - Added synchornization between read and write by ` ROCKSDB_NAMESPACE::SyncPoint::GetInstance()->LoadDependency()` mechanism Pull Request resolved: https://github.com/facebook/rocksdb/pull/9528 Test Plan: `./listener_test --gtest_filter=EventListenerTest.MultiCF --gtest_repeat=10` - pre-fix: ``` Repeating all tests (iteration 3) Note: Google Test filter = EventListenerTest.MultiCF [==========] Running 1 test from 1 test case. [----------] Global test environment set-up. [----------] 1 test from EventListenerTest [ RUN ] EventListenerTest.MultiCF ================== WARNING: ThreadSanitizer: data race (pid=3377137) Read of size 8 at 0x7b6000000840 by main thread: #0 std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> >::size() https://github.com/facebook/rocksdb/issues/1 rocksdb::EventListenerTest_MultiCF_Test::TestBody() db/listener_test.cc:384 (listener_test+0x4bb300) Previous write of size 8 at 0x7b6000000840 by thread T2: #0 void std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> >::_M_realloc_insert<rocksdb::DB* const&>(__gnu_cxx::__normal_iterator<rocksdb::DB**, std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> > >, rocksdb::DB* const&) https://github.com/facebook/rocksdb/issues/1 std::vector<rocksdb::DB*, std::allocator<rocksdb::DB*> >::push_back(rocksdb::DB* const&) https://github.com/facebook/rocksdb/issues/2 rocksdb::TestFlushListener::OnFlushCompleted(rocksdb::DB*, rocksdb::FlushJobInfo const&) db/listener_test.cc:255 (listener_test+0x4e820f) ``` - post-fix: `All passed` Reviewed By: ajkr Differential Revision: D34085791 Pulled By: hx235 fbshipit-source-id: f877aa687ea1d5cb6f31ef8c4772625d22868e8b

-

- 10 2月, 2022 3 次提交

-

-

由 Levi Tamasi 提交于

Summary: The patch replaces `std::map` with a sorted `std::vector` for `VersionStorageInfo::blob_files_` and preallocates the space for the `vector` before saving the `BlobFileMetaData` into the new `VersionStorageInfo` in `VersionBuilder::Rep::SaveBlobFilesTo`. These changes reduce the time the DB mutex is held while saving new `Version`s, and using a sorted `vector` also makes lookups faster thanks to better memory locality. In addition, the patch introduces helper methods `VersionStorageInfo::GetBlobFileMetaData` and `VersionStorageInfo::GetBlobFileMetaDataLB` that can be used by clients to perform lookups in the `vector`, and does some general cleanup in the parts of code where blob file metadata are used. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9526 Test Plan: Ran `make check` and the crash test script for a while. Performance was tested using a load-optimized benchmark (`fillseq` with vector memtable, no WAL) and small file sizes so that a significant number of files are produced: ``` numactl --interleave=all ./db_bench --benchmarks=fillseq --allow_concurrent_memtable_write=false --level0_file_num_compaction_trigger=4 --level0_slowdown_writes_trigger=20 --level0_stop_writes_trigger=30 --max_background_jobs=8 --max_write_buffer_number=8 --db=/data/ltamasi-dbbench --wal_dir=/data/ltamasi-dbbench --num=800000000 --num_levels=8 --key_size=20 --value_size=400 --block_size=8192 --cache_size=51539607552 --cache_numshardbits=6 --compression_max_dict_bytes=0 --compression_ratio=0.5 --compression_type=lz4 --bytes_per_sync=8388608 --cache_index_and_filter_blocks=1 --cache_high_pri_pool_ratio=0.5 --benchmark_write_rate_limit=0 --write_buffer_size=16777216 --target_file_size_base=16777216 --max_bytes_for_level_base=67108864 --verify_checksum=1 --delete_obsolete_files_period_micros=62914560 --max_bytes_for_level_multiplier=8 --statistics=0 --stats_per_interval=1 --stats_interval_seconds=20 --histogram=1 --memtablerep=skip_list --bloom_bits=10 --open_files=-1 --subcompactions=1 --compaction_style=0 --min_level_to_compress=3 --level_compaction_dynamic_level_bytes=true --pin_l0_filter_and_index_blocks_in_cache=1 --soft_pending_compaction_bytes_limit=167503724544 --hard_pending_compaction_bytes_limit=335007449088 --min_level_to_compress=0 --use_existing_db=0 --sync=0 --threads=1 --memtablerep=vector --allow_concurrent_memtable_write=false --disable_wal=1 --enable_blob_files=1 --blob_file_size=16777216 --min_blob_size=0 --blob_compression_type=lz4 --enable_blob_garbage_collection=1 --seed=<some value> ``` Final statistics before the patch: ``` Cumulative writes: 0 writes, 700M keys, 0 commit groups, 0.0 writes per commit group, ingest: 284.62 GB, 121.27 MB/s Interval writes: 0 writes, 334K keys, 0 commit groups, 0.0 writes per commit group, ingest: 139.28 MB, 72.46 MB/s ``` With the patch: ``` Cumulative writes: 0 writes, 760M keys, 0 commit groups, 0.0 writes per commit group, ingest: 308.66 GB, 131.52 MB/s Interval writes: 0 writes, 445K keys, 0 commit groups, 0.0 writes per commit group, ingest: 185.35 MB, 93.15 MB/s ``` Total time to complete the benchmark is 2611 seconds with the patch, down from 2986 secs. Reviewed By: riversand963 Differential Revision: D34082728 Pulled By: ltamasi fbshipit-source-id: fc598abf676dce436734d06bb9d2d99a26a004fc

-

由 Alan Paxton 提交于

Summary: For RocksDB 7. Remove deprecated dispose() And as a consequence remove finalize(), which is good Modern Java hygiene. It is extremely non-deterministic when `finalize()` is called on an object, and resource closure/recovery of underlying native/C++ objects and/or non-memory resource cannot be adequately controlled through GC finalization. The RocksDB Java/JNI interface provides and encourages the use of AutoCloseable objects with close() methods, allowing predictable disposal of resources at exit from try-with-resource blocks. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9523 Reviewed By: mrambacher Differential Revision: D34079843 Pulled By: jay-zhuang fbshipit-source-id: d1f0463a89a548b5d57bfaa50154379e722d189a

-

由 Yanqin Jin 提交于

Summary: The keys as part of write batch read from trace file can contain trailing timestamps. This PR removes them before calling `ExpectedState`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9525 Test Plan: make check make crash_test_with_ts Reviewed By: ajkr Differential Revision: D34082358 Pulled By: riversand963 fbshipit-source-id: 78c925659e2a19e4a8278fb4a8ddf5070e265c04

-

- 09 2月, 2022 5 次提交

-

-

由 Akanksha Mahajan 提交于

Summary: In RocksDB option new_table_reader_for_compaction_inputs has not effect on Compaction or on the behavior of RocksDB library. Therefore, we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9443 Test Plan: CircleCI Reviewed By: ajkr Differential Revision: D33788508 Pulled By: akankshamahajan15 fbshipit-source-id: 324ca6f12bfd019e9bd5e1b0cdac39be5c3cec7d

-

由 Levi Tamasi 提交于

Summary: ... since it was only necessary to work around a bug on certain Ubuntu 16.04 images (and we now use 20.04 across the board). Pull Request resolved: https://github.com/facebook/rocksdb/pull/9531 Test Plan: Watch CI. Reviewed By: ajkr Differential Revision: D34089424 Pulled By: ltamasi fbshipit-source-id: f15f86332c119099f61b9bdc74604657fc5d964e

-

由 Peter Dillinger 提交于

Summary: * Inefficient block-based filter is no longer customizable in the public API, though (for now) can still be enabled. * Removed deprecated FilterPolicy::CreateFilter() and FilterPolicy::KeyMayMatch() * Removed `rocksdb_filterpolicy_create()` from C API * Change meaning of nullptr return from GetBuilderWithContext() from "use block-based filter" to "generate no filter in this case." This is a cleaner solution to the proposal in https://github.com/facebook/rocksdb/issues/8250. * Also, when user specifies bits_per_key < 0.5, we now round this down to "no filter" because we expect a filter with >= 80% FP rate is unlikely to be worth the CPU cost of accessing it (esp with cache_index_and_filter_blocks=1 or partition_filters=1). * bits_per_key >= 0.5 and < 1.0 is still rounded up to 1.0 (for 62% FP rate) * This also gives us some support for configuring filters from OPTIONS file as currently saved: `filter_policy=rocksdb.BuiltinBloomFilter`. Opening from such an options file will enable reading filters (an improvement) but not writing new ones. (See Customizable follow-up below.) * Also removed deprecated functions * FilterBitsBuilder::CalculateNumEntry() * FilterPolicy::GetFilterBitsBuilder() * NewExperimentalRibbonFilterPolicy() * Remove default implementations of * FilterBitsBuilder::EstimateEntriesAdded() * FilterBitsBuilder::ApproximateNumEntries() * FilterPolicy::GetBuilderWithContext() * Remove support for "filter_policy=experimental_ribbon" configuration string. * Allow "filter_policy=bloomfilter:n" without bool to discourage use of block-based filter. Some pieces for https://github.com/facebook/rocksdb/issues/9389 Likely follow-up (later PRs): * Refactoring toward FilterPolicy Customizable, so that we can generate filters with same configuration as before when configuring from options file. * Remove support for user enabling block-based filter (ignore `bool use_block_based_builder`) * Some months after this change, we could even remove read support for block-based filter, because it is not critical to DB data preservation. * Make FilterBitsBuilder::FinishV2 to avoid `using FilterBitsBuilder::Finish` mess and add support for specifying a MemoryAllocator (for cache warming) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9501 Test Plan: A number of obsolete tests deleted and new tests or test cases added or updated. Reviewed By: hx235 Differential Revision: D34008011 Pulled By: pdillinger fbshipit-source-id: a39a720457c354e00d5b59166b686f7f59e392aa

-

由 Akanksha Mahajan 提交于

Summary: Add releases till 6.29.fb to compatibility check for forward and backward compatibility Pull Request resolved: https://github.com/facebook/rocksdb/pull/9529 Test Plan: run locally Reviewed By: hx235 Differential Revision: D34086063 Pulled By: akankshamahajan15 fbshipit-source-id: 4ccff513c99cf2d0e41da0b76ab27ffcfdffe7df

-

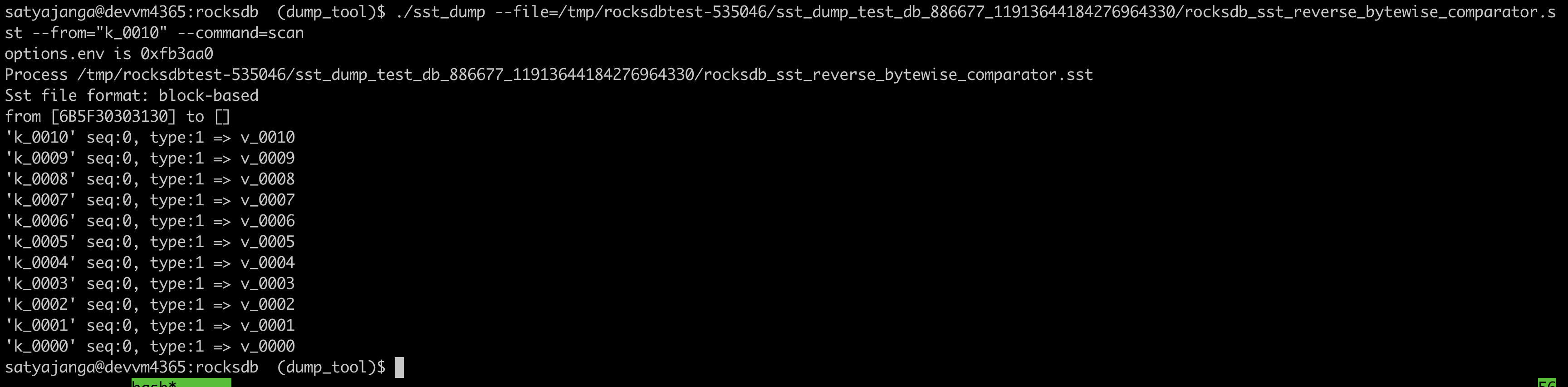

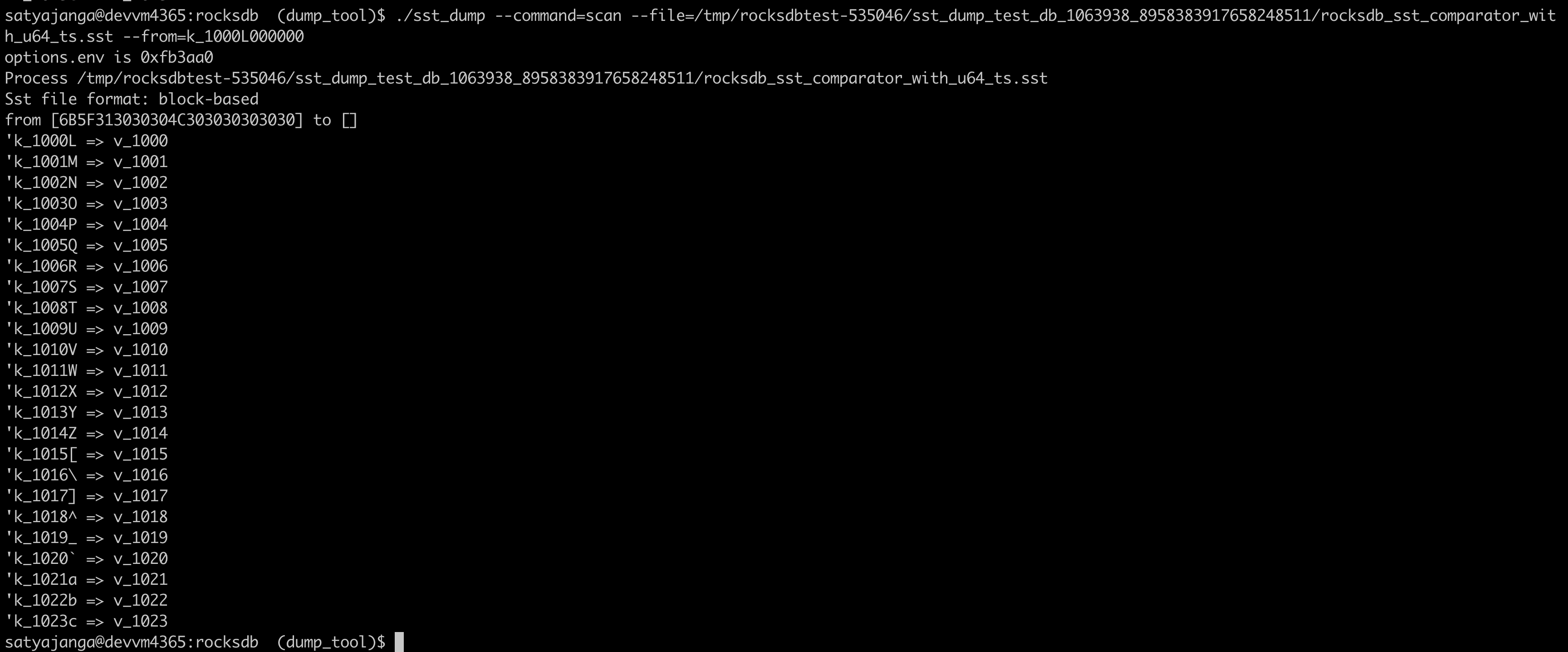

由 satyajanga 提交于

Summary: We introduced a new Comparator for timestamp in user keys. In the sst_dump_tool by default we use BytewiseComparator to read sst files. This change allows us to read comparator_name from table properties in meta data block and use it to read. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9491 Test Plan: added unittests for new functionality. make check   Reviewed By: riversand963 Differential Revision: D33993614 Pulled By: satyajanga fbshipit-source-id: 4b5cf938e6d2cb3931d763bef5baccc900b8c536

-

- 08 2月, 2022 9 次提交

-

-

由 Peter Dillinger 提交于

Summary: After https://github.com/facebook/rocksdb/issues/9481, we are using newer default compiler for build-format-compatible CircleCI nightly job, which fails on building 2.2.fb.branch branch because it tries to use a pre-compiled libsnappy.a that is checked into the repo (!). This works around that by setting SNAPPY_LDFLAGS=-lsnappy, which is only understood by such old versions. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9517 Test Plan: Run check_format_compatible.sh on Ubuntu 20 AWS machine, watch nightly run Reviewed By: hx235 Differential Revision: D34055561 Pulled By: pdillinger fbshipit-source-id: 45f9d428dd082f026773bfa8d9dd4dad66fc9378

-

由 Peter Dillinger 提交于

Summary: ... seen only in internal clang-analyze runs after https://github.com/facebook/rocksdb/issues/9481 * Mostly, this works around falsely reported leaks by using std::unique_ptr in some places where clang-analyze was getting confused. (I didn't see any changes in C++17 that could make our Status implementation leak memory.) * Also fixed SetBGError returning address of a stack variable. * Also fixed another false null deref report by adding an assert. Also, use SKIP_LINK=1 to speed up `make analyze` Pull Request resolved: https://github.com/facebook/rocksdb/pull/9515 Test Plan: Was able to reproduce the reported errors locally and verify they're fixed (except SetBGError). Otherwise, existing tests Reviewed By: hx235 Differential Revision: D34054630 Pulled By: pdillinger fbshipit-source-id: 38600ef3da75ddca307dff96b7a1a523c2885c2e

-

由 Akanksha Mahajan 提交于

Summary: In RocksDB few overloads of DB::GetApproximateSizes are marked as DEPRECATED_FUNC, and we are removing it in the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9458 Test Plan: CircleCI Reviewed By: riversand963 Differential Revision: D34043791 Pulled By: akankshamahajan15 fbshipit-source-id: 815c0ad283a6627c4b241479c7d40ce03a758493

-

由 Peter Dillinger 提交于

Summary: For tiered storage Pull Request resolved: https://github.com/facebook/rocksdb/pull/9498 Test Plan: Just API placeholders for now Reviewed By: jay-zhuang Differential Revision: D33993094 Pulled By: pdillinger fbshipit-source-id: 3cf19a450c7232e05306e94018559b26e9fd35db

-

由 Levi Tamasi 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9513 Reviewed By: riversand963 Differential Revision: D34046181 Pulled By: ltamasi fbshipit-source-id: a5d8d3bf84e5c13bdc6cbd5ba1b4216bad9adfc5

-

由 Hui Xiao 提交于

Summary: **Context:** Google benchmark [v1.6.0](https://github.com/google/benchmark/releases/tag/v1.6.0) introduced a breaking change "`introduce accessorrs for public data members (https://github.com/google/benchmark/pull/1208)`" that will fail RocksDB build of microbench developed based on previous code. For example, https://github.com/facebook/rocksdb/issues/9489. **Summary:** Clarify the maximum version of Google benchmark needed. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9505 Test Plan: CI Reviewed By: ajkr Differential Revision: D34023447 Pulled By: hx235 fbshipit-source-id: 0128ffc31485f2d752ab2116771f6ae53231fcd7

-

由 Peter Dillinger 提交于

Summary: Due to some unexplained errors with gcc-7 ``` Assembler messages: Error: invalid switch -march=z14 Error: unrecognized option -march=z14 ``` Relevant to https://github.com/facebook/rocksdb/issues/9388 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9512 Test Plan: CI Reviewed By: hx235 Differential Revision: D34044989 Pulled By: pdillinger fbshipit-source-id: a5406e8f30b2b187949f75c8cee4e2a0eb976670

-

由 Levi Tamasi 提交于

Summary: The patch builds on the refactoring done in https://github.com/facebook/rocksdb/issues/9494 and improves the performance of building the hash of file locations in `VersionStorageInfo` in two ways. First, the hash building is moved from `AddFile` (which is called under the DB mutex) to a separate post-processing step done as part of `PrepareForVersionAppend` (during which the mutex is *not* held). Second, the space necessary for the hash is preallocated to prevent costly reallocation/rehashing operations. These changes mitigate the overhead of the file location hash, which can be significant with certain workloads where the baseline CPU usage is low (see https://github.com/facebook/rocksdb/issues/9351, which is a workload where keys are sorted, WAL is turned off, the vector memtable implementation is used, and there are lots of small SST files). Fixes https://github.com/facebook/rocksdb/issues/9351 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9504 Test Plan: `make check` ``` numactl --interleave=all ./db_bench --benchmarks=fillseq --allow_concurrent_memtable_write=false --level0_file_num_compaction_trigger=4 --level0_slowdown_writes_trigger=20 --level0_stop_writes_trigger=30 --max_background_jobs=8 --max_write_buffer_number=8 --db=/data/ltamasi-dbbench --wal_dir=/data/ltamasi-dbbench --num=800000000 --num_levels=8 --key_size=20 --value_size=400 --block_size=8192 --cache_size=51539607552 --cache_numshardbits=6 --compression_max_dict_bytes=0 --compression_ratio=0.5 --compression_type=lz4 --bytes_per_sync=8388608 --cache_index_and_filter_blocks=1 --cache_high_pri_pool_ratio=0.5 --benchmark_write_rate_limit=0 --write_buffer_size=16777216 --target_file_size_base=16777216 --max_bytes_for_level_base=67108864 --verify_checksum=1 --delete_obsolete_files_period_micros=62914560 --max_bytes_for_level_multiplier=8 --statistics=0 --stats_per_interval=1 --stats_interval_seconds=20 --histogram=1 --bloom_bits=10 --open_files=-1 --subcompactions=1 --compaction_style=0 --level_compaction_dynamic_level_bytes=true --pin_l0_filter_and_index_blocks_in_cache=1 --soft_pending_compaction_bytes_limit=167503724544 --hard_pending_compaction_bytes_limit=335007449088 --min_level_to_compress=0 --use_existing_db=0 --sync=0 --threads=1 --memtablerep=vector --disable_wal=1 --seed=<some_seed> ``` Final statistics before this patch: ``` Cumulative writes: 0 writes, 697M keys, 0 commit groups, 0.0 writes per commit group, ingest: 283.25 GB, 241.08 MB/s Interval writes: 0 writes, 1264K keys, 0 commit groups, 0.0 writes per commit group, ingest: 525.69 MB, 176.67 MB/s ``` With the patch: ``` Cumulative writes: 0 writes, 759M keys, 0 commit groups, 0.0 writes per commit group, ingest: 308.57 GB, 262.63 MB/s Interval writes: 0 writes, 1555K keys, 0 commit groups, 0.0 writes per commit group, ingest: 646.61 MB, 215.11 MB/s ``` Reviewed By: riversand963 Differential Revision: D34014734 Pulled By: ltamasi fbshipit-source-id: acb2703677451d5ccaa7e9d950844b33d240695b

-

由 Jay Zhuang 提交于

Summary: Thread-pool pops a thread function and then run the function, which may cause thread-pool is empty but the last function is still running. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9502 Test Plan: `gtest-parallel ./env_test --gtest_filter=DefaultEnvWithoutDirectIO/EnvPosixTestWithParam.RunMany/0 -r 10000 -w 1000` Reviewed By: ajkr Differential Revision: D34011184 Pulled By: jay-zhuang fbshipit-source-id: 8c38bef155205bef96fd1c988dcc643a6b2ac270

-

- 07 2月, 2022 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: Ubuntu 16.04 has reached EOL. The patch upgrades the image for all of our CircleCI jobs to the latest, namely `ubuntu-2004:202111-02`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9486 Test Plan: Watch the CI build results. Reviewed By: ajkr Differential Revision: D34029339 Pulled By: ltamasi fbshipit-source-id: a266b631c04d227fe29b8156be61229605eb9dd7

-

- 05 2月, 2022 6 次提交

-

-

由 Peter Dillinger 提交于

Summary: Drop support for some old compilers by requiring C++17 standard (or higher). See https://github.com/facebook/rocksdb/issues/9388 First modification based on this is to remove some conditional compilation in slice.h (also better for ODR) Also in this PR: * Fix some Makefile formatting that seems to affect ASSERT_STATUS_CHECKED config in some cases * Add c_test to NON_PARALLEL_TEST in Makefile * Fix a clang-analyze reported "potential leak" in lru_cache_test * Better "compatibility" definition of DEFINE_uint32 for old versions of gflags * Fix a linking problem with shared libraries in Makefile (`./random_test: error while loading shared libraries: librocksdb.so.6.29: cannot open shared object file: No such file or directory`) * Always set ROCKSDB_SUPPORT_THREAD_LOCAL and use thread_local (from C++11) * TODO in later PR: clean up that obsolete flag * Fix a cosmetic typo in c.h (https://github.com/facebook/rocksdb/issues/9488) Pull Request resolved: https://github.com/facebook/rocksdb/pull/9481 Test Plan: CircleCI config substantially updated. * Upgrade to latest Ubuntu images for each release * Generally prefer Ubuntu 20, but keep a couple Ubuntu 16 builds with oldest supported compilers, to ensure compatibility * Remove .circleci/cat_ignore_eagain except for Ubuntu 16 builds, because this is to work around a kernel bug that should not affect anything but Ubuntu 16. * Remove designated gcc-9 build, because the default linux build now uses GCC 9 from Ubuntu 20. * Add some `apt-key add` to fix some apt "couldn't be verified" errors * Generally drop SKIP_LINK=1; work-around no longer needed * Generally `add-apt-repository` before `apt-get update` as manual testing indicated the reverse might not work. Travis: * Use gcc-7 by default (remove specific gcc-7 and gcc-4.8 builds) * TODO in later PR: fix s390x "Assembler messages: Error: invalid switch -march=z14" failure AppVeyor: * Completely dropped because we are dropping VS2015 support and CircleCI covers VS >= 2017 Also local testing with old gflags (out of necessity when using ROCKSDB_NO_FBCODE=1). Reviewed By: mrambacher Differential Revision: D33946377 Pulled By: pdillinger fbshipit-source-id: ae077c823905b45370a26c0103ada119459da6c1

-

由 Radek Hubner 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9295 Reviewed By: riversand963 Differential Revision: D33672440 Pulled By: ajkr fbshipit-source-id: 85f73a9297888b00255b636e7826b37186aba45c

-

由 Si Ke 提交于

Summary: Fixed all RocksJava test failures in Centos and Alpine 32 bit and 64 bit OSes Pull Request resolved: https://github.com/facebook/rocksdb/pull/9395 Reviewed By: mrambacher Differential Revision: D33771987 Pulled By: ajkr fbshipit-source-id: fed91033b8df08f191ad65e1fb745a9264bbfa70

-

由 Jermy Li 提交于

Summary: refer to: https://github.com/facebook/rocksdb/wiki/Prefix-Seek#configure-prefix-bloom-filter Pull Request resolved: https://github.com/facebook/rocksdb/pull/9394 Reviewed By: mrambacher Differential Revision: D33671533 Pulled By: ajkr fbshipit-source-id: d90db1712efdd5dd65020329867381d6b3cf2626

-

由 Peter Dillinger 提交于

Summary: Follow-up to https://github.com/facebook/rocksdb/issues/9126 Added new unit tests to validate some of the claims of guaranteed uniqueness within certain large bounds. Also cleaned up the cache_bench -stress-cache-key tool with better comments and description. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9329 Test Plan: no changes to production code Reviewed By: mrambacher Differential Revision: D33269328 Pulled By: pdillinger fbshipit-source-id: 3a2b684a6b2b15f79dc872e563e3d16563be26de

-

由 Levi Tamasi 提交于

Summary: The patch does some cleanup in and around `VersionStorageInfo`: * Renames the method `PrepareApply` to `PrepareAppend` in `Version` to make it clear that it is to be called before appending the `Version` to `VersionSet` (via `AppendVersion`), not before applying any `VersionEdit`s. * Introduces a helper method `VersionStorageInfo::PrepareForVersionAppend` (called by `Version::PrepareAppend`) that encapsulates the population of the various derived data structures in `VersionStorageInfo`, and turns the methods computing the derived structures (`UpdateNumNonEmptyLevels`, `CalculateBaseBytes` etc.) into private helpers. * Changes `Version::PrepareAppend` so it only calls `UpdateAccumulatedStats` if the `update_stats` flag is set. (Earlier, this was checked by the callee.) Related to this, it also moves the call to `ComputeCompensatedSizes` to `VersionStorageInfo::PrepareForVersionAppend`. * Updates and cleans up `version_builder_test`, `version_set_test`, and `compaction_picker_test` so `PrepareForVersionAppend` is called anytime a new `VersionStorageInfo` is set up or saved. This cleanup also involves splitting `VersionStorageInfoTest.MaxBytesForLevelDynamic` into multiple smaller test cases. * Fixes up a bunch of comments that were outdated or just plain incorrect. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9494 Test Plan: Ran `make check` and the crash test script for a while. Reviewed By: riversand963 Differential Revision: D33971666 Pulled By: ltamasi fbshipit-source-id: fda52faac7783041126e4f8dec0fe01bdcadf65a

-

- 04 2月, 2022 4 次提交

-

-

由 Baptiste Lemaire 提交于

Summary: In RocksDB, this option was already marked as "NOT SUPPORTED" for a long time, and setting this option does not have any effect on the behavior of RocksDB library. Therefore, we are removing it in the preparations of the upcoming 7.0 release. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9446 Reviewed By: ajkr Differential Revision: D33793048 Pulled By: bjlemaire fbshipit-source-id: 73316efdb194e90225005246673dae99e65577ae

-

由 Yanqin Jin 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/9497 Reviewed By: ajkr Differential Revision: D33990256 Pulled By: riversand963 fbshipit-source-id: 268ce16b037e23e42b14fa0fcb45535582e1a0d6

-

由 Yanqin Jin 提交于

Summary: I feel it would be nice if we can fix this spelling error. In `SizeApproximationOptions`, the `include_memtabtles` should be `include_memtables`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9490 Test Plan: make check Reviewed By: hx235 Differential Revision: D33949862 Pulled By: riversand963 fbshipit-source-id: b2be67501b65d4aabb6b8df1bf25eb8d54cc1466

-

由 mrambacher 提交于

Summary: Added a CountedFileSystem that tracks a number of file operations (opens, closes, deletes, renames, flushes, syncs, fsyncs, reads, writes). This class was based on the ReportFileOpEnv from db_bench. This is a stepping stone PR to be able to change the SpecialEnv into a SpecialFileSystem, where several of the file varieties wish to do operation counting. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9283 Reviewed By: pdillinger Differential Revision: D33062004 Pulled By: mrambacher fbshipit-source-id: d0d297a7fb9c48c06cbf685e5fa755c27193b6f5

-

- 03 2月, 2022 2 次提交

-

-

由 Hui Xiao 提交于

Reviewed By: ajkr Differential Revision: D33962843 fbshipit-source-id: 9c4e4c46403e50549d341237bae0f495b26c5613

-

由 anand76 提交于

Summary: Remove default implementation of Name(), which is an abstract method inherited from Customizable, from FileSystemWrapper. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9474 Reviewed By: pdillinger Differential Revision: D33896455 Pulled By: anand1976 fbshipit-source-id: bc3df3bc0cec580cf63c60a52c344f23ca651102

-

- 02 2月, 2022 8 次提交

-

-

由 Yanqin Jin 提交于

Summary: ajkr reminded me that we have a rule of not including per-kv related data in `WriteOptions`. Namely, `WriteOptions` should not include information about "what-to-write", but should just include information about "how-to-write". According to this rule, `WriteOptions::timestamp` (experimental) is clearly a violation. Therefore, this PR removes `WriteOptions::timestamp` for compliance. After the removal, we need to pass timestamp info via another set of APIs. This PR proposes a set of overloaded functions `Put(write_opts, key, value, ts)`, `Delete(write_opts, key, ts)`, and `SingleDelete(write_opts, key, ts)`. Planned to add `Write(write_opts, batch, ts)`, but its complexity made me reconsider doing it in another PR (maybe). For better checking and returning error early, we also add a new set of APIs to `WriteBatch` that take extra `timestamp` information when writing to `WriteBatch`es. These set of APIs in `WriteBatchWithIndex` are currently not supported, and are on our TODO list. Removed `WriteBatch::AssignTimestamps()` and renamed `WriteBatch::AssignTimestamp()` to `WriteBatch::UpdateTimestamps()` since this method require that all keys have space for timestamps allocated already and multiple timestamps can be updated. The constructor of `WriteBatch` now takes a fourth argument `default_cf_ts_sz` which is the timestamp size of the default column family. This will be used to allocate space when calling APIs that do not specify a column family handle. Also, updated `DB::Get()`, `DB::MultiGet()`, `DB::NewIterator()`, `DB::NewIterators()` methods, replacing some assertions about timestamp to returning Status code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/8946 Test Plan: make check ./db_bench -benchmarks=fillseq,fillrandom,readrandom,readseq,deleterandom -user_timestamp_size=8 ./db_stress --user_timestamp_size=8 -nooverwritepercent=0 -test_secondary=0 -secondary_catch_up_one_in=0 -continuous_verification_interval=0 Make sure there is no perf regression by running the following ``` ./db_bench_opt -db=/dev/shm/rocksdb -use_existing_db=0 -level0_stop_writes_trigger=256 -level0_slowdown_writes_trigger=256 -level0_file_num_compaction_trigger=256 -disable_wal=1 -duration=10 -benchmarks=fillrandom ``` Before this PR ``` DB path: [/dev/shm/rocksdb] fillrandom : 1.831 micros/op 546235 ops/sec; 60.4 MB/s ``` After this PR ``` DB path: [/dev/shm/rocksdb] fillrandom : 1.820 micros/op 549404 ops/sec; 60.8 MB/s ``` Reviewed By: ltamasi Differential Revision: D33721359 Pulled By: riversand963 fbshipit-source-id: c131561534272c120ffb80711d42748d21badf09

-

由 Hui Xiao 提交于

Summary: Note: rebase on and merge after https://github.com/facebook/rocksdb/pull/9349, https://github.com/facebook/rocksdb/pull/9345, (optional) https://github.com/facebook/rocksdb/pull/9393 **Context:** (Quoted from pdillinger) Layers of information during new Bloom/Ribbon Filter construction in building block-based tables includes the following: a) set of keys to add to filter b) set of hashes to add to filter (64-bit hash applied to each key) c) set of Bloom indices to set in filter, with duplicates d) set of Bloom indices to set in filter, deduplicated e) final filter and its checksum This PR aims to detect corruption (e.g, unexpected hardware/software corruption on data structures residing in the memory for a long time) from b) to e) and leave a) as future works for application level. - b)'s corruption is detected by verifying the xor checksum of the hash entries calculated as the entries accumulate before being added to the filter. (i.e, `XXPH3FilterBitsBuilder::MaybeVerifyHashEntriesChecksum()`) - c) - e)'s corruption is detected by verifying the hash entries indeed exists in the constructed filter by re-querying these hash entries in the filter (i.e, `FilterBitsBuilder::MaybePostVerify()`) after computing the block checksum (except for PartitionFilter, which is done right after each `FilterBitsBuilder::Finish` for impl simplicity - see code comment for more). For this stage of detection, we assume hash entries are not corrupted after checking on b) since the time interval from b) to c) is relatively short IMO. Option to enable this feature of detection is `BlockBasedTableOptions::detect_filter_construct_corruption` which is false by default. **Summary:** - Implemented new functions `XXPH3FilterBitsBuilder::MaybeVerifyHashEntriesChecksum()` and `FilterBitsBuilder::MaybePostVerify()` - Ensured hash entries, final filter and banding and their [cache reservation ](https://github.com/facebook/rocksdb/issues/9073) are released properly despite corruption - See [Filter.construction.artifacts.release.point.pdf ](https://github.com/facebook/rocksdb/files/7923487/Design.Filter.construction.artifacts.release.point.pdf) for high-level design - Bundled and refactored hash entries's related artifact in XXPH3FilterBitsBuilder into `HashEntriesInfo` for better control on lifetime of these artifact during `SwapEntires`, `ResetEntries` - Ensured RocksDB block-based table builder calls `FilterBitsBuilder::MaybePostVerify()` after constructing the filter by `FilterBitsBuilder::Finish()` - When encountering such filter construction corruption, stop writing the filter content to files and mark such a block-based table building non-ok by storing the corruption status in the builder. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9342 Test Plan: - Added new unit test `DBFilterConstructionCorruptionTestWithParam.DetectCorruption` - Included this new feature in `DBFilterConstructionReserveMemoryTestWithParam.ReserveMemory` as this feature heavily touch ReserveMemory's impl - For fallback case, I run `./filter_bench -impl=3 -detect_filter_construct_corruption=true -reserve_table_builder_memory=true -strict_capacity_limit=true -quick -runs 10 | grep 'Build avg'` to make sure nothing break. - Added to `filter_bench`: increased filter construction time by **30%**, mostly by `MaybePostVerify()` - FastLocalBloom - Before change: `./filter_bench -impl=2 -quick -runs 10 | grep 'Build avg'`: **28.86643s** - After change: - `./filter_bench -impl=2 -detect_filter_construct_corruption=false -quick -runs 10 | grep 'Build avg'` (expect a tiny increase due to MaybePostVerify is always called regardless): **27.6644s (-4% perf improvement might be due to now we don't drop bloom hash entry in `AddAllEntries` along iteration but in bulk later, same with the bypassing-MaybePostVerify case below)** - `./filter_bench -impl=2 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'` (expect acceptable increase): **34.41159s (+20%)** - `./filter_bench -impl=2 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'` (by-passing MaybePostVerify, expect minor increase): **27.13431s (-6%)** - Standard128Ribbon - Before change: `./filter_bench -impl=3 -quick -runs 10 | grep 'Build avg'`: **122.5384s** - After change: - `./filter_bench -impl=3 -detect_filter_construct_corruption=false -quick -runs 10 | grep 'Build avg'` (expect a tiny increase due to MaybePostVerify is always called regardless - verified by removing MaybePostVerify under this case and found only +-1ns difference): **124.3588s (+2%)** - `./filter_bench -impl=3 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'`(expect acceptable increase): **159.4946s (+30%)** - `./filter_bench -impl=3 -detect_filter_construct_corruption=true -quick -runs 10 | grep 'Build avg'`(by-passing MaybePostVerify, expect minor increase) : **125.258s (+2%)** - Added to `db_stress`: `make crash_test`, `./db_stress --detect_filter_construct_corruption=true` - Manually smoke-tested: manually corrupted the filter construction in some db level tests with basic PUT and background flush. As expected, the error did get returned to users in subsequent PUT and Flush status. Reviewed By: pdillinger Differential Revision: D33746928 Pulled By: hx235 fbshipit-source-id: cb056426be5a7debc1cd16f23bc250f36a08ca57

-

由 Levi Tamasi 提交于

Summary: Fixes a typo introduced in https://github.com/facebook/rocksdb/pull/9466. Fixes https://github.com/facebook/rocksdb/issues/9482 Pull Request resolved: https://github.com/facebook/rocksdb/pull/9485 Test Plan: ``` COMPILE_WITH_TSAN=1 make db_stress -j24 ./db_stress --ops_per_thread=1000 --reopen=5 ``` Reviewed By: ajkr Differential Revision: D33928601 Pulled By: ltamasi fbshipit-source-id: 3e01a0ca5fffb56c268c811cbe045413b225059a

-

由 Andrew Kryczka 提交于

Summary: As reported in https://github.com/facebook/rocksdb/pull/2899#issuecomment-1001467021, `prev_num_drains_` is confusing as we never set it to nonzero. So this PR removes it. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9484 Test Plan: `make check -j24` Reviewed By: hx235 Differential Revision: D33923203 Pulled By: ajkr fbshipit-source-id: 6277d50a198b90646583ee8094c2e6a1bbdadc7b

-

由 Andrew Kryczka 提交于

Summary: It is too slow that our `db_crashtest.py` often kills `db_stress` before the setup phase completes. Profiled it and found a few ways to optimize. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9475 Test Plan: Measured setup phase time reduced 22% (36 -> 28 seconds) for first run, and 36% (38 -> 24 seconds) for non-first run on empty-ish DB. - first run benchmark command: `rm -rf /dev/shm/dbstress*/ && mkdir -p /dev/shm/dbstress_expected/ && ./db_stress -max_key=100000000 -destroy_db_initially=1 -expected_values_dir=/dev/shm/dbstress_expected/ -db=/dev/shm/dbstress/ --clear_column_family_one_in=0 --reopen=0 --nooverwritepercent=1` output before this PR: ``` 2022/01/31-11:14:05 Initializing db_stress ... 2022/01/31-11:14:41 Starting database operations ``` output after this PR: ``` ... 2022/01/31-11:12:23 Initializing db_stress ... 2022/01/31-11:12:51 Starting database operations ``` - non-first run benchmark command: `./db_stress -max_key=100000000 -destroy_db_initially=0 -expected_values_dir=/dev/shm/dbstress_expected/ -db=/dev/shm/dbstress/ --clear_column_family_one_in=0 --reopen=0 --nooverwritepercent=1` output before this PR: ``` 2022/01/31-11:20:45 Initializing db_stress ... 2022/01/31-11:21:23 Starting database operations ``` output after this PR: ``` 2022/01/31-11:22:02 Initializing db_stress ... 2022/01/31-11:22:26 Starting database operations ``` - ran minified crash test a while: `DEBUG_LEVEL=0 TEST_TMPDIR=/dev/shm python3 tools/db_crashtest.py blackbox --simple --interval=10 --max_key=1000000 --write_buffer_size=1048576 --target_file_size_base=1048576 --max_bytes_for_level_base=4194304 --value_size_mult=33` Reviewed By: anand1976 Differential Revision: D33897793 Pulled By: ajkr fbshipit-source-id: 0d7b2c93e1e2a9f8a878e87632c2455406313087

-

由 Peter Dillinger 提交于

Summary: Crash test recently started showing failures as in https://github.com/facebook/rocksdb/issues/9118 but for files created by compaction. This change applies a similar fix. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9480 Test Plan: Updated / extended unit test. (Some re-arranging to do the simpler compaction testing before this special case.) Reviewed By: ltamasi Differential Revision: D33909835 Pulled By: pdillinger fbshipit-source-id: 58e4b44e4ecc2d21e4df2c2d8440ec0633aa1f6c

-

由 Yanqin Jin 提交于

Summary: This PR does the following: - Fix compilation and linking errors when building fuzzer - Add the above to CircleCI - Update documentation Pull Request resolved: https://github.com/facebook/rocksdb/pull/9420 Test Plan: CI Reviewed By: jay-zhuang Differential Revision: D33849452 Pulled By: riversand963 fbshipit-source-id: 0794e5d04a3f53bfd2216fe2b3cd827ca2083ac3

-

由 Jay Zhuang 提交于

Summary: * Add more micro-benchmark tests * Expose an API in DBImpl for waiting for compactions (still not visible to the user) * Add argument name for ribbon_bench * remove benchmark run from CI, as it runs too long. Pull Request resolved: https://github.com/facebook/rocksdb/pull/9436 Test Plan: CI Reviewed By: riversand963 Differential Revision: D33777836 Pulled By: jay-zhuang fbshipit-source-id: c05de3bc082cc05b5d019f00b324e774bf4bbd96

-