- 24 10月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: Add a threshold timestamp, full_history_ts_low_ of type `std::string*` to `CompactionIterator`, so that RocksDB can also perform garbage collection during compaction. * If full_history_ts_low_ is nullptr, then compaction iterator does not perform GC, preserving all timestamp history for all keys. Compaction iterator will treat user key with different timestamps as different user keys. * If full_history_ts_low_ is not nullptr, then compaction iterator performs GC. GC will look at keys older than `*full_history_ts_low_` and determine their eligibility based on factors including snapshots. Current rules of GC: * If an internal key is in the same snapshot as a previous counterpart with the same user key, and this key is eligible for GC, and the key is not single-delete or merge operand, then this key can be dropped. Note that the previous internal key cannot be a merge operand either. * If a tombstone is the most recent one in the earliest snapshot and it is eligible for GC, and keyNotExistsBeyondLevel() is true, then this tombstone can be dropped. * If a tombstone is the most recent one in a snapshot and it is eligible for GC, and the compaction is at bottommost level, then all other older internal keys of the same user key must also be eligible for GC, thus can be dropped * Single-delete, delete-range and merge are not currently supported. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7556 Test Plan: make check Reviewed By: ltamasi Differential Revision: D24507728 Pulled By: riversand963 fbshipit-source-id: 3c09c7301f41eed76dfcf4d1527e68cf6e0a8bb3

-

- 01 10月, 2020 1 次提交

-

-

由 Ramkumar Vadivelu 提交于

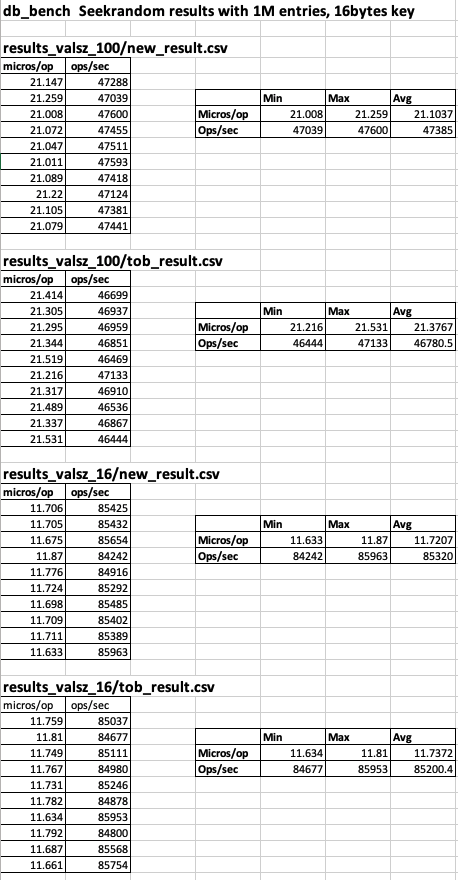

Summary: Fixes https://github.com/facebook/rocksdb/issues/7430 Change ParseInternalKey() to return Status instead of bool. db_bench (seekrandom) based before/after results with value size of 100 bytes and 16 bytes can be found at (tests ran on an udb server): https://www.dropbox.com/s/47bwamdy5ozngph/PIK_ret_Status_results.xlsx?dl=0  Pull Request resolved: https://github.com/facebook/rocksdb/pull/7457 Reviewed By: ajkr Differential Revision: D24002433 Pulled By: ramvadiv fbshipit-source-id: ac253ecf577a29044c47c3fe254a01e71404c44c

-

- 30 9月, 2020 1 次提交

-

-

由 Akanksha Mahajan 提交于

Summary: Add a new Option "allow_data_in_errors". When it's set by users, it allows them to opt-in to get error messages containing corrupted keys/values. Corrupt keys, values will be logged in the messages, logs, status etc. that will help users with the useful information regarding affected data. By default value is set false to prevent users data to be exposed in the messages. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7420 Test Plan: 1. make check -j64 2. Add a new test case Reviewed By: ajkr Differential Revision: D23835028 Pulled By: akankshamahajan15 fbshipit-source-id: 8d2eba8fb898e79fcf1fccc07295065a75eb59b1

-

- 15 9月, 2020 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: The patch adds support for writing blob files during flush by integrating `BlobFileBuilder` with the flush logic, most importantly, `BuildTable` and `CompactionIterator`. If `enable_blob_files` is set, large values are extracted to blob files and replaced with references. The resulting blob files are then logged to the MANIFEST as part of the flush job's `VersionEdit` and added to the `Version`, similarly to table files. Errors related to writing blob files fail the flush, and any blob files written by such jobs are immediately deleted (again, similarly to how SST files are handled). In addition, the patch extends the logging and statistics around flushes to account for the presence of blob files (e.g. `InternalStats::CompactionStats::bytes_written`, which is used for calculating write amplification, now considers the blob files as well). Pull Request resolved: https://github.com/facebook/rocksdb/pull/7345 Test Plan: Tested using `make check` and `db_bench`. Reviewed By: riversand963 Differential Revision: D23506369 Pulled By: ltamasi fbshipit-source-id: 646885f22dfbe063f650d38a1fedc132f499a159

-

- 12 9月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: During bottommost compaction, RocksDB cannot simply drop a tombstone if this tombstone is not in the earliest snapshot. The current behavior is: RocksDB skips other internal keys (of the same user key) in the same snapshot range. In the meantime, RocksDB should check for the `shutting_down` flag. Otherwise, it is possible for a bottommost compaction that has already started running to take a long time to finish, even if the application has tried to cancel all background jobs. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7356 Test Plan: make check Reviewed By: ltamasi Differential Revision: D23663241 Pulled By: riversand963 fbshipit-source-id: 25f8e9b51bc3bfa3353cdf87557800f9d90ee0b5

-

- 04 9月, 2020 1 次提交

-

-

由 Hiep 提交于

Summary: - Closes https://github.com/facebook/rocksdb/issues/6490 - Currently MERGEs are converted to PUTs at bottom or compaction has reached the beginning of the key, this can wrongly cover a PUT future base case. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7166 Test Plan: - Automated: `make all check` - Manual: With `allow_ingest_behind = true`, add Merge operations to a key then run compaction. Then run ingesting external files to make sure the base case is probably compacted with existing Merges. Reviewed By: cheng-chang Differential Revision: D23325425 Pulled By: ajkr fbshipit-source-id: 3eb415eb7b381b5453e45245393566153b1abb68

-

- 15 8月, 2020 1 次提交

-

-

由 Andrew Kryczka 提交于

Summary: Manual compaction with `CompactRangeOptions::change_levels` set could refit to a level targeted by another manual compaction. If force_consistency_checks were disabled, it could be possible for overlapping files to be written at that target level. This PR prevents the possibility by calling `DisableManualCompaction()` prior to `ReFitLevel()`. It also improves the manual compaction disabling mechanism to wait for pending manual compactions to complete before returning, and support disabling from multiple threads. Fixes https://github.com/facebook/rocksdb/issues/6432. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7250 Test Plan: crash test command that repro'd the bug reliably: ``` $ TEST_TMPDIR=/dev/shm python tools/db_crashtest.py blackbox --simple -target_file_size_base=524288 -write_buffer_size=1048576 -clear_column_family_one_in=0 -reopen=0 -max_key=10000000 -column_families=1 -max_background_compactions=8 -compact_range_one_in=100000 -compression_type=none -compaction_style=1 -num_levels=5 -universal_min_merge_width=4 -universal_max_merge_width=8 -level0_file_num_compaction_trigger=12 -rate_limiter_bytes_per_sec=1048576000 -universal_max_size_amplification_percent=100 --duration=3600 --interval=60 --use_direct_io_for_flush_and_compaction=0 --use_direct_reads=0 --enable_compaction_filter=0 ``` Reviewed By: ltamasi Differential Revision: D23090800 Pulled By: ajkr fbshipit-source-id: afcbcd51b42ce76789fdb907d8b9ada790709c13

-

- 15 7月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: Currently, RocksDB lets compaction to go through even in case of corrupted keys, the number of which is reported in CompactionJobStats. However, RocksDB does not check this value. We should let compaction run in a stricter mode. Temporarily disable two tests that allow corrupted keys in compaction. With this PR, the two tests will assert(false) and terminate. Still need to investigate what is the recommended google-test way of doing it. Death test (EXPECT_DEATH) in gtest has warnings now. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7124 Test Plan: make check Reviewed By: ajkr Differential Revision: D22530722 Pulled By: riversand963 fbshipit-source-id: 6a5a6a992028c6d4f92cb74693c92db462ae4ad6

-

- 30 6月, 2020 1 次提交

-

-

由 Burton Li 提交于

Summary: Added compaction filter support for BlobDB non-TTL values. Same as vanilla RocksDB, user compaction filter applies to all k/v pairs of the compaction for non-TTL values. It honors `min_blob_size`, which potentially results value transitions between inlined data and stored-in-blob data when size of value is changed. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6850 Reviewed By: siying Differential Revision: D22263487 Pulled By: ltamasi fbshipit-source-id: 8fc03f8cde2a5c831e63b436b3dbf1b7f90939e8

-

- 21 2月, 2020 1 次提交

-

-

由 sdong 提交于

Summary: When dynamically linking two binaries together, different builds of RocksDB from two sources might cause errors. To provide a tool for user to solve the problem, the RocksDB namespace is changed to a flag which can be overridden in build time. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6433 Test Plan: Build release, all and jtest. Try to build with ROCKSDB_NAMESPACE with another flag. Differential Revision: D19977691 fbshipit-source-id: aa7f2d0972e1c31d75339ac48478f34f6cfcfb3e

-

- 18 12月, 2019 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: With https://github.com/facebook/rocksdb/pull/6121, errors returned by `PrepareBlobValue` result in `CompactionIterator::status_` being set to `Corruption` or `IOError` as appropriate, however, `valid_` is not set to `false`. The error is eventually propagated in `CompactionJob::ProcessKeyValueCompaction` but only after the main loop completes. Setting `valid_` to `false` upon errors enables us to terminate the loop early and fail the compaction sooner. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6170 Test Plan: Ran `make check` and used `db_bench` in BlobDB mode. fbshipit-source-id: a2ca88a3ca71115e2605bd34a4c795d8a28bef27

-

- 14 12月, 2019 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: The patch adds logic that relocates live blobs from the oldest N non-TTL blob files as they are encountered during compaction (assuming the BlobDB configuration option `enable_garbage_collection` is `true`), where N is defined as the number of immutable non-TTL blob files multiplied by the value of a new BlobDB configuration option called `garbage_collection_cutoff`. (The default value of this parameter is 0.25, that is, by default the valid blobs residing in the oldest 25% of immutable non-TTL blob files are relocated.) Pull Request resolved: https://github.com/facebook/rocksdb/pull/6121 Test Plan: Added unit test and tested using the BlobDB mode of `db_bench`. Differential Revision: D18785357 Pulled By: ltamasi fbshipit-source-id: 8c21c512a18fba777ec28765c88682bb1a5e694e

-

- 31 10月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: Compaction iterator has many assert statements that are active only during test runs. Some rare bugs would show up only at runtime could violate the assert condition but go unnoticed since assert statements are not compiled in release mode. Turning the assert statements to runtime check sone pors and cons: Pros: - A bug that would result into incorrect data would be detected early before the incorrect data is written to the disk. Cons: - Runtime overhead: which should be negligible since compaction cpu is the minority in the overall cpu usage - The assert statements might already being violated at runtime, and turning them to runtime failure might result into reliability issues. The patch takes a conservative step in this direction by logging the assert violations at runtime. If we see any violation reported in logs, we investigate. Otherwise, we can go ahead turning them to runtime error. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5935 Differential Revision: D18229697 Pulled By: maysamyabandeh fbshipit-source-id: f1890eca80ccd7cca29737f1825badb9aa8038a8

-

- 20 9月, 2019 1 次提交

-

-

由 sdong 提交于

Summary: Some recent commits might not have passed through the formatter. I formatted recent 45 commits. The script hangs for more commits so I stopped there. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5827 Test Plan: Run all existing tests. Differential Revision: D17483727 fbshipit-source-id: af23113ee63015d8a43d89a3bc2c1056189afe8f

-

- 19 9月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: The snap_refresh_nanos option didn't bring much benefit. Remove the feature to simplify the code. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5826 Differential Revision: D17467147 Pulled By: maysamyabandeh fbshipit-source-id: 4f950b046990d0d1292d7fc04c2ccafaf751c7f0

-

- 17 9月, 2019 1 次提交

-

-

由 andrew 提交于

Summary: Manual compaction may bring in very high load because sometime the amount of data involved in a compaction could be large, which may affect online service. So it would be good if the running compaction making the server busy can be stopped immediately. In this implementation, stopping manual compaction condition is only checked in slow process. We let deletion compaction and trivial move go through. Pull Request resolved: https://github.com/facebook/rocksdb/pull/3971 Test Plan: add tests at more spots. Differential Revision: D17369043 fbshipit-source-id: 575a624fb992ce0bb07d9443eb209e547740043c

-

- 01 6月, 2019 1 次提交

-

-

由 Vijay Nadimpalli 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/5390 Differential Revision: D15579388 Pulled By: vjnadimpalli fbshipit-source-id: 5bfc95e31554b8ff05b97b76d6534113f527f366

-

- 31 5月, 2019 1 次提交

-

-

由 Siying Dong 提交于

Summary: There are too many types of files under util/. Some test related files don't belong to there or just are just loosely related. Mo ve them to a new directory test_util/, so that util/ is cleaner. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5377 Differential Revision: D15551366 Pulled By: siying fbshipit-source-id: 0f5c8653832354ef8caa31749c0143815d719e2c

-

- 16 5月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: The recent improvement in https://github.com/facebook/rocksdb/pull/3661 could cause a deadlock: When writing recoverable state, we also commit its sequence number to commit table, which could result into evicting existing commit entry, which could result into advancing max_evicted_seq_, which would need to get snapshots from database, which requires obtaining db mutex. The patch releases db_mutex before calling the callback in WriteRecoverableState to avoid the potential deadlock. It also improves the stress tests to let the issue be manifested in the tests. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5306 Differential Revision: D15341458 Pulled By: maysamyabandeh fbshipit-source-id: 05dcbed7e21b789fd1e5fd5ee8eea08077162323

-

- 04 5月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: Part of compaction cpu goes to processing snapshot list, the larger the list the bigger the overhead. Although the lifetime of most of the snapshots is much shorter than the lifetime of compactions, the compaction conservatively operates on the list of snapshots that it initially obtained. This patch allows the snapshot list to be updated via a callback if the compaction is taking long. This should let the compaction to continue more efficiently with much smaller snapshot list. For simplicity, to avoid the feature is disabled in two cases: i) When more than one sub-compaction are sharing the same snapshot list, ii) when Range Delete is used in which the range delete aggregator has its own copy of snapshot list. This fixes the reverted https://github.com/facebook/rocksdb/pull/5099 issue with range deletes. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5278 Differential Revision: D15203291 Pulled By: maysamyabandeh fbshipit-source-id: fa645611e606aa222c7ce53176dc5bb6f259c258

-

- 02 5月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: Our daily stress tests are failing after this feature. Reverting temporarily until we figure the reason for test failures. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5269 Differential Revision: D15151285 Pulled By: maysamyabandeh fbshipit-source-id: e4002b99690a97df30d4b4b58bf0f61e9591bc6e

-

- 27 4月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: The newly added test CompactionJobTest.SnapshotRefresh sets the snapshot refresh period to 0 to stress the feature. This results into large number of refresh events, which in turn results into an UBSAN failure when a bitwise shift operand goes beyond the uint64_t size. The patch fixes that by simplifying the shift logic to be done only by 2 bits after each refresh. Furthermore it verifies that the shift operation does not result in decreasing the refresh period. Testing: COMPILE_WITH_UBSAN=1 make -j32 compaction_job_test ./compaction_job_test --gtest_filter=CompactionJobTest.SnapshotRefresh Pull Request resolved: https://github.com/facebook/rocksdb/pull/5257 Differential Revision: D15106463 Pulled By: maysamyabandeh fbshipit-source-id: f2718898ea7ba4fa9f7e87b70cf98fe647c0de80

-

- 26 4月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: Part of compaction cpu goes to processing snapshot list, the larger the list the bigger the overhead. Although the lifetime of most of the snapshots is much shorter than the lifetime of compactions, the compaction conservatively operates on the list of snapshots that it initially obtained. This patch allows the snapshot list to be updated via a callback if the compaction is taking long. This should let the compaction to continue more efficiently with much smaller snapshot list. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5099 Differential Revision: D15086710 Pulled By: maysamyabandeh fbshipit-source-id: 7649f56c3b6b2fb334962048150142a3bf9c1a12

-

- 12 2月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: If IsInSnapshot(seq2, snapshot) determines that the snapshot is released, the future queries IsInSnapshot(seq1, snapshot) could still return a definitive answer of true if for example seq1 is too old that is determined visible in all snapshots. This violates a recently added assert statement to compaction iterator. The patch relaxes the assert. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4969 Differential Revision: D14030998 Pulled By: maysamyabandeh fbshipit-source-id: 6db53db0e37d0a20e8997ef2c1004b8627614ab9

-

- 08 2月, 2019 1 次提交

-

-

由 Siying Dong 提交于

Summary: We found that the behavior of CompactionFilter::IgnoreSnapshots() = false isn't what we have expected. We thought that snapshot will always be preserved. However, we just realized that, if no snapshot is created while compaction starts, and a snapshot is created after that, the data seen from the snapshot can successfully be dropped by the compaction. This creates a strange behavior to the feature, which is hard to explain. Like what is documented in code comment, this feature is not very useful with snapshot anyway. The decision is to deprecate the feature. We keep the function to avoid to break users code. However, we will fail compactions if false is returned. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4954 Differential Revision: D13981900 Pulled By: siying fbshipit-source-id: 2db8c2c3865acd86a28dca625945d1481b1d1e36

-

- 02 2月, 2019 1 次提交

-

-

由 Ming Zhao 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/4927 Differential Revision: D13889458 Pulled By: mzhaom fbshipit-source-id: d6b66db85901a9eb90748fba6a9dc4e7457b9c5e

-

- 19 1月, 2019 1 次提交

-

-

由 Yi Wu 提交于

Summary: Fix how CompactionIterator::findEarliestVisibleSnapshots handles released snapshot. It fixing the two scenarios: Scenario 1: key1 has two values v1 and v2. There're two snapshots s1 and s2 taken after v1 and v2 are committed. Right after compaction output v2, s1 is released. Now findEarliestVisibleSnapshot may see s1 being released, and return the next snapshot, which is s2. That's larger than v2's earliest visible snapshot, which was s1. The fix: the only place we check against last snapshot and current key snapshot is when we decide whether to compact out a value if it is hidden by a later value. In the check if we see current snapshot is even larger than last snapshot, we know last snapshot is released, and we are safe to compact out current key. Scenario 2: key1 has two values v1 and v2. there are two snapshots s1 and s2 taken after v1 and v2 are committed. During compaction before we process the key, s1 is released. When compaction process v2, snapshot checker may return kSnapshotReleased, and the earliest visible snapshot for v2 become s2. When compaction process v1, snapshot checker may return kIsInSnapshot (for WritePrepared transaction, it could be because v1 is still in commit cache). The result will become inconsistent here. The fix: remember the set of released snapshots ever reported by snapshot checker, and ignore them when finding result for findEarliestVisibleSnapshot. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4890 Differential Revision: D13705538 Pulled By: maysamyabandeh fbshipit-source-id: e577f0d9ee1ff5a6035f26859e56902ecc85a5a4

-

- 17 1月, 2019 1 次提交

-

-

由 Yi Wu 提交于

Summary: Compaction iterator keep a copy of list of live snapshots at the beginning of compaction, and then query snapshot checker to verify if values of a sequence number is visible to these snapshots. However when the snapshot is released in the middle of compaction, the snapshot checker implementation (i.e. WritePreparedSnapshotChecker) may remove info with the snapshot and may report incorrect result, which lead to values being compacted out when it shouldn't. This patch conservatively keep the values if snapshot checker determines that the snapshots is released. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4858 Differential Revision: D13617146 Pulled By: maysamyabandeh fbshipit-source-id: cf18a94f6f61a94bcff73c280f117b224af5fbc3

-

- 16 1月, 2019 1 次提交

-

-

由 Yi Wu 提交于

Summary: With WritePrepared transaction, flush/compaction can contain uncommitted keys, and those keys can get committed during compaction. If a snapshot is taken before the key is committed, it should not see the key. On the other hand, compaction grab the list of snapshots at its beginning, and only consider those snapshots to dedup keys. Consider the case: ``` seq = 1: put "foo" = "bar" seq = 2: transaction T: delete "foo", prepare seq = 3: compaction start seq = 4: take snapshot S seq = 5: transaction T: commit. ... seq = N: compaction iterator reached key "foo". ``` When compaction start, the list of snapshot is empty. Compaction doesn't take snapshot S into account. When it reached "foo", transaction T is committed. Compaction may think the value "foo=bar" is not visible by any snapshot (which is wrong), and compact the value out. The fix is to explicitly take a snapshot before compaction grabbing the list of snapshots. Compaction will then has to keep keys visible to this snapshot. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4883 Differential Revision: D13668775 Pulled By: maysamyabandeh fbshipit-source-id: 1cab9615f94b7d3e8522cc3d44c3a14c7d4720e4

-

- 10 1月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: The vector returned by SnapshotList::GetAll could have duplicate entries if two separate snapshots have the same sequence number. However, when this vector is used in compaction the duplicate entires are of no use and could be safely ignored. Moreover not having duplicate entires simplifies reasoning in the compaction_iterator.cc code. For example when searching for the previous_snap we currently use the snapshot before the current one but the way the code uses that it expects it to be also less than the current snapshot, which would be simpler to read if there is no duplicate entry in the snapshot list. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4860 Differential Revision: D13615502 Pulled By: maysamyabandeh fbshipit-source-id: d45bf01213ead5f39db811f951802da6fcc3332b

-

- 05 1月, 2019 1 次提交

-

-

由 Yi Wu 提交于

Summary: In compaction iterator, if the next value of single delete is a blob value, it should not treated as mismatch. This is only a minor fix and doesn't affect correctness. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4848 Differential Revision: D13585812 Pulled By: yiwu-arbug fbshipit-source-id: 0ff6223fa03a644ac9fd8a2d77f9d6711d0a62b0

-

- 18 12月, 2018 2 次提交

-

-

由 Abhishek Madan 提交于

Summary: Now that v2 is fully functional, the v1 aggregator is removed. The v2 aggregator has been renamed. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4778 Differential Revision: D13495930 Pulled By: abhimadan fbshipit-source-id: 9d69500a60a283e79b6c4fa938fc68a8aa4d40d6

-

由 Abhishek Madan 提交于

Summary: RangeDelAggregatorV2 now supports ShouldDelete calls on snapshot stripes and creation of range tombstone compaction iterators. RangeDelAggregator is no longer used on any non-test code path, and will be removed in a future commit. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4758 Differential Revision: D13439254 Pulled By: abhimadan fbshipit-source-id: fe105bcf8e3d4a2df37a622d5510843cd71b0401

-

- 16 10月, 2018 1 次提交

-

-

由 Andrew Kryczka 提交于

Summary: `CompactionIterator::snapshots_` is ordered by ascending seqnum, just like `DBImpl`'s linked list of snapshots from which it was copied. This PR exploits this ordering to make `findEarliestVisibleSnapshot` do binary search rather than linear scan. This can make flush/compaction significantly faster when many snapshots exist since that function is called on every single key. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4495 Differential Revision: D10386470 Pulled By: ajkr fbshipit-source-id: 29734991631227b6b7b677e156ac567690118a8b

-

- 25 8月, 2018 1 次提交

-

-

由 Shrikanth Shankar 提交于

Summary: This PR fixes issue 3842. We drop deletion markers iff 1. We are the bottom most level AND 2. All other occurrences of the key are in the same snapshot range as the delete I've also enhanced db_stress_test to add an option that does a full compare of the keys. This is done by a single thread (thread # 0). For tests I've run (so far) make check -j64 db_stress db_stress --acquire_snapshot_one_in=1000 --ops_per_thread=100000 /* to verify that new code doesnt break existing tests */ ./db_stress --compare_full_db_state_snapshot=true --acquire_snapshot_one_in=1000 --ops_per_thread=100000 /* to verify new test code */ Pull Request resolved: https://github.com/facebook/rocksdb/pull/4289 Differential Revision: D9491165 Pulled By: shrikanthshankar fbshipit-source-id: ce144834f31736c189aaca81bed356ba990331e2

-

- 24 8月, 2018 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: We want to sample the file I/O issued by RocksDB and report the function calls. This requires us to include the file paths otherwise it's hard to tell what has been going on. Pull Request resolved: https://github.com/facebook/rocksdb/pull/4039 Differential Revision: D8670178 Pulled By: riversand963 fbshipit-source-id: 97ee806d1c583a2983e28e213ee764dc6ac28f7a

-

- 13 7月, 2018 1 次提交

-

-

由 Nikhil Benesch 提交于

Summary: This fixes the same performance issue that #3992 fixes but with much more invasive cleanup. I'm more excited about this PR because it paves the way for fixing another problem we uncovered at Cockroach where range deletion tombstones can cause massive compactions. For example, suppose L4 contains deletions from [a, c) and [x, z) and no other keys, and L5 is entirely empty. L6, however, is full of data. When compacting L4 -> L5, we'll end up with one file that spans, massively, from [a, z). When we go to compact L5 -> L6, we'll have to rewrite all of L6! If, instead of range deletions in L4, we had keys a, b, x, y, and z, RocksDB would have been smart enough to create two files in L5: one for a and b and another for x, y, and z. With the changes in this PR, it will be possible to adjust the compaction logic to split tombstones/start new output files when they would span too many files in the grandparent level. ajkr please take a look when you have a minute! Pull Request resolved: https://github.com/facebook/rocksdb/pull/4014 Differential Revision: D8773253 Pulled By: ajkr fbshipit-source-id: ec62fa85f648fdebe1380b83ed997f9baec35677

-

- 22 6月, 2018 1 次提交

-

-

由 Zhongyi Xie 提交于

Summary: For example calling CompactionFilter is always timed and gives the user no way to disable. This PR will disable the timer if `Statistics::stats_level_` (which is part of DBOptions) is `kExceptDetailedTimers` Closes https://github.com/facebook/rocksdb/pull/4029 Differential Revision: D8583670 Pulled By: miasantreble fbshipit-source-id: 913be9fe433ae0c06e88193b59d41920a532307f

-

- 07 3月, 2018 1 次提交

-

-

由 Yi Wu 提交于

Summary: Improving blob db FIFO eviction with the following changes, * Change blob_dir_size to max_db_size. Take into account SST file size when computing DB size. * FIFO now only take into account live sst files and live blob files. It is normal for disk usage to go over max_db_size because there are obsolete sst files and blob files pending deletion. * FIFO eviction now also evict TTL blob files that's still open. It doesn't evict non-TTL blob files. * If FIFO is triggered, it will pass an expiration and the current sequence number to compaction filter. Compaction filter will then filter inlined keys to evict those with an earlier expiration and smaller sequence number. So call LSM FIFO. * Compaction filter also filter those blob indexes where corresponding blob file is gone. * Add an event listener to listen compaction/flush event and update sst file size. * Implement DB::Close() to make sure base db, as well as event listener and compaction filter, destruct before blob db. * More blob db statistics around FIFO. * Fix some locking issue when accessing a blob file. Closes https://github.com/facebook/rocksdb/pull/3556 Differential Revision: D7139328 Pulled By: yiwu-arbug fbshipit-source-id: ea5edb07b33dfceacb2682f4789bea61de28bbfa

-

- 06 3月, 2018 1 次提交

-

-

由 Andrew Kryczka 提交于

Summary: Submitting on behalf of another employee. Closes https://github.com/facebook/rocksdb/pull/3557 Differential Revision: D7146025 Pulled By: ajkr fbshipit-source-id: 495ca5db5beec3789e671e26f78170957704e77e

-