Merge pull request #47 from jrzaurin/saint

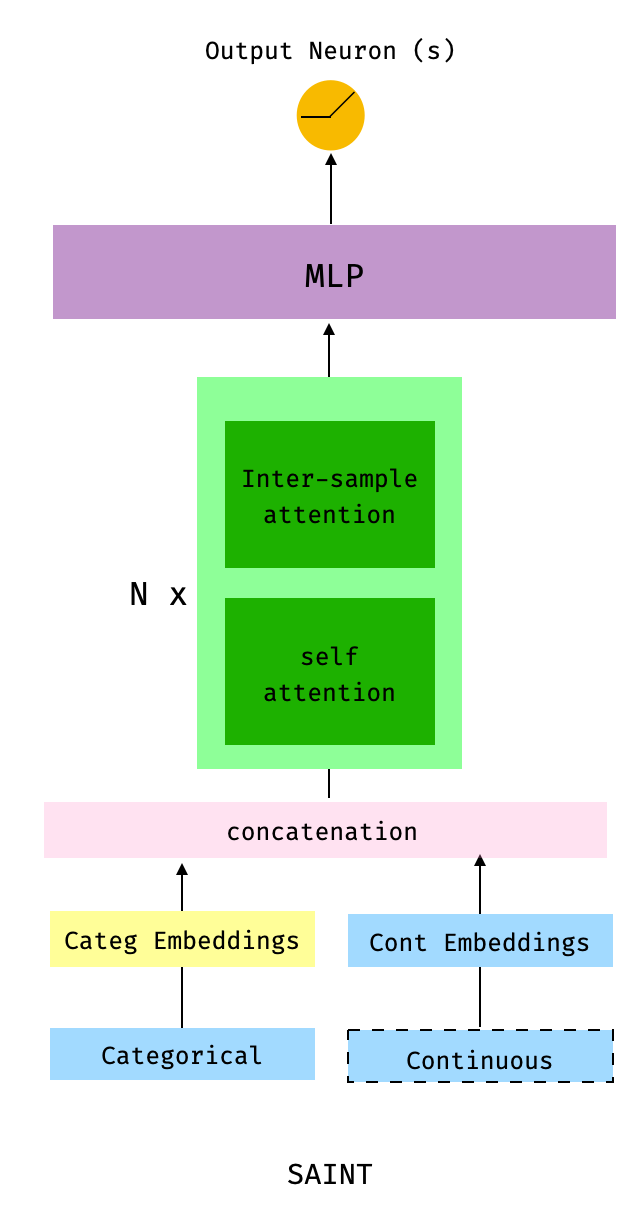

Saint

63.9 KB

docs/figures/saint_arch.png

0 → 100644

70.6 KB

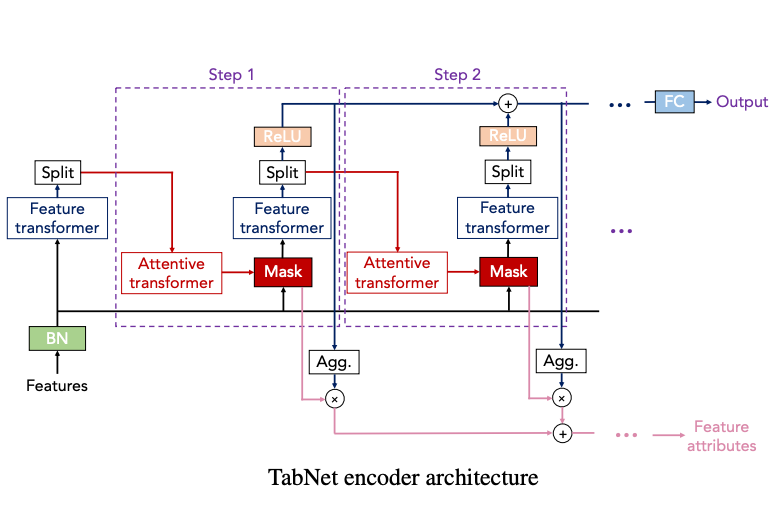

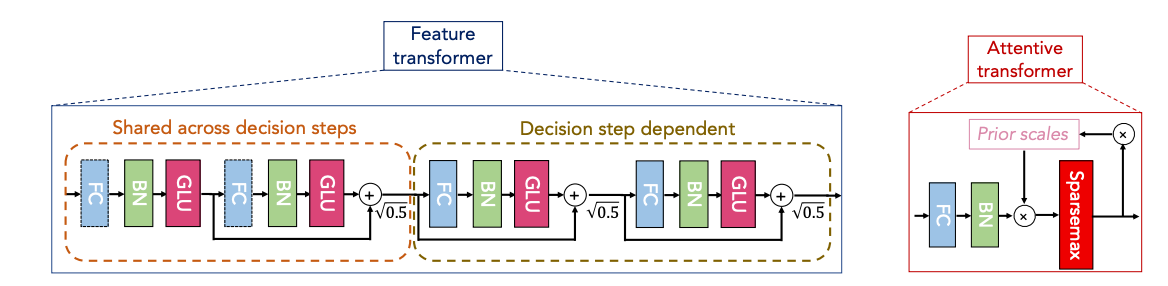

docs/figures/tabnet_arch_1.png

0 → 100644

64.1 KB

docs/figures/tabnet_arch_2.png

0 → 100644

72.7 KB