zero to one

file_pro/.data_read.h.swp

Deleted

100644 → 0

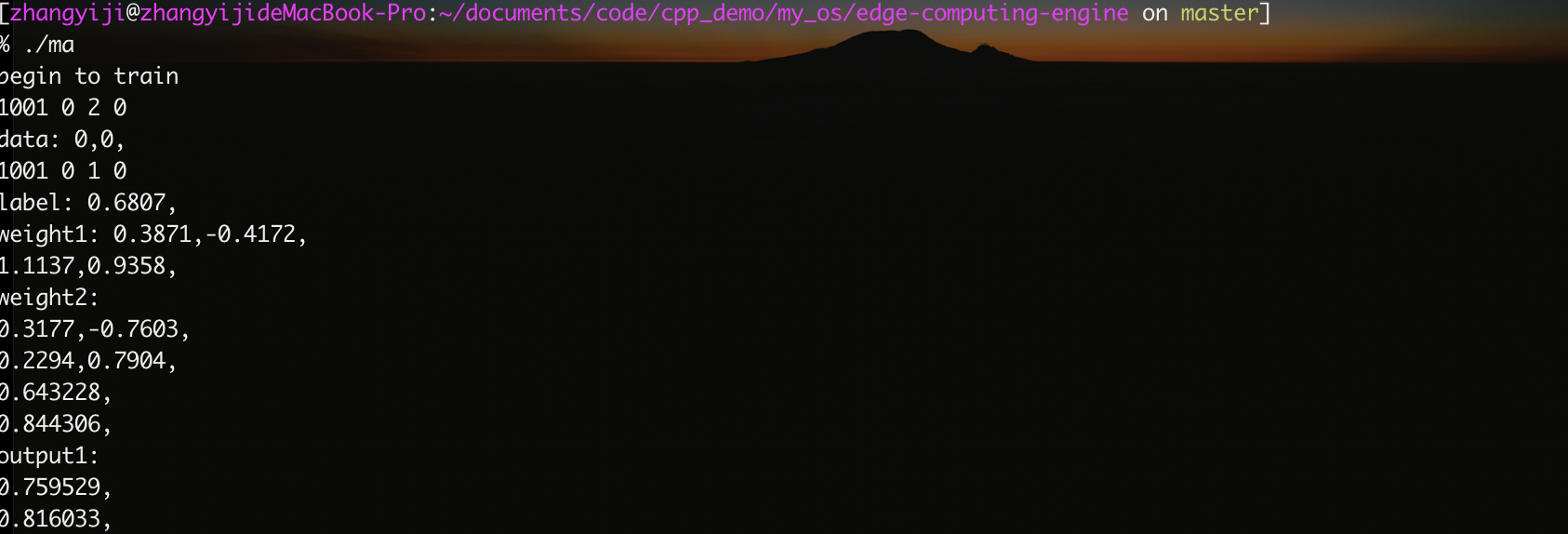

nerual_test.cpp

0 → 100644

picture/nerual_test1.png

0 → 100644

959.6 KB