Merge branch 'develop' into master

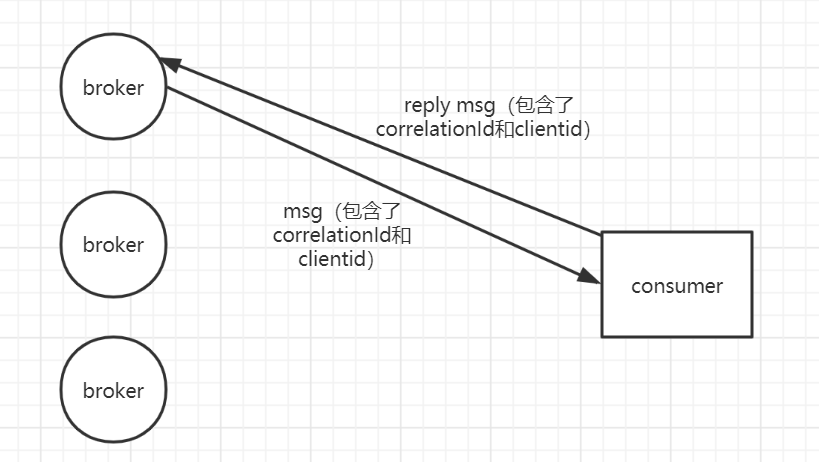

docs/cn/image/consumer_reply.png

0 → 100644

50.8 KB

85.5 KB

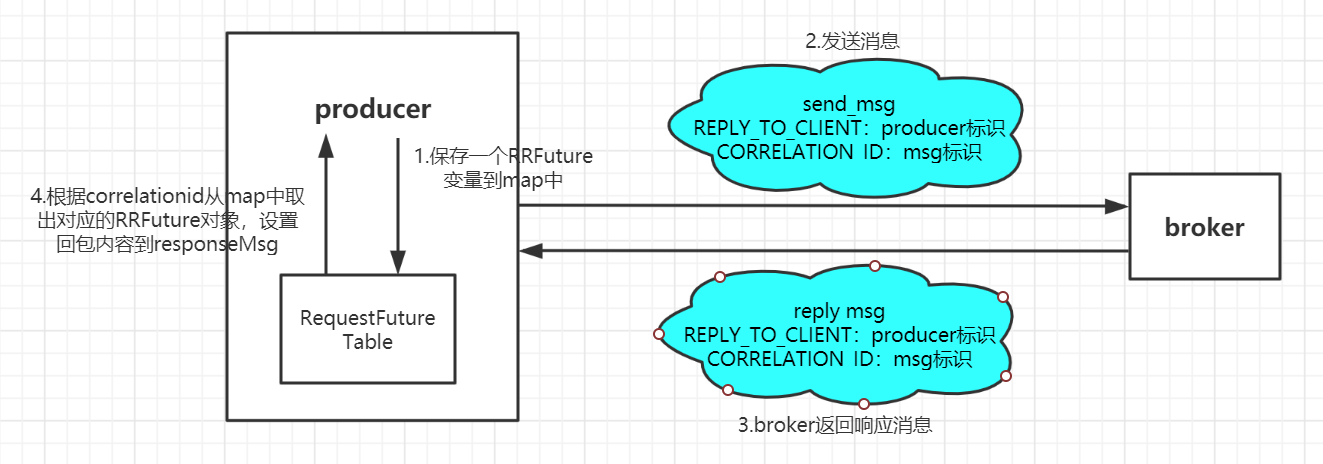

docs/cn/rpc_request.md

0 → 100644

docs/en/README.md

0 → 100644

docs/en/RocketMQ_Example.md

0 → 100644

docs/en/dledger/deploy_guide.md

0 → 100644

docs/en/dledger/quick_start.md

0 → 100644

docs/en/msg_trace/user_guide.md

0 → 100644

docs/en/operation.md

0 → 100644