Commits (5)

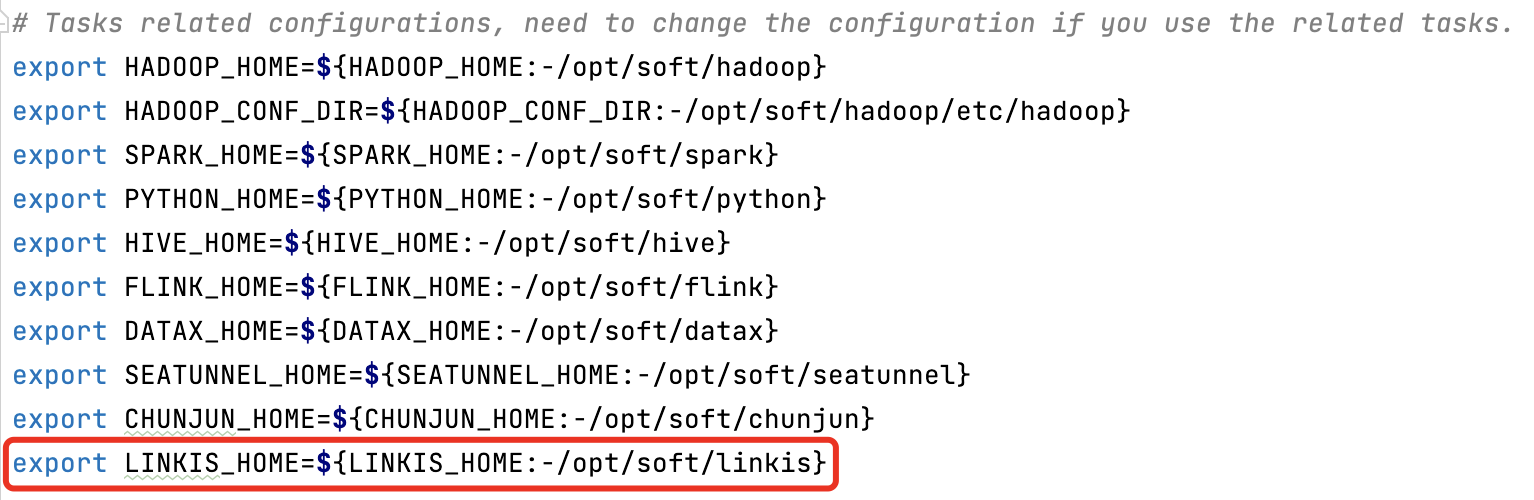

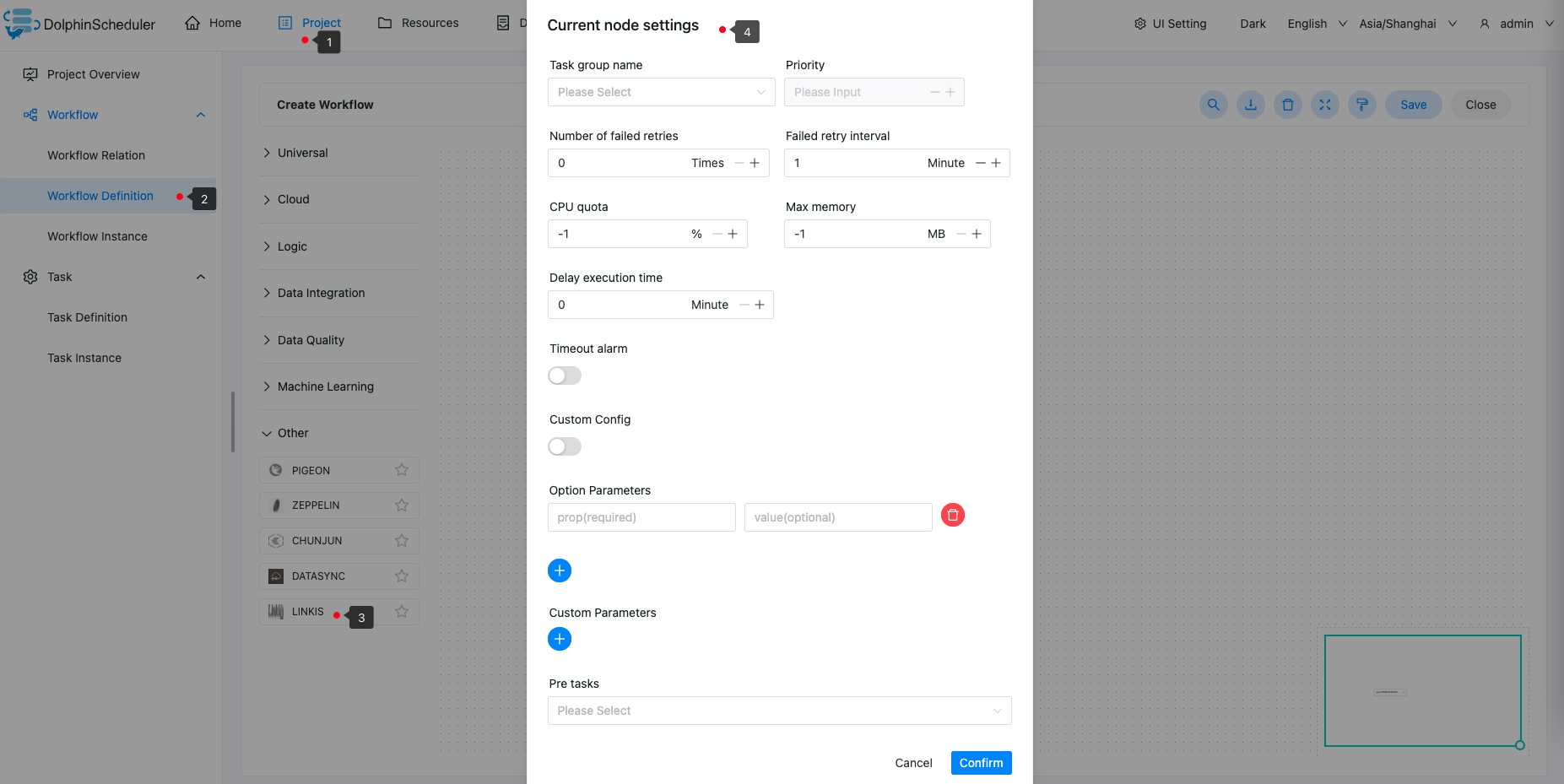

docs/docs/en/guide/task/linkis.md

0 → 100644

docs/docs/zh/guide/task/linkis.md

0 → 100644

127.0 KB

160.1 KB

docs/img/tasks/icons/linkis.png

0 → 100644

3.5 KB

940 bytes

3.5 KB