[feature][task] Add Kubeflow task plugin for MLOps scenario (#12843)

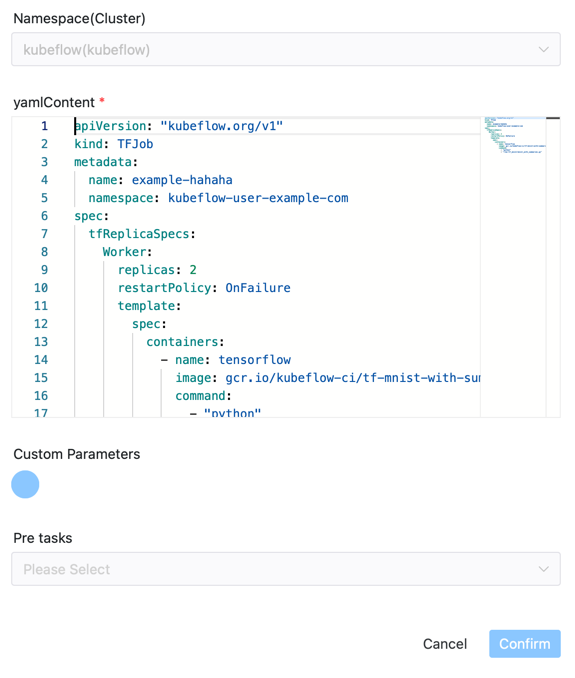

docs/img/tasks/demo/kubeflow.png

0 → 100644

70.4 KB

docs/img/tasks/icons/kubeflow.png

0 → 100644

155.4 KB