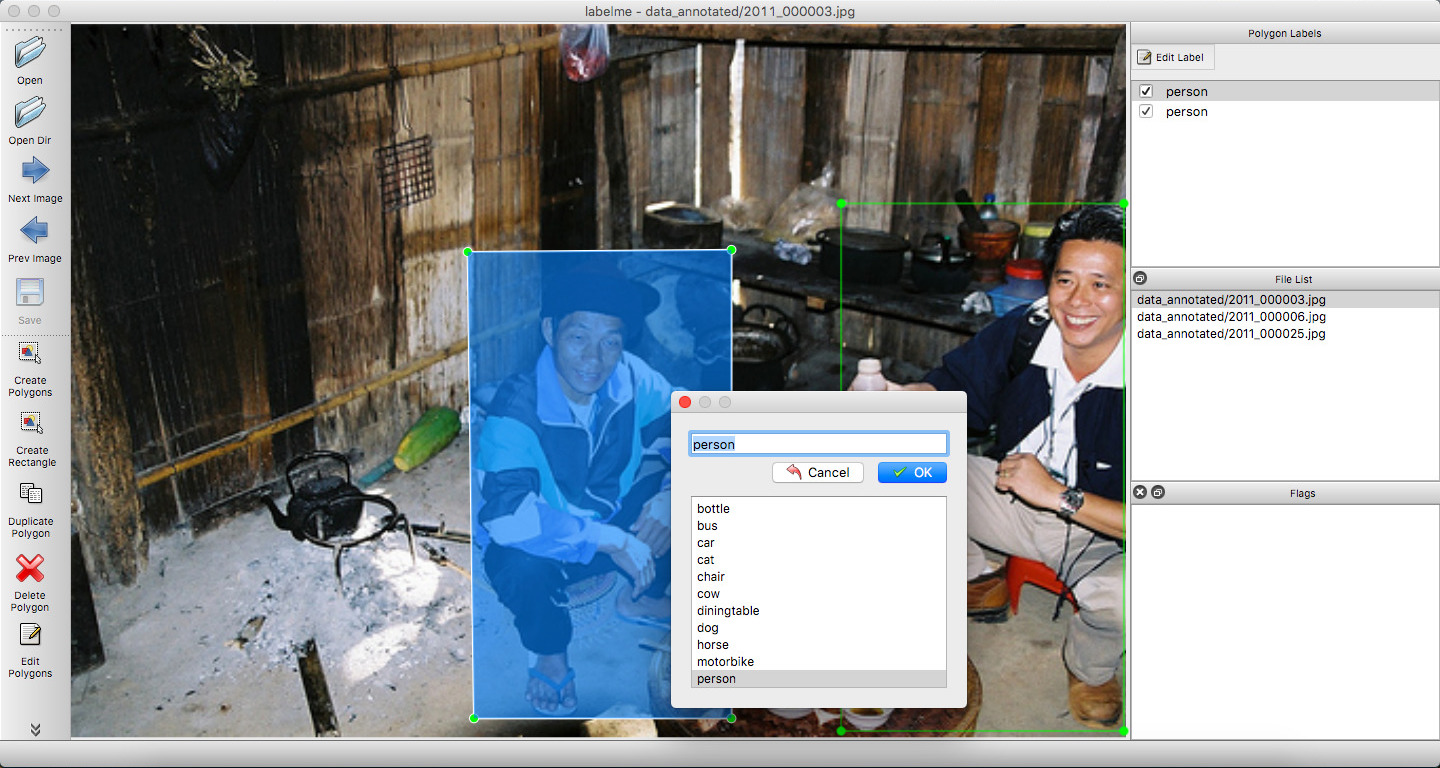

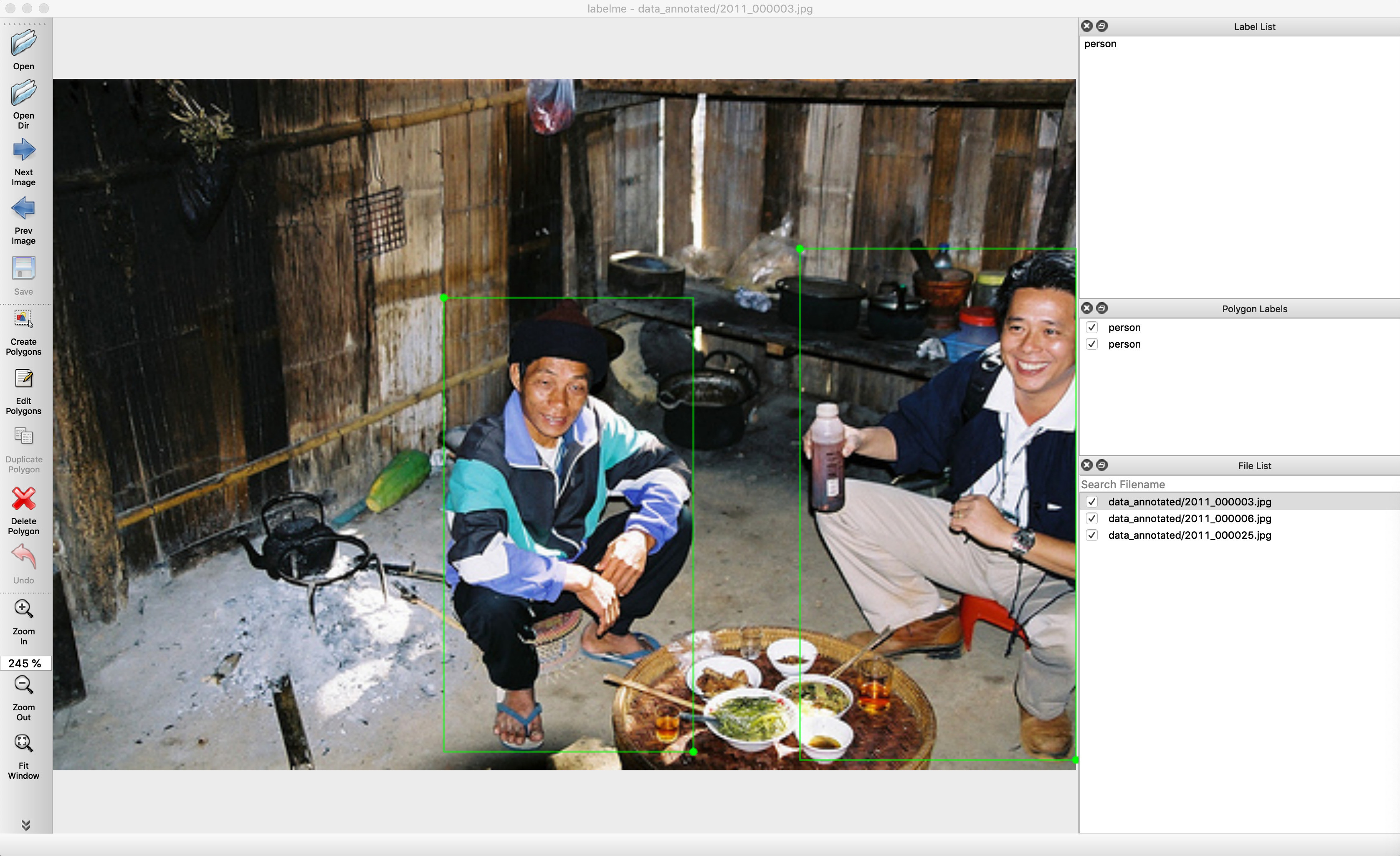

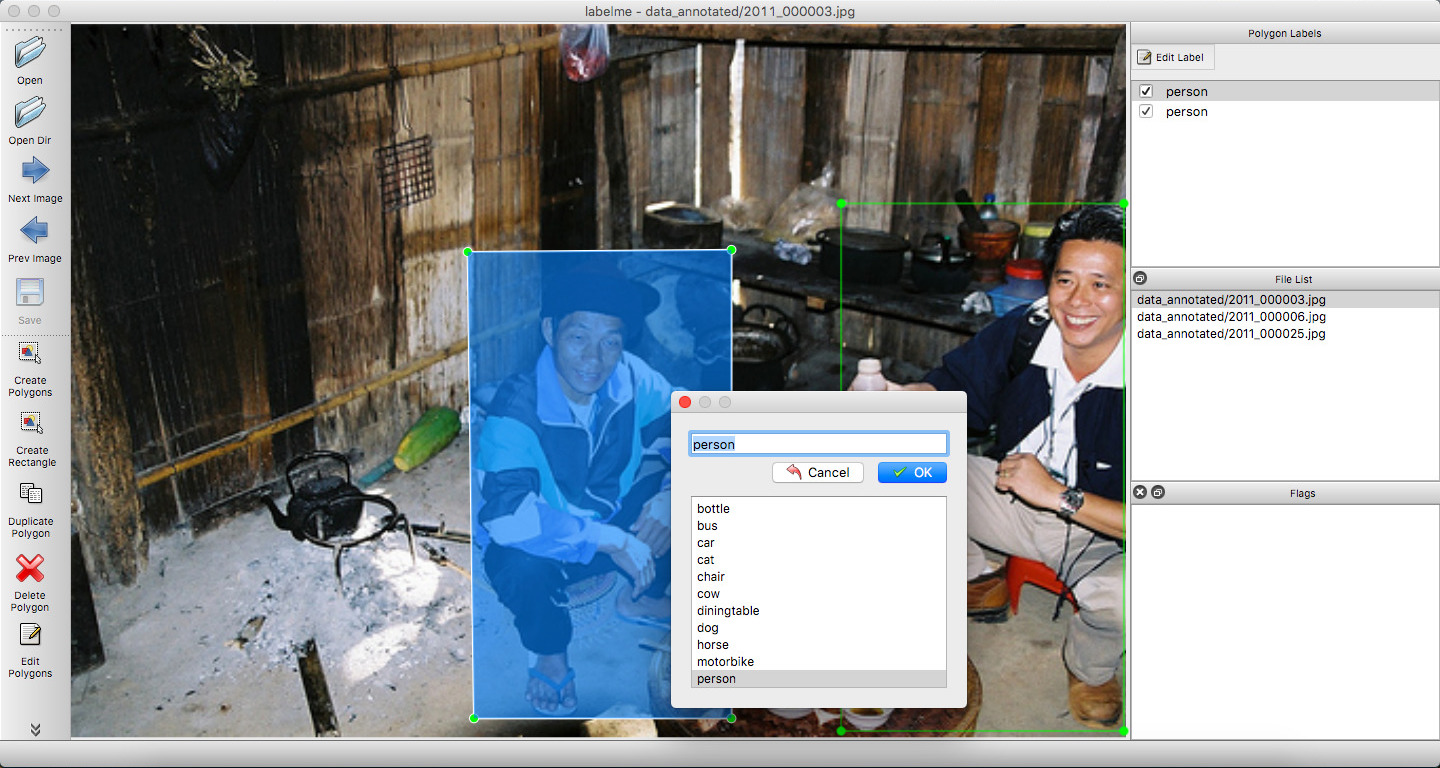

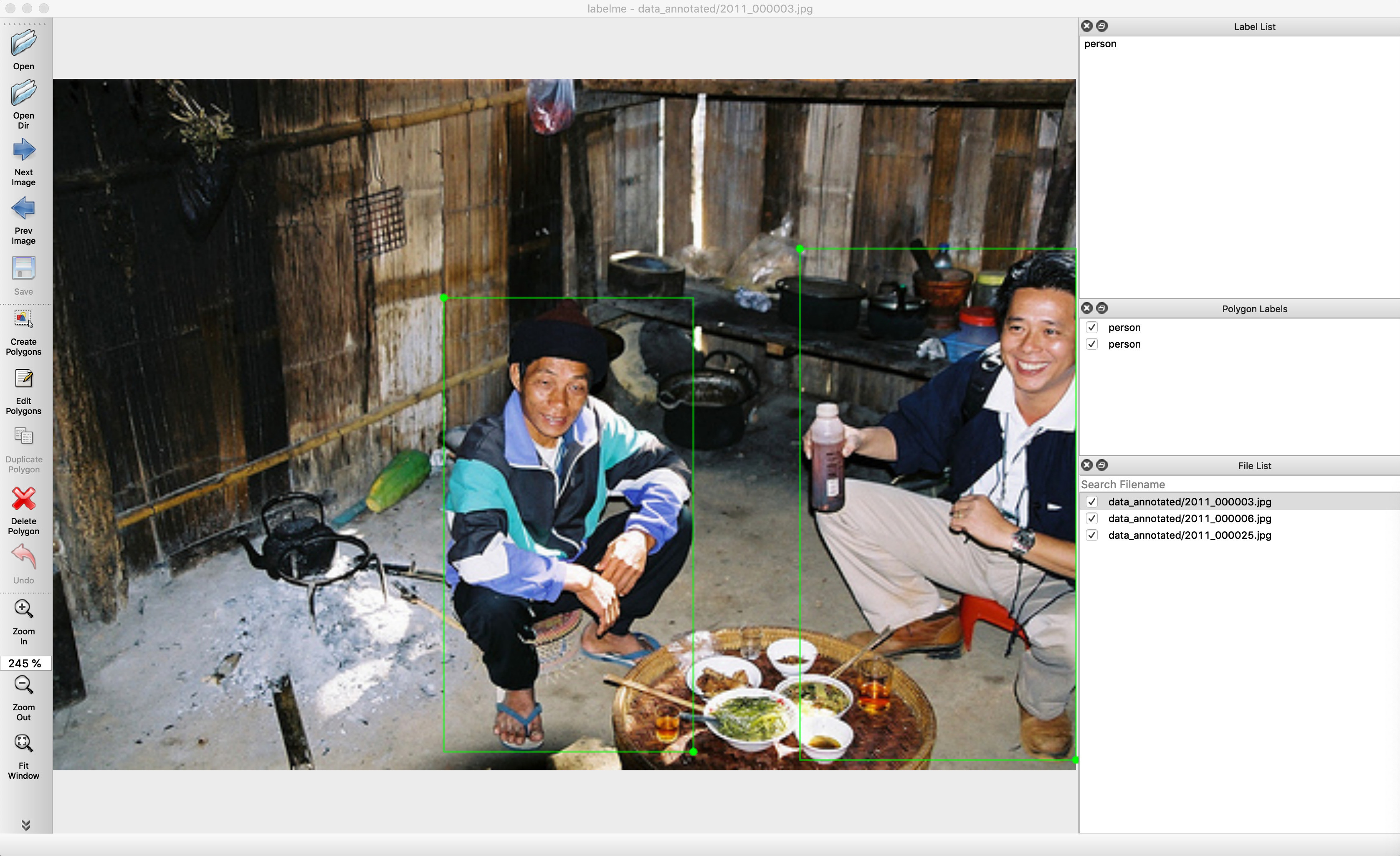

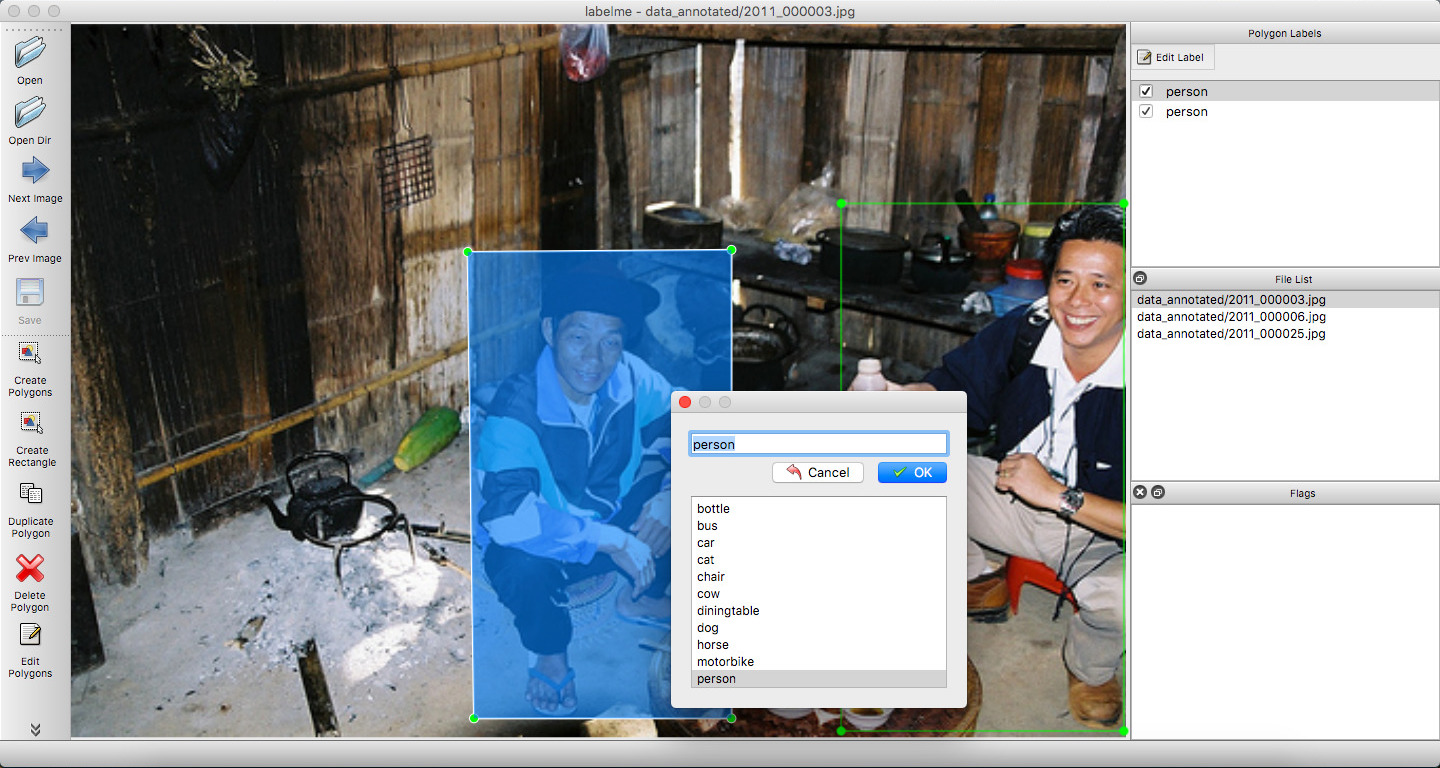

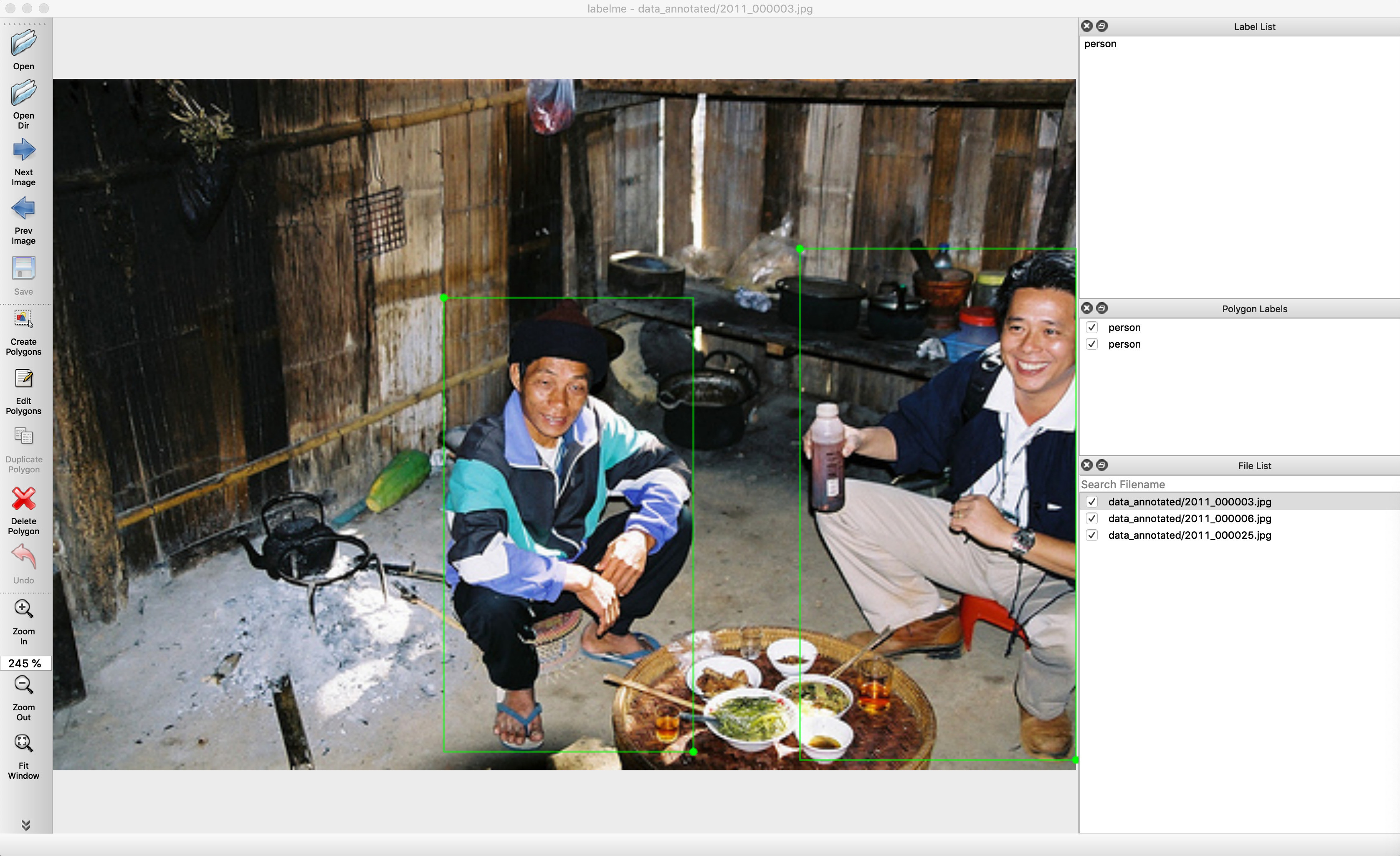

Convert bounding box annotated files to VOC-like dataset
