Merge branch 'develop' into feature/change_op_creation

Showing

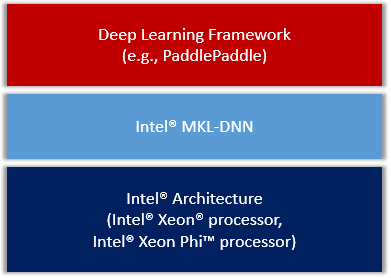

doc/design/mkldnn/README.MD

0 → 100644

9.7 KB

paddle/scripts/submit_local.sh.in

100644 → 100755

File mode changed from 100644 to 100755

paddle/setup.py.in

Deleted

100644 → 0