commit chapter5

Showing

chapter5/code.py

0 → 100755

chapter5/data/arima_data.xls

0 → 100755

File added

chapter5/data/bankloan.xls

0 → 100755

File added

chapter5/data/menu_orders.xls

0 → 100755

File added

chapter5/data/sales_data.xls

0 → 100755

File added

chapter5/tmp/apriori_rules.xls

0 → 100644

File added

chapter5/tmp/data_type.xls

0 → 100755

File added

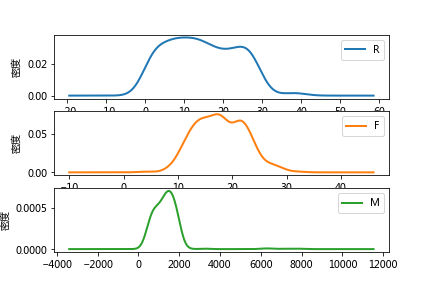

chapter5/tmp/pd_0.png

0 → 100755

19.1 KB

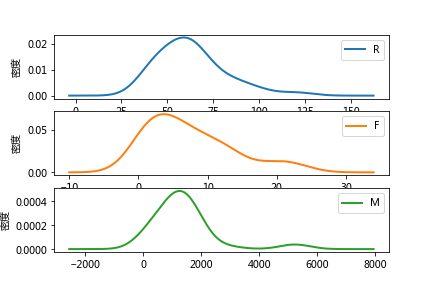

chapter5/tmp/pd_1.png

0 → 100755

21.4 KB

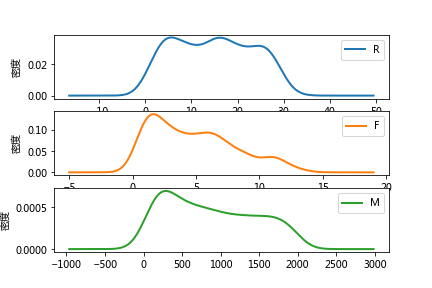

chapter5/tmp/pd_2.png

0 → 100755

20.8 KB