add some chapters

ch10/cifar10_train.py

0 → 100644

ch10/nb.py

0 → 100644

ch10/resnet.py

0 → 100644

ch10/resnet18_train.py

0 → 100644

ch11/nb.py

0 → 100644

ch11/pretrained.py

0 → 100644

ch11/sentiment_analysis_cell.py

0 → 100644

ch11/sentiment_analysis_layer.py

0 → 100644

ch12/autoencoder.py

0 → 100644

ch12/vae.py

0 → 100644

ch13/dataset.py

0 → 100644

ch13/gan.py

0 → 100644

ch13/gan_train.py

0 → 100644

ch14/REINFORCE_tf.py

0 → 100644

ch14/a3c_tf_cartpole.py

0 → 100644

ch14/dqn_tf.py

0 → 100644

ch14/ppo_tf_cartpole.py

0 → 100644

ch15/pokemon.py

0 → 100644

ch15/resnet.py

0 → 100644

ch15/train_scratch.py

0 → 100644

ch15/train_transfer.py

0 → 100644

ch9/lenna.png

0 → 100644

462.7 KB

ch9/lenna_crop.png

0 → 100644

296.0 KB

ch9/lenna_crop2.png

0 → 100644

299.0 KB

ch9/lenna_eras.png

0 → 100644

216.9 KB

ch9/lenna_eras2.png

0 → 100644

244.4 KB

ch9/lenna_flip.png

0 → 100644

254.9 KB

ch9/lenna_flip2.png

0 → 100644

255.2 KB

ch9/lenna_guassian.png

0 → 100644

452.3 KB

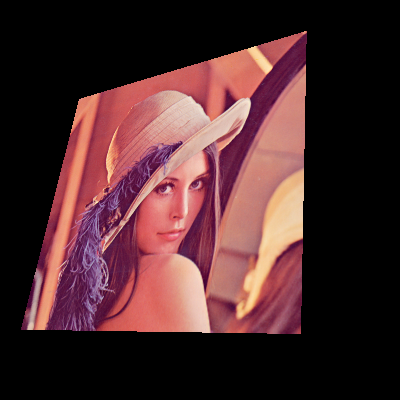

ch9/lenna_perspective.png

0 → 100644

123.4 KB

ch9/lenna_resize.png

0 → 100644

254.8 KB

ch9/lenna_rotate.png

0 → 100644

231.0 KB

ch9/lenna_rotate2.png

0 → 100644

259.0 KB

ch9/nb.py

0 → 100644