feat(tools): add assignment visualizer (#1616)

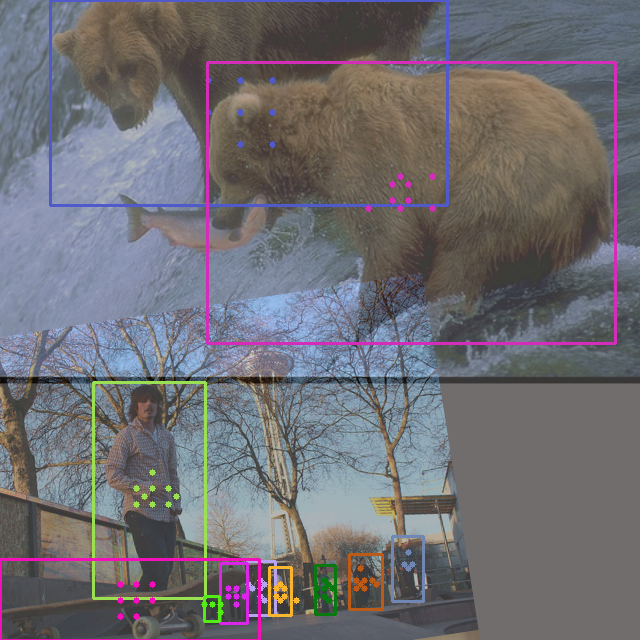

assets/assignment.png

0 → 100644

650.2 KB

docs/assignment_visualization.md

0 → 100644

tools/visualize_assign.py

0 → 100644